Tencent has open-sourced the 7 billion parameter lightweight translation models 'Hunyuan-MT-7B' and 'Hunyuan-MT-Chimera-7B,' which can translate between 33 languages, and claims that they beat existing models in benchmarks.

The development team behind Tencent's large-scale language model '

We're excited to announce the open-source release of Hunyuan-MT-7B, our latest translation model that just won big at WMT2025!

— Hunyuan (@TencentHunyuan) September 1, 2025

Hunyuan-MT-7B is a lightweight 7B model that's a true powerhouse. It dominated the competition by winning 30 out of 31 language categories,… pic.twitter.com/eKUTlGXcyW

tencent/Hunyuan-MT-7B · Hugging Face

https://huggingface.co/tencent/Hunyuan-MT-7B

GitHub - Tencent-Hunyuan/Hunyuan-MT

https://github.com/Tencent-Hunyuan/Hunyuan-MT/

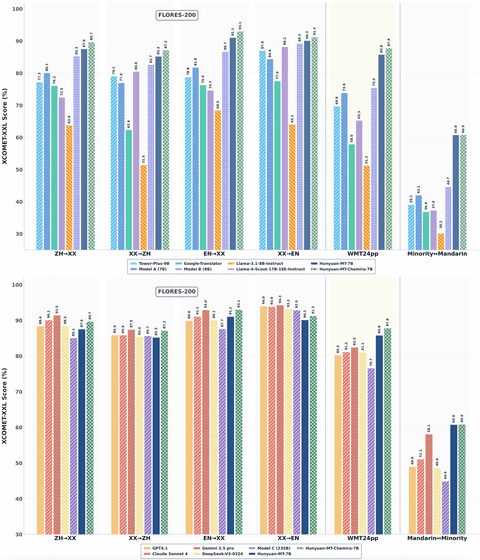

Hunyuan-MT-7B is a lightweight translation model with 7 billion parameters, designed for both high performance and efficiency. At WMT 2025, a global machine translation competition, it took first place in 30 of the 31 language categories it entered. On the Flores200 benchmark it achieved results comparable to the closed-source GPT-4.1, and Tencent describes its performance as industry-leading among open translation models of the same size.

Hunyuan-MT-7B supports mutual translation between 33 languages, including five Chinese minority languages. However, it is not clear whether it supports Japanese. According to Tencent, the model's efficient design lets it process many translation requests with fewer resources, so it can be deployed flexibly in a variety of environments, from high-performance servers to small edge devices.
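As a rough illustration of what requesting a translation from the model could look like, here is a minimal sketch using the Hugging Face transformers library. The instruction wording in `build_messages` is an assumption and should be checked against the model card, and the sketch also assumes a transformers version that supports this architecture, the accelerate package (for `device_map="auto"`), and enough memory for a 7B model.

```python
from typing import Dict, List

# Repository name taken from the Hugging Face link in this article.
MODEL_ID = "tencent/Hunyuan-MT-7B"


def build_messages(text: str, target_language: str) -> List[Dict[str, str]]:
    """Wrap one source segment in a single-turn chat message.

    The instruction wording is an assumption, not a confirmed template.
    """
    prompt = (
        f"Translate the following segment into {target_language}, "
        f"without additional explanation.\n\n{text}"
    )
    return [{"role": "user", "content": prompt}]


def translate(text: str, target_language: str, max_new_tokens: int = 256) -> str:
    """Run one translation locally (downloads the weights on first call)."""
    # Imported lazily so build_messages() works without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16, device_map="auto"
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(text, target_language),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `translate("It's on the house.", "Chinese")` would then return the model's output as a string. The model and tokenizer are reloaded on every call here for brevity; a real service would load them once.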

Hunyuan-MT-Chimera-7B is billed as the industry's first open-source translation ensemble model. Rather than translating directly, it integrates and refines multiple candidate translations into a single higher-quality result. Tencent expects this approach to improve accuracy, especially in specialized fields.
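The ensemble idea can be sketched in a few lines: gather several candidate translations, then hand them to a second model that merges them. This is only an illustration of the general pattern. The function names and the prompt wording below are hypothetical and are not the template Hunyuan-MT-Chimera-7B actually uses.

```python
from typing import Callable, List


def build_fusion_prompt(
    source: str, target_language: str, candidates: List[str]
) -> str:
    """Format the source and numbered candidates for a refinement model.

    Hypothetical prompt wording, for illustration only.
    """
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(candidates, start=1))
    return (
        f"Source text:\n{source}\n\n"
        f"Candidate translations into {target_language}:\n{numbered}\n\n"
        "Combine the candidates into one improved translation. "
        "Output only the final translation."
    )


def ensemble_translate(
    source: str,
    target_language: str,
    translators: List[Callable[[str, str], str]],
    refine: Callable[[str], str],
) -> str:
    """Collect one candidate per translator, then let `refine` merge them."""
    candidates = [t(source, target_language) for t in translators]
    return refine(build_fusion_prompt(source, target_language, candidates))
```

Here `translators` could be several base models (or several sampled outputs of one model), and `refine` would be a call into the ensemble model.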

In addition to the standard versions, Hunyuan-MT-7B and Hunyuan-MT-Chimera-7B are each available as a quantized model in FP8 format, which can improve inference efficiency and lower the barrier to deployment.
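A back-of-the-envelope calculation shows why FP8 lowers the deployment barrier. The sketch below assumes the standard checkpoint stores weights in a 16-bit format and that the model has exactly 7 billion parameters; it counts weight storage only and ignores activations and the KV cache.

```python
PARAMS = 7_000_000_000  # nominal parameter count ("7B")

# Bytes needed to store one weight in each format.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


for fmt in ("bf16", "fp8"):
    print(f"{fmt}: {weight_memory_gb(PARAMS, fmt):.1f} GB")
# bf16: 14.0 GB
# fp8: 7.0 GB
```

Under these assumptions, FP8 roughly halves the memory needed just to hold the weights.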

The paper introduces a method for fine-tuning the model using a framework called LLaMA-Factory. For deployment, the model supports major inference frameworks such as 'TensorRT-LLM,' 'vLLM,' and 'SGLang.' Ready-to-use Docker images are also provided, making it relatively easy for developers to deploy the model in their own environment and start using it.
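As one example of the deployment path, vLLM can expose a model through an OpenAI-compatible HTTP server (for instance with `vllm serve tencent/Hunyuan-MT-7B`). The sketch below builds and sends a chat-completion request to such a server using only the Python standard library. The host, port, and prompt wording are assumptions, as is the premise that a server is already running locally.

```python
import json
import urllib.request


def build_request(
    text: str,
    target_language: str,
    base_url: str = "http://localhost:8000",  # assumed local vLLM server
    model: str = "tencent/Hunyuan-MT-7B",
) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Prompt wording is an assumption; see the model card.
                "content": (
                    f"Translate the following segment into {target_language}, "
                    f"without additional explanation.\n\n{text}"
                ),
            }
        ],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def translate_via_server(text: str, target_language: str) -> str:
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(build_request(text, target_language)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the endpoint follows the OpenAI chat-completions format, existing OpenAI client libraries pointed at the same base URL should work as well.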

Hunyuan-MT-7B and Hunyuan-MT-Chimera-7B are released as open source models under a unique license called the 'Tencent Hunyuan Community License.' This license is based on Apache 2.0 and allows for research and commercial use, but any service using the model with more than 100 million monthly active users must obtain a separate license from Tencent. Furthermore, use is restricted in some regions, including the European Union, the United Kingdom, and South Korea.

The Hunyuan-MT-7B and Hunyuan-MT-Chimera-7B models are available for free download on Hugging Face, GitHub, and ModelScope.

Hunyuan-MT - a tencent Collection

https://huggingface.co/collections/tencent/hunyuan-mt-68b42f76d473f82798882597

GitHub - Tencent-Hunyuan/Hunyuan-MT

https://github.com/Tencent-Hunyuan/Hunyuan-MT/

Hunyuan-MT collection · Tencent-Hunyuan · ModelScope Community

https://modelscope.cn/collections/Hunyuan-MT-2ca6b8e1b4934f

in Software, Posted by log1i_yk