Alibaba announces 'Qwen3-Coder', an open source coding model comparable to Claude Sonnet 4

The research team behind Alibaba's large-scale language model 'Qwen' has announced 'Qwen3-Coder', an open-source coding model.

Qwen3-Coder: Agentic Coding in the World | Qwen

https://qwenlm.github.io/blog/qwen3-coder/

GitHub - QwenLM/Qwen3-Coder: Qwen3-Coder is the code version of Qwen3, the large language model series developed by Qwen team, Alibaba Cloud.

https://github.com/QwenLM/Qwen3-Coder

The main feature of Qwen3-Coder is its long context: it natively supports 256K tokens and can be extended to up to 1 million tokens using YaRN (Yet another RoPE extensioN method), a context-extension technique. This makes it possible to handle large-scale tasks such as understanding an entire repository. It also supports 358 programming languages, including C++, Java, Python, Ruby, Swift, and ABAP. While specialized for coding, it retains the general-purpose and mathematical capabilities of its base model.
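The announcement only names YaRN, so here is a rough illustration of how YaRN stretches rotary position embeddings (RoPE). This sketch follows the formulation in the YaRN paper: "NTK-by-parts" interpolation, which divides low-frequency RoPE dimensions by the scale factor, leaves high-frequency ones untouched, and blends linearly in between, plus an attention temperature term. The head dimension, RoPE base, and the α/β ramp thresholds used here are illustrative assumptions, not Qwen3-Coder's published configuration. A scale factor of 4 applied to the native 262,144-token (256K) window gives 1,048,576 tokens, roughly the 1M figure quoted above.

```python
import math


def yarn_frequencies(head_dim, base, scale, original_ctx, alpha=1.0, beta=32.0):
    """Return YaRN-adjusted RoPE angular frequencies (NTK-by-parts interpolation).

    head_dim, base, alpha and beta are illustrative assumptions, not values
    taken from Qwen3-Coder's actual configuration.
    """
    adjusted = []
    for i in range(head_dim // 2):
        theta = base ** (-2 * i / head_dim)      # original RoPE frequency for this pair of dims
        wavelength = 2 * math.pi / theta
        r = original_ctx / wavelength            # how many full rotations fit in the original context
        if r < alpha:
            gamma = 0.0                          # low frequency: fully interpolate (divide by scale)
        elif r > beta:
            gamma = 1.0                          # high frequency: keep the original frequency
        else:
            gamma = (r - alpha) / (beta - alpha) # linear ramp between the two regimes
        adjusted.append((1 - gamma) * theta / scale + gamma * theta)
    return adjusted


def yarn_attention_scale(scale):
    """YaRN attention temperature factor: sqrt(1/t) = 0.1 * ln(s) + 1."""
    return 0.1 * math.log(scale) + 1.0


if __name__ == "__main__":
    native_ctx = 262_144   # "256K" native context window
    scale = 4.0            # 262,144 * 4 = 1,048,576 tokens, i.e. roughly 1M
    freqs = yarn_frequencies(head_dim=128, base=1_000_000.0, scale=scale, original_ctx=native_ctx)
    print("highest-frequency dim:", freqs[0])
    print("lowest-frequency dim:", freqs[-1])
    print("attention scale:", yarn_attention_scale(scale))
```

With these assumed values, the highest-frequency dimension keeps its original frequency while the lowest-frequency dimension is divided by the full factor of 4, which is the behavior the ramp is designed to produce.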

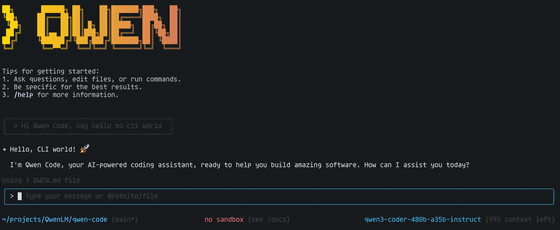

To get the most out of the model, Alibaba has also open-sourced Qwen Code, a command-line tool for agentic coding. Qwen Code is a fork of Gemini CLI.

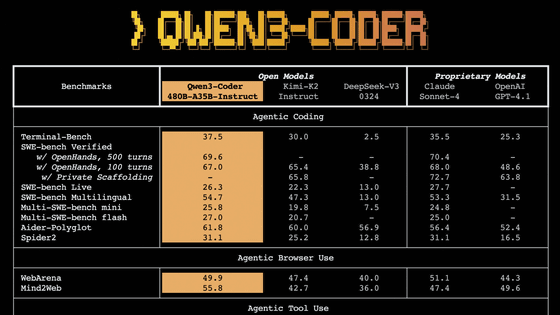

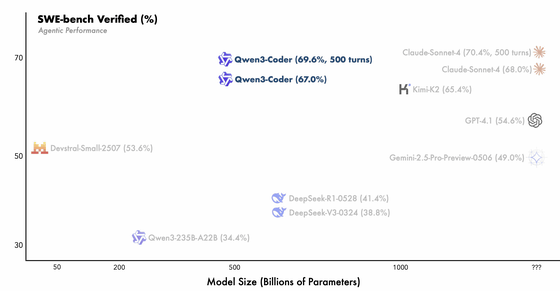

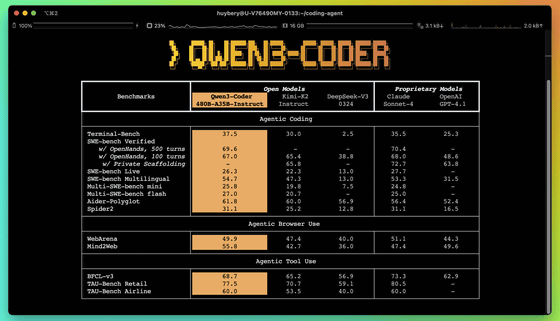

In terms of performance, Alibaba claims that Qwen3-Coder sets new state-of-the-art results among open-source models in agentic coding, agentic browser use, and agentic tool use.

According to Alibaba, 7.5 trillion tokens of data, 70% of which is code, were used in pre-training. Alibaba also used Qwen2.5-Coder to clean up and rewrite noisy data, which it says significantly improved overall data quality.
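Taking the reported figures at face value, the pre-training mix splits roughly as follows:

$$
\underbrace{0.70 \times 7.5\,\text{T}}_{\text{code}} = 5.25\,\text{T tokens},
\qquad
\underbrace{0.30 \times 7.5\,\text{T}}_{\text{non-code}} = 2.25\,\text{T tokens}
$$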

In the post-training phase, two reinforcement learning (RL) techniques were applied at scale. First, code reinforcement learning (Code RL) was applied to a wide range of real-world coding tasks, significantly improving code execution success rates. Second, long-horizon reinforcement learning was introduced for tasks that require multi-turn interaction, such as SWE-Bench. To support this, Alibaba built a highly scalable system on Alibaba Cloud infrastructure that can run roughly 20,000 independent environments in parallel.
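Alibaba has not published this RL infrastructure, so the following is only a toy illustration of the general idea. RL for code is commonly driven by whether generated code actually runs and passes tests, and rollouts are spread across many isolated environments. In this sketch, each candidate program is run against test cases in its own subprocess, which stands in for an environment, and a thread pool fans the work out. The function names, the binary pass/fail reward, and the thread-pool design are hypothetical choices. A system at the 20,000-environment scale would use sandboxed containers across many machines rather than local subprocesses.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def execution_reward(candidate_src: str, test_src: str, timeout: float = 10.0) -> float:
    """Hypothetical reward: 1.0 if the candidate code passes its tests, else 0.0.

    Runs the program in a separate Python process. This is NOT a security
    sandbox; real training systems isolate untrusted code far more strictly.
    """
    program = candidate_src + "\n\n" + test_src
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 0.0  # treat hangs as failures
    return 1.0 if result.returncode == 0 else 0.0


def score_batch(candidates, test_src, max_workers=8):
    """Score many candidates in parallel; each one runs in its own process ("environment")."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda c: execution_reward(c, test_src), candidates))


if __name__ == "__main__":
    tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0"
    candidates = [
        "def add(a, b):\n    return a + b",  # correct implementation
        "def add(a, b):\n    return a - b",  # buggy implementation
    ]
    print(score_batch(candidates, tests))
```

In an actual RL loop, rewards like these would feed a policy update for the model that generated the candidates; that part is omitted here.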

Qwen3-Coder comes in multiple variants, but at the time of writing, 'Qwen3-Coder-480B-A35B-Instruct' is available on Hugging Face and ModelScope, along with an FP8-quantized version, 'Qwen3-Coder-480B-A35B-Instruct-FP8', for more efficient inference. The Qwen3-Coder API can also be accessed directly through Alibaba Cloud Model Studio.
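The "480B-A35B" in the model name denotes a Mixture-of-Experts model with 480B total parameters, of which about 35B are activated per token. All 480B parameters still have to be held in memory, which is where FP8 helps: it stores each weight in one byte instead of two for BF16. The back-of-the-envelope sketch below estimates weight memory only. It deliberately ignores the KV cache, activations, and quantization scale tensors, so actual deployments need more memory than these figures suggest.

```python
# Rough weight-only memory estimate. Ignores KV cache, activations and
# quantization scale tensors, so real deployments need more than this.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}

TOTAL_PARAMS = 480e9   # "480B": total parameters across all experts
ACTIVE_PARAMS = 35e9   # "A35B": parameters activated per token


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("bf16", "fp8"):
        print(f"{dtype}: ~{weight_memory_gb(TOTAL_PARAMS, dtype):.0f} GB of weights")
    print(f"active fraction per token: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")
```

Because only about 35B parameters participate in each token's forward pass, per-token compute is much closer to that of a 35B dense model, even though memory must accommodate all 480B.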

in Software, Posted by log1i_yk