OpenAI's open-weight model 'gpt-oss' can easily be run on a personal PC with Ollama

On August 5, 2025, OpenAI released the open-weight model 'gpt-oss'.

OpenAI gpt-oss · Ollama Blog

https://ollama.com/blog/gpt-oss

OpenAI's New Models on RTX GPUs | NVIDIA Blog

https://blogs.nvidia.com/blog/rtx-ai-garage-openai-oss/

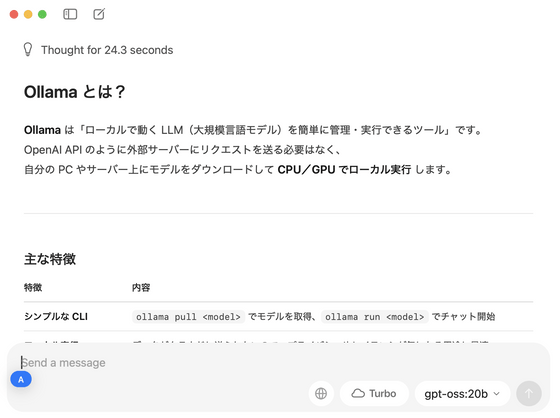

One tool for running gpt-oss is 'Ollama'. Ollama has partnered with OpenAI, so gpt-oss can be used directly through Ollama.

Ollama and OpenAI are also working with NVIDIA, which says its RTX series GPUs can take full advantage of the gpt-oss models.

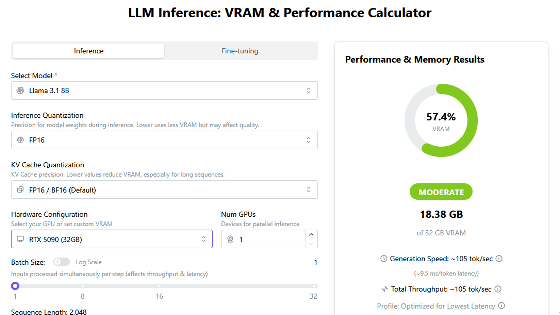

gpt-oss is relatively lightweight. The smaller model, 'gpt-oss-20b', is optimized for systems with 16GB or more of VRAM or unified memory, which in the RTX series means an RTX 4080 or RTX 5070 Ti. If VRAM is insufficient, part of the work can be offloaded to the CPU, but execution will be slower. The full-size model, 'gpt-oss-120b', is optimized for 60GB or more of VRAM or unified memory.
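The memory guidance above boils down to a simple threshold check. The sketch below uses a hypothetical helper, `suggest_model` (not part of Ollama), that picks the largest variant whose suggested memory fits. It treats 16GB and 60GB as guidelines rather than hard limits, since a machine below 16GB can still run gpt-oss-20b with CPU offload, just more slowly.

```python
from typing import Optional

# Hypothetical table built from the figures quoted above: (model, suggested GB).
# Ordered largest first so the first match is the biggest model that fits.
MODEL_MEMORY_GB = [
    ("gpt-oss-120b", 60),  # full-size model
    ("gpt-oss-20b", 16),   # smaller model
]


def suggest_model(memory_gb: float) -> Optional[str]:
    """Return the largest gpt-oss variant whose suggested memory is met.

    Returns None when even the 16GB guideline for gpt-oss-20b is not met;
    the model may still run with CPU offload, only more slowly.
    """
    for name, required_gb in MODEL_MEMORY_GB:
        if memory_gb >= required_gb:
            return name
    return None


if __name__ == "__main__":
    for mem in (8, 16, 32, 64):
        print(f"{mem}GB -> {suggest_model(mem)}")
```

By this rule, a 32GB machine such as the MacBook Air used in this article lands on gpt-oss-20b.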

This time I installed it on a MacBook Air with an M4 chip and 32GB of memory.

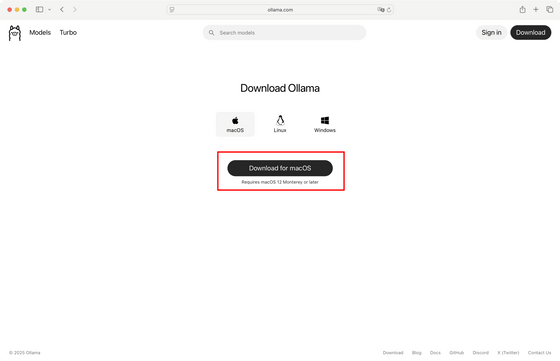

First, download the macOS version of Ollama from

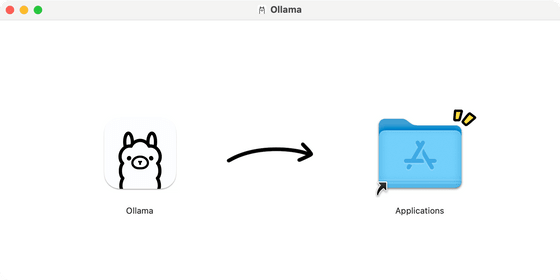

Once downloaded, drag the icon from left to right to install.

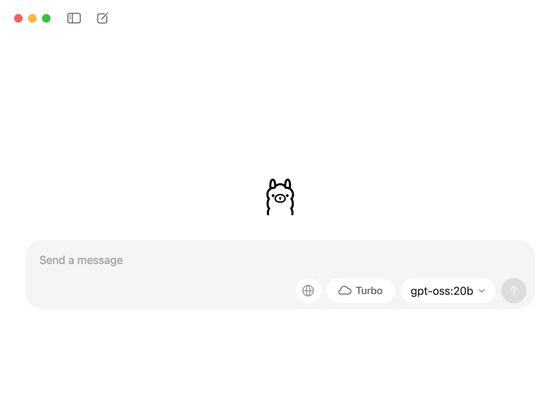

Launch the installed Ollama app.

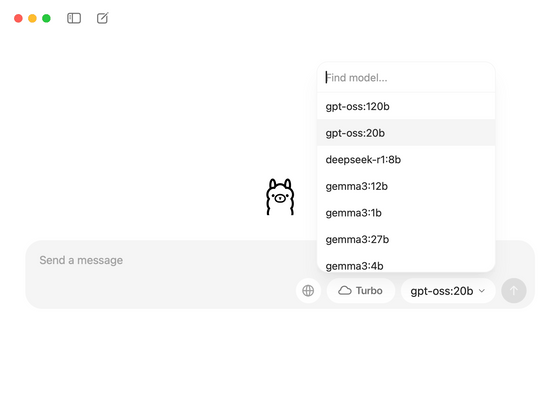

The list of available models included 'gpt-oss-20b' and 'gpt-oss-120b'.

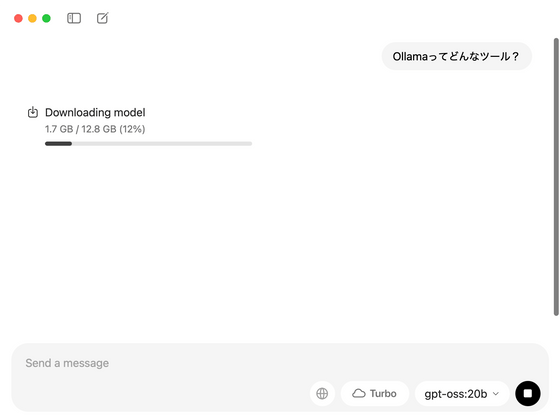

When I selected 'gpt-oss-20b' and entered a prompt, Ollama first began downloading the model, which is 12.8GB.

The download finished within a few minutes, and the model then began printing its response to the prompt.

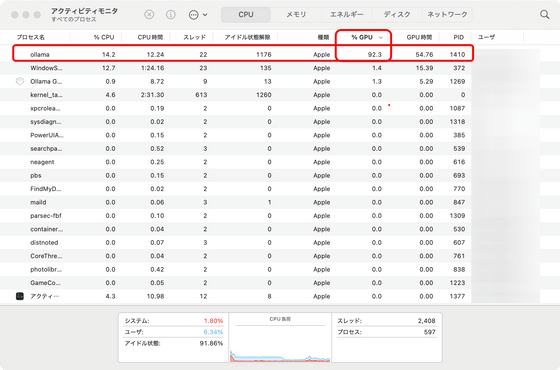
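The app pulls the model automatically on first use, as happened here. If you would rather fetch it from a script, Ollama's local REST API (by default at `http://localhost:11434`) has a `/api/pull` endpoint that streams one JSON status object per line; in Ollama's library the smaller model is tagged `gpt-oss:20b`. This is a minimal sketch assuming that default address; `stream_pull` and `pull_progress` are hypothetical helper names, and the progress figure simply sums the per-layer `total` and `completed` byte counts reported so far.

```python
import json
import urllib.request
from typing import Dict, Iterable, Iterator, Tuple

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def stream_pull(model: str = "gpt-oss:20b") -> Iterator[str]:
    """POST to /api/pull and yield each streamed JSON status line as text."""
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/pull",
        data=json.dumps({"model": model}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        for raw in resp:
            yield raw.decode("utf-8")


def pull_progress(lines: Iterable[str]) -> Iterator[Tuple[str, float]]:
    """Yield (latest status, percent downloaded across layers seen so far) per line."""
    totals: Dict[str, int] = {}     # layer digest -> size in bytes
    completed: Dict[str, int] = {}  # layer digest -> bytes received so far
    status = ""
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        event = json.loads(line)
        status = event.get("status", status)
        digest = event.get("digest")
        if digest and "total" in event:
            totals[digest] = event["total"]
            completed[digest] = event.get("completed", 0)
        total = sum(totals.values())
        percent = 100.0 * sum(completed.values()) / total if total else 0.0
        yield status, percent


if __name__ == "__main__":
    for status, percent in pull_progress(stream_pull()):
        print(f"\r{status}: {percent:5.1f}%", end="", flush=True)
    print()
```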

While the model was running, Activity Monitor showed GPU usage of over 90%.

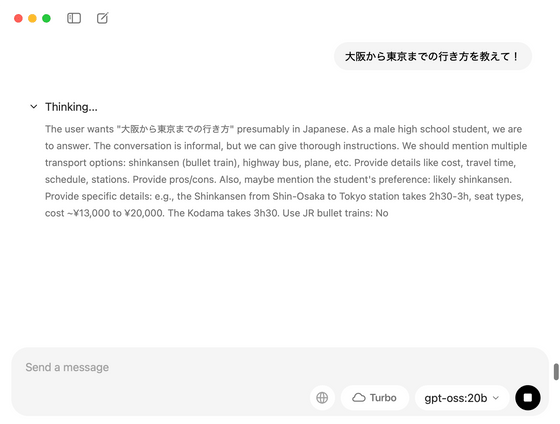

gpt-oss is a reasoning model, so its reasoning process is displayed along with the answer.

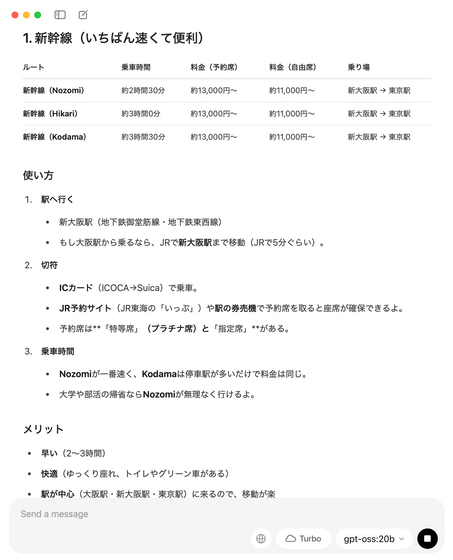
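When calling gpt-oss through Ollama's `/api/chat` endpoint instead of the app, recent Ollama versions return the reasoning in a separate `thinking` field alongside the final `content`. The sketch below assumes that response shape and the default local address; `build_chat_request`, `send_chat` and `split_reasoning` are hypothetical names, and older Ollama versions may not populate `thinking` at all.

```python
import json
import urllib.request
from typing import Any, Dict, Tuple

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default address


def build_chat_request(prompt: str, model: str = "gpt-oss:20b") -> Dict[str, Any]:
    """Build a single-turn, non-streaming /api/chat payload."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream
    }


def send_chat(payload: Dict[str, Any]) -> str:
    """POST the payload to the local Ollama server and return the raw JSON body."""
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


def split_reasoning(response_body: str) -> Tuple[str, str]:
    """Return (reasoning, answer); reasoning is '' if no 'thinking' field is present."""
    message = json.loads(response_body).get("message", {})
    return message.get("thinking", ""), message.get("content", "")


if __name__ == "__main__":
    body = send_chat(build_chat_request("How do I get from Osaka to Tokyo?"))
    reasoning, answer = split_reasoning(body)
    print("--- reasoning ---\n" + reasoning)
    print("--- answer ---\n" + answer)
```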

When I asked how to get from Osaka to Tokyo, I got the following output. It includes names that do not exist, such as JR Central's 'Ippu.'

OpenAI's Dominik Kundel explains how to chat with gpt-oss from the terminal in the following article.

How to run gpt-oss locally with Ollama

https://cookbook.openai.com/articles/gpt-oss/run-locally-ollama
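For a terminal chat without the app, one approach is a small loop over Ollama's `/api/chat` endpoint that resends the conversation history on each turn. This is a sketch, not the method from the article above: it assumes Ollama is running at its default `http://localhost:11434` with `gpt-oss:20b` already downloaded, and `ollama_chat` and `ChatSession` are hypothetical names. The transport function is injectable so the history logic can be exercised without a server.

```python
import json
import urllib.request
from typing import Callable, Dict, List

Message = Dict[str, str]
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default address


def ollama_chat(messages: List[Message], model: str = "gpt-oss:20b") -> Message:
    """Send the whole conversation to /api/chat and return the assistant message."""
    payload = {"model": model, "messages": messages, "stream": False}
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]


class ChatSession:
    """Keeps the running history so each turn sees earlier questions and answers."""

    def __init__(self, send: Callable[[List[Message]], Message] = ollama_chat):
        self.send = send
        self.history: List[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.send(self.history)
        answer = reply.get("content", "")
        # Store only the final answer, not any 'thinking' text, in the history.
        self.history.append({"role": "assistant", "content": answer})
        return answer


if __name__ == "__main__":
    session = ChatSession()
    while True:
        try:
            line = input(">>> ")
        except EOFError:
            break
        if line.strip() in {"exit", "quit"}:
            break
        if line.strip():  # ignore empty input
            print(session.ask(line))
```

Run as a script, it prompts with `>>>`; typing `exit` or `quit` (or sending EOF) ends the session. Keeping only the answer text in the history is a design choice of this sketch that stops earlier reasoning from being fed back on later turns.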
