NVIDIA Announces Rubin CPX, an AI Chip Optimized for Million-Token Inputs in Video Generation and Large-Scale Coding Tasks

On September 9, 2025, NVIDIA announced Rubin CPX, a GPU specialized for AI processing that can handle inputs of millions of tokens. NVIDIA says Rubin CPX can significantly exceed the design limits of current systems in tasks such as generating long videos and coding work that involves processing long contexts.

NVIDIA Unveils Rubin CPX: A New Class of GPU Designed for Massive-Context Inference | NVIDIA Newsroom

NVIDIA Rubin CPX Accelerates Inference Performance and Efficiency for 1M+ Token Context Workloads | NVIDIA Technical Blog

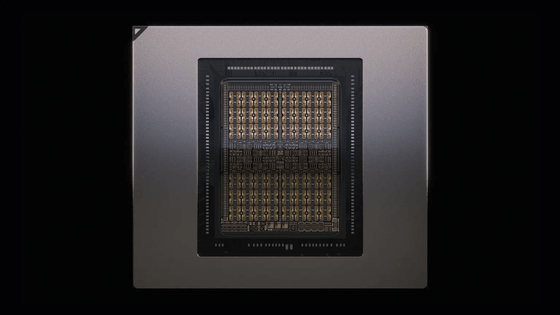

According to NVIDIA, AI models can require up to 1 million tokens to process one hour of video, which approaches the limits of conventional GPU computing. The newly announced Rubin CPX pushes past these limits by integrating a video decoder, a video encoder, and long-context inference processing on a single chip, which NVIDIA says enables new capabilities in applications such as video search and high-quality video generation.

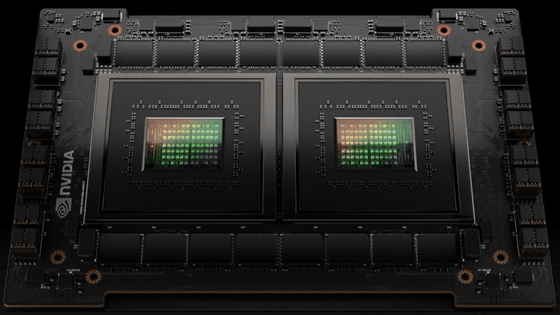

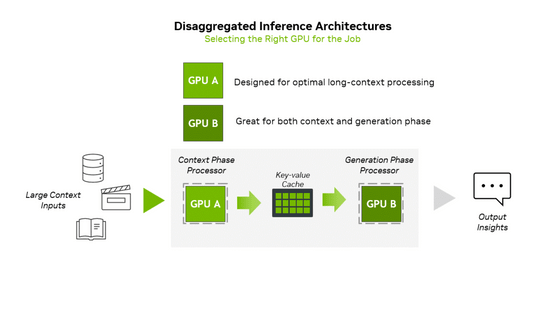

Rubin CPX is a chip designed to support a 'disaggregated inference' approach to complex AI processing. AI inference typically consists of two distinct phases: the context phase and the generation phase. The context phase ingests and analyzes large amounts of input data to produce the first output token, so it requires high-throughput compute. The generation phase, by contrast, relies on high-speed memory transfers and high-speed interconnects such as NVLink to sustain token-by-token output performance. Disaggregated inference processes these two phases independently, so compute and memory resources can each be optimized for the phase they serve. Rubin CPX targets the compute-heavy context phase.
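The two-phase split described above can be illustrated with a toy sketch. This is not NVIDIA's software or any real inference API: the names `KVCache`, `context_phase`, `generation_phase`, and `run_inference` are hypothetical, and the "model" is a placeholder arithmetic rule. It only shows the shape of the handoff: the context phase processes the whole input once and produces cached state, and the generation phase then emits tokens one at a time while repeatedly reading that state.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class KVCache:
    """Hypothetical stand-in for the state handed from one phase to the other."""
    entries: List[int] = field(default_factory=list)


def context_phase(prompt_tokens: List[int]) -> KVCache:
    # Processes the entire prompt in a single pass.
    # In a real system this step is dominated by compute throughput.
    return KVCache(entries=list(prompt_tokens))


def generation_phase(cache: KVCache, max_new_tokens: int) -> List[int]:
    # Emits one token per step; every step rereads the whole cache.
    # In a real system this step is dominated by memory bandwidth.
    output: List[int] = []
    for _ in range(max_new_tokens):
        next_token = sum(cache.entries) % 1000  # placeholder "model", not a real one
        cache.entries.append(next_token)
        output.append(next_token)
    return output


def run_inference(prompt_tokens: List[int], max_new_tokens: int) -> List[int]:
    # The two calls could run on different hardware pools,
    # with the cache transferred between them.
    cache = context_phase(prompt_tokens)
    return generation_phase(cache, max_new_tokens)


if __name__ == "__main__":
    print(run_inference([1, 2, 3], max_new_tokens=2))
```

Because the two functions share nothing but the cache object, each could in principle be scheduled on hardware suited to its bottleneck, which is the idea the article attributes to this approach.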

Rubin CPX also uses NVIDIA's

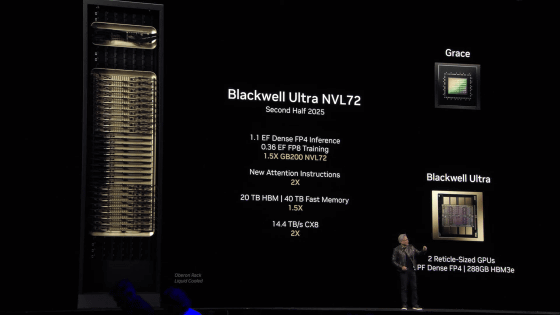

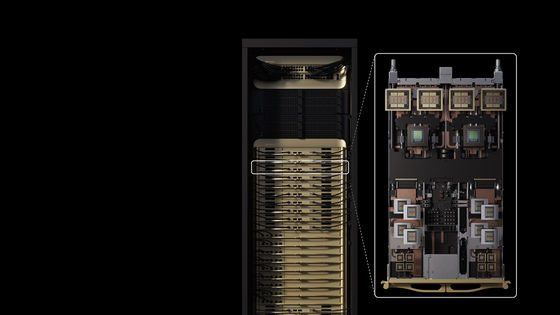

The company also announced the NVIDIA Vera Rubin NVL144 CPX platform, which combines 8 exaflops of AI compute and 100 TB of high-speed memory in a single rack. Within this platform, Rubin CPX works alongside the NVIDIA Vera CPU and Rubin GPU, and NVIDIA says the platform delivers 7.5 times the AI performance of the existing NVIDIA GB300 NVL72 system.
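As a rough sanity check on these figures, the short calculation below derives the baseline they imply. It assumes the 8 exaflops and the 7.5x ratio are measured on the same basis (same numeric precision and workload), which the article does not state, so the result is only an implied value and not an official specification. The function names are illustrative.

```python
# Figures quoted in the article for the Vera Rubin NVL144 CPX rack.
RACK_EXAFLOPS = 8.0            # AI compute per rack
RACK_MEMORY_TB = 100.0         # high-speed memory per rack
SPEEDUP_VS_GB300_NVL72 = 7.5   # claimed AI performance ratio


def implied_baseline_exaflops(rack_exaflops: float, speedup: float) -> float:
    # Baseline performance implied by the claimed ratio
    # (assumes both numbers use the same measurement basis).
    return rack_exaflops / speedup


def memory_per_exaflop_tb(memory_tb: float, exaflops: float) -> float:
    # Memory available per exaflop of compute in the rack.
    return memory_tb / exaflops


if __name__ == "__main__":
    baseline = implied_baseline_exaflops(RACK_EXAFLOPS, SPEEDUP_VS_GB300_NVL72)
    ratio = memory_per_exaflop_tb(RACK_MEMORY_TB, RACK_EXAFLOPS)
    print(f"Implied GB300 NVL72 baseline: {baseline:.2f} exaflops")
    print(f"Memory per exaflop: {ratio:.1f} TB")
```

Under that assumption, the quoted ratio implies a baseline of a little over 1 exaflop for the GB300 NVL72 system.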

'The Vera Rubin platform marks a new leap forward in the state of the art of AI computing with the introduction of the next generation of Rubin GPUs and Rubin CPX processors, a new category of processors,' said Jensen Huang, CEO of NVIDIA. 'Just as the RTX series revolutionized graphics and physical AI, Rubin CPX delivers unparalleled performance for massive-context processing, simultaneously reasoning over knowledge on the scale of millions of tokens.'

Rubin CPX is expected to become available in late 2026.

in Hardware, Posted by log1p_kr