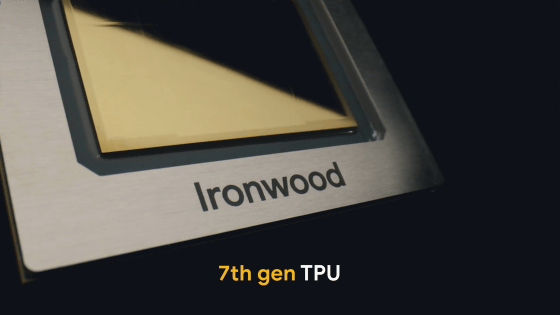

Google announces 7th-generation TPU 'Ironwood', optimized for inference models, with 192GB of memory per chip and per-pod performance more than 24 times that of the world's most powerful supercomputer, 'El Capitan'

Google, which develops its own AI processor, the TPU, has announced the 7th-generation model, 'Ironwood'.

Ironwood: The first Google TPU for the age of inference

https://blog.google/products/google-cloud/ironwood-tpu-age-of-inference/

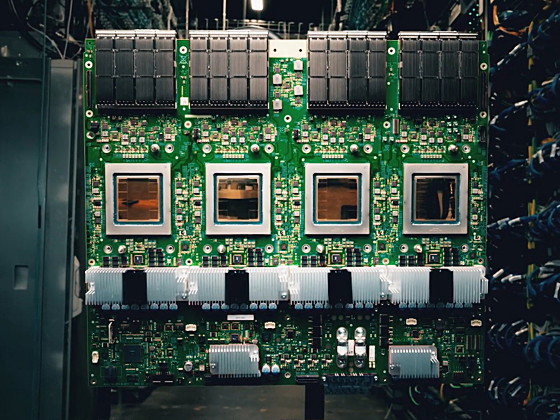

Here's what Ironwood looks like. Ironwood is optimized for large language models (LLMs) that use Mixture of Experts (MoE) and for inference models that perform advanced inference tasks.

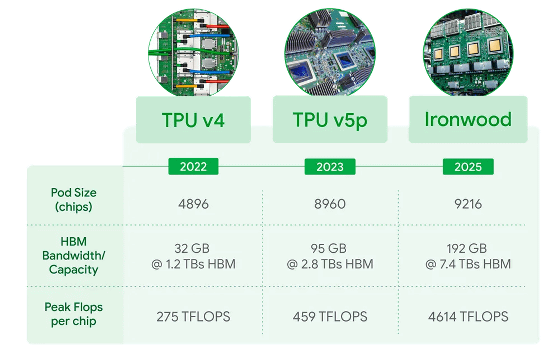

Running MoE models and inference models at high speed requires large-scale parallel processing and efficient memory access. Each Ironwood chip has 192GB of HBM with a bandwidth of 7.4TB/s and delivers 4,614 TFLOPS. Up to 9,216 Ironwood chips can be installed in one pod, for a per-pod performance of 42.5 exaFLOPS (EFLOPS). Google claims this is more than 24 times the performance of 'El Capitan', the world's most powerful supercomputer.

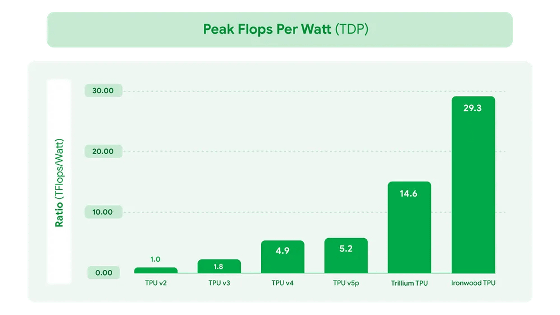
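The per-pod figure quoted above follows from the per-chip numbers by simple multiplication. The following is a minimal sketch of that arithmetic, assuming decimal SI prefixes and peak (perfectly linear) scaling across chips. The function name `pod_totals` is hypothetical, and the pod-wide HBM capacity is derived here rather than quoted in the article.

```python
# Per-chip and per-pod figures as quoted in the article.
CHIPS_PER_POD = 9216
TFLOPS_PER_CHIP = 4614
HBM_GB_PER_CHIP = 192


def pod_totals(chips=CHIPS_PER_POD,
               tflops_per_chip=TFLOPS_PER_CHIP,
               hbm_gb_per_chip=HBM_GB_PER_CHIP):
    """Return (pod EFLOPS, pod HBM in PB), assuming decimal SI prefixes."""
    pod_eflops = chips * tflops_per_chip / 1e6  # 1 EFLOPS = 10^6 TFLOPS
    pod_hbm_pb = chips * hbm_gb_per_chip / 1e6  # 1 PB = 10^6 GB
    return pod_eflops, pod_hbm_pb


eflops, hbm_pb = pod_totals()
print(f"{eflops:.1f} EFLOPS, {hbm_pb:.2f} PB HBM")
# prints "42.5 EFLOPS, 1.77 PB HBM"
```

The product 9,216 × 4,614 TFLOPS comes to about 42.5 EFLOPS, matching the per-pod figure above. The same multiplication applied to memory gives roughly 1.77 PB of HBM per fully populated pod.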

Below is a graph showing the performance per watt of each generation of TPU. Ironwood delivers twice the performance per watt compared to

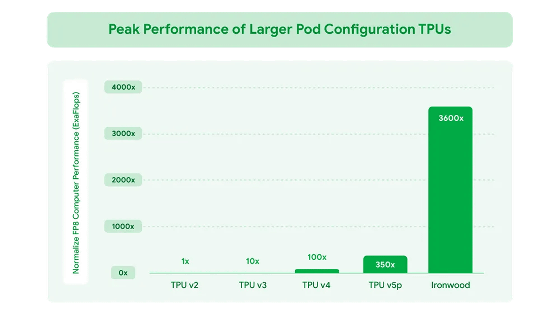

Below is a graph comparing the performance per pod of each generation of TPU. Ironwood delivers more than 10 times the performance of

Ironwood is expected to be available in late 2025.

in Hardware, Posted by log1o_hf