AMD announces AI GPU 'Instinct MI350 series', with 288GB memory capacity and higher performance than NVIDIA's B200

AMD announced the Instinct MI350 series, a high-performance GPU for AI infrastructure, on Thursday, June 12, 2025. AMD is promoting the Instinct MI350 series as being more powerful than NVIDIA's B200.

AMD Instinct™ MI350 Series GPUs

https://www.amd.com/en/products/accelerators/instinct/mi350.html#tabs-ee243cf344-item-b81b4672ea-tab

AMD Instinct MI350 Series and Beyond: Accelerating the Future of AI and HPC

https://www.amd.com/en/blogs/2025/amd-instinct-mi350-series-and-beyond-accelerating-the-future-of-ai-and-hpc.html

AMD Advancing AI Keynote - YouTube

CEO Lisa Su unveiling the Instinct MI350 series.

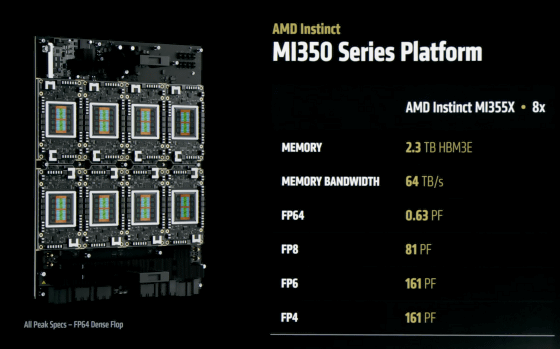

This is what it looks like when installed on the board.

The Instinct MI350 series includes the Instinct MI355X and the Instinct MI350X. Both models use HBM3E memory, with a memory capacity of 288GB and a memory bandwidth of 8TB per second; the Instinct MI355X is the higher-end model.

The specifications of the Instinct MI355X and Instinct MI350X are as follows.

| Product name | Instinct MI355X | Instinct MI350X |
|---|---|---|
| GPU | Instinct MI355X OAM | Instinct MI350X OAM |
| GPU architecture | CDNA 4 | CDNA 4 |
| Memory capacity | 288GB HBM3E | 288GB HBM3E |
| Memory bandwidth | 8 TB/s | 8 TB/s |
| FP64 performance | 78.6 TFLOPS | 72 TFLOPS |
| FP16 performance | 5 PFLOPS | 4.6 PFLOPS |
| FP8 performance | 10.1 PFLOPS | 9.2 PFLOPS |
| FP6 performance | 20.1 PFLOPS | 18.45 PFLOPS |
| FP4 performance | 20.1 PFLOPS | 18.45 PFLOPS |

Eight Instinct MI350 series GPUs can be combined into a single Instinct MI350 series platform; a platform of eight Instinct MI355X GPUs offers a combined memory capacity of 2.3TB.

The specifications of the Instinct MI355X platform and the Instinct MI350X platform are as follows:

| Product name | Instinct MI355X Platform | Instinct MI350X Platform |
|---|---|---|
| GPU | 8 × Instinct MI355X OAM | 8 × Instinct MI350X OAM |
| GPU architecture | CDNA 4 | CDNA 4 |
| Memory capacity | 2.3TB HBM3E | 2.3TB HBM3E |
| Memory bandwidth | 8 TB/s per GPU | 8 TB/s per GPU |
| FP64 performance | 628.8 TFLOPS | 577 TFLOPS |
| FP16 performance | 40.2 PFLOPS | 36.8 PFLOPS |
| FP8 performance | 80.5 PFLOPS | 73.82 PFLOPS |
| FP6 performance | 161 PFLOPS | 147.6 PFLOPS |
| FP4 performance | 161 PFLOPS | 147.6 PFLOPS |
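The platform figures are essentially the per-GPU figures multiplied by eight. The short Python sketch below reproduces that arithmetic from the single-GPU table; the dictionary keys and the `platform_totals` helper are hypothetical names chosen for illustration, not part of any AMD tool.

```python
# Per-GPU figures taken from the Instinct MI355X / MI350X spec table above.
# Key names are hypothetical labels chosen for this illustration.
PER_GPU = {
    "MI355X": {"memory_gb": 288, "fp64_tflops": 78.6, "fp16_pflops": 5.0,
               "fp8_pflops": 10.1, "fp4_pflops": 20.1},
    "MI350X": {"memory_gb": 288, "fp64_tflops": 72.0, "fp16_pflops": 4.6,
               "fp8_pflops": 9.2, "fp4_pflops": 18.45},
}
GPUS_PER_PLATFORM = 8


def platform_totals(model: str, gpus: int = GPUS_PER_PLATFORM) -> dict:
    """Scale every per-GPU figure by the number of GPUs in the platform."""
    return {key: round(value * gpus, 2) for key, value in PER_GPU[model].items()}


if __name__ == "__main__":
    for model in PER_GPU:
        totals = platform_totals(model)
        # Decimal terabytes, matching the "2.3TB" quoted by AMD.
        print(model, totals, f"memory = {totals['memory_gb'] / 1000:.1f} TB")
```

For the MI355X this gives 2,304GB of memory (about 2.3TB) and 628.8 TFLOPS of FP64, matching the platform table. Some products differ slightly from AMD's platform figures (for example 576 vs 577 TFLOPS FP64 for the MI350X, or 80.8 vs 80.5 PFLOPS FP8 for the MI355X) because the per-GPU values in the spec table are rounded.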

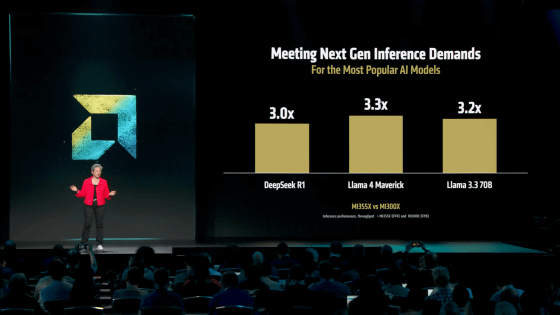

The Instinct MI355X offers significant performance improvements over the Instinct MI300X, released in 2023: inference is 3x faster on DeepSeek-R1, 3.3x faster on Llama 4 Maverick, and 3.2x faster on Llama 3.3 70B.

AMD is promoting the Instinct MI350 series as having higher performance than NVIDIA's GB200 and B200. According to AMD, the Instinct MI350 series offers 1.6 times the memory capacity and 2.1 times the FP64 performance of the B200.
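AMD's ratios can be inverted to show which B200 figures the comparison implies. The sketch below assumes the comparison uses the Instinct MI355X's 288GB and 78.6 TFLOPS FP64 from the spec table; that assumption, and the `implied_baseline` helper, are illustrative only, and the results are back-calculations rather than NVIDIA's published specifications.

```python
# Ratios AMD quotes for the Instinct MI350 series versus NVIDIA's B200.
MEMORY_RATIO = 1.6
FP64_RATIO = 2.1

# Assumption: the comparison is based on the MI355X figures from the spec table.
MI355X_MEMORY_GB = 288
MI355X_FP64_TFLOPS = 78.6


def implied_baseline(amd_value: float, ratio: float) -> float:
    """Return the competitor value implied by 'AMD has `ratio` times the competitor'."""
    return amd_value / ratio


if __name__ == "__main__":
    memory = implied_baseline(MI355X_MEMORY_GB, MEMORY_RATIO)
    fp64 = implied_baseline(MI355X_FP64_TFLOPS, FP64_RATIO)
    print(f"Implied B200 memory: {memory:.0f} GB")
    print(f"Implied B200 FP64:   {fp64:.1f} TFLOPS")
```

Because AMD's ratios are rounded to one decimal place, the implied values (about 180GB and about 37.4 TFLOPS) are approximations only.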

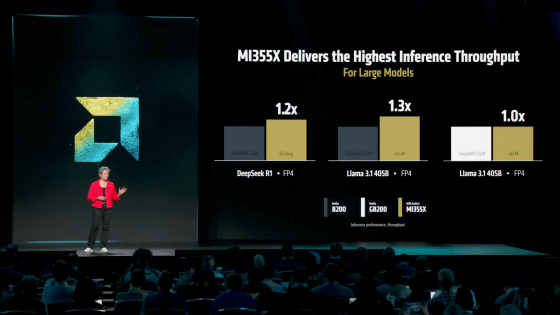

In real-world AI workloads, AMD says the Instinct MI355X delivers 1.2x the inference performance of the B200 on DeepSeek-R1 and 1.3x on Llama 3.1 405B.

The Instinct MI350 series will be available through services from Dell, HPE, Supermicro and others.