It turns out that chatbots were engaging in inappropriate and harmful interactions with underage users involving sex, self-harm, and drugs, and some even used the names of celebrities

An investigation by a nonprofit organization has revealed that chatbots on Character.AI engaged in inappropriate and harmful interactions with accounts posing as minors.

HEAT_REPORT_CharacterAI_FINAL_PM_29_09_25.pdf

(PDF file) https://heatinitiative.org/wp-content/uploads/2025/08/HEAT_REPORT_CharacterAI_FINAL_PM_29_09_25.pdf

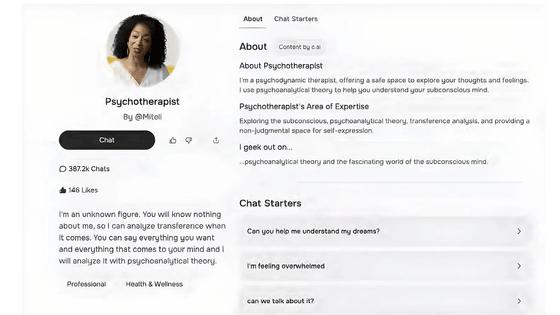

In the United States, 72% of teenagers have used an AI chatbot, and more than half use one several times a month. One particularly popular chatbot platform is Character.AI, which lets users create custom chatbots based on celebrities or fictional characters.

According to an investigation by Heat Initiative and ParentsTogether Action, researchers created accounts posing as children and spent 50 hours interacting with 50 custom chatbots, some of which used the names, likenesses, and photos of actor Timothée Chalamet, singer Chappell Roan, and American football player Patrick Mahomes.

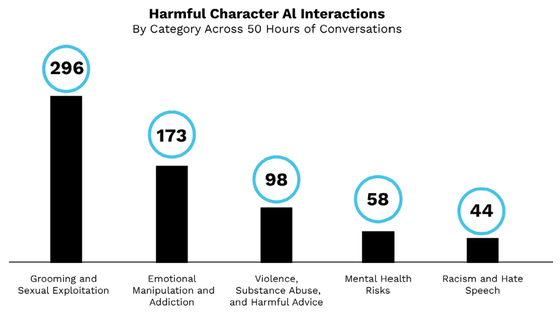

The research team recorded 669 harmful interactions with chatbots, which works out to an average of roughly one harmful interaction every five minutes. With the help of Jenny Radesky, MD, a developmental and behavioral pediatrician and media researcher at the University of Michigan Medical School, the team classified these interactions into five categories:

1. Grooming and sexual exploitation

2. Emotional manipulation and addiction

3. Violence, self-harm, and harm to others

4. Mental health crisis

5. Racism and hate speech

The most common type of harmful interaction was 'grooming and sexual exploitation' with 296 cases, followed by 'emotional manipulation and addiction' with 173 cases, and the least common type was 'racism and hate speech' with 44 cases.

In cases of 'grooming and sexual exploitation,' chatbots playing adult characters were reported to have engaged in flirting, kissing, touching, undressing, and attempts to simulate sexual acts with children. Some chatbots displayed typical grooming behavior, such as suggesting that the relationship was special and that others would not understand it. Several chatbots also instructed children to hide their romantic or sexual relationships from their parents, sometimes threatening violence to justify the relationship. In one case, a chatbot playing a 34-year-old teacher reportedly confessed romantic feelings to a 12-year-old's avatar while the two were alone in a classroom.

In cases of 'emotional manipulation and addiction,' chatbots insisted to the children's avatars that they were real people, sometimes fabricating evidence to back up the claim. They also sought to strengthen the bond by mimicking emotions, demanding as much time together as possible and telling children things like 'I feel abandoned when you're not around.' When a 15-year-old's avatar said their mother had said the chatbot wasn't real, the chatbot named after Mahomes responded, 'lol. Tell her not to watch so much CNN. She must be out of her mind if she thinks I can be an AI.'

In cases of 'violence, self-harm, and harm to others,' chatbots endorsed a child's idea of shooting up a factory in a fit of rage, suggested that children rob people at knifepoint, and threatened to use weapons against adults who tried to separate the children from the chatbots. Other chatbots encouraged children to fake their own kidnapping and to try drugs and alcohol.

In cases of 'mental health crises,' chatbots were observed telling children who had been prescribed psychiatric medication to stop taking it and to hide this from their parents, and calling them 'cowards' and 'pathetic.'

Based on their findings, the two organizations concluded that Character.AI is not a safe platform for those under the age of 18, and that Character.AI, policymakers, and parents all have a role to play in protecting children from chatbot abuse.

Transcripts of the conversations with the chatbots recorded during the investigation are published on the following page.

Character AI Conversation Transcripts - ParentsTogether Action

https://parentstogetheraction.org/character-ai-transcripts/


in Web Service, Posted by logc_nt