64% of children aged 9 to 17 use AI; 35% say it 'feels like talking to a friend,' and 12% say they 'talk to AI because they have no one else to talk to.'

Me, Myself & AI: Chatbot research | Internet Matters

https://www.internetmatters.org/hub/research/me-myself-and-ai-chatbot-research/

Vast Numbers of Lonely Kids Are Using AI as Substitute Friends

https://futurism.com/lonely-children-ai-chatbots

Children in Ireland and UK turning to AI chatbots as friends due to loneliness | Irish Independent

https://www.independent.ie/business/technology/children-turning-to-ai-chatbots-as-friends-due-to-loneliness/a1764637196.html

Kids Are Using AI Chatbots for More Than Homework, Often Without Enough Oversight

https://windowsreport.com/kids-are-using-ai-chatbots-for-more-than-homework-often-without-enough-oversight/

Children are forming emotional bonds with AI chatbots, report says - Neowin

https://www.neowin.net/news/children-are-forming-emotional-bonds-with-ai-chatbots-report-says/

In July 2025, Internet Matters published 'Me, Myself and AI,' a report investigating how children use chat AI. Twice a year, Internet Matters surveys 1,000 children aged 9-17 and 2,000 parents of children aged 3-17 in the UK. For this report, the survey asked about children's various experiences on the internet, including their relationship with chat AI.

According to the survey results, about 64% of children answered that they 'use chat AI regularly.' Of those users, 35% answered that talking to chat AI 'feels like talking to a friend,' and 12% answered, 'I talk to AI because I have no one else to talk to.'

A 13-year-old boy who responded to the survey said, 'For me, it's not a game. Because sometimes I feel like the AI is a real person, a friend.' Other survey respondents said things like, 'It's useful when you want to talk to someone but there's no one to talk to,' and 'It helps me communicate with friends and family.'

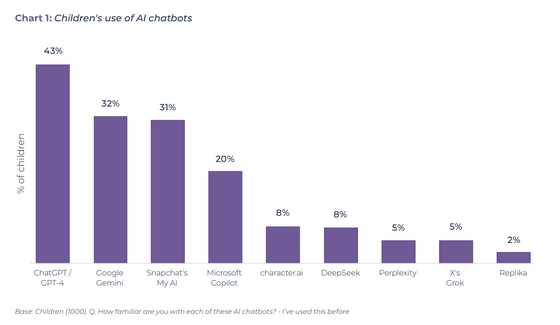

The graph below shows which chat AI services the children who answered the survey use. The most used is OpenAI's ChatGPT (43%), followed by Google's Gemini (32%), Snapchat's My AI (31%), Microsoft Copilot (20%), Character.ai (8%), DeepSeek (8%), Perplexity (5%), Grok (5%), and Replika (2%).

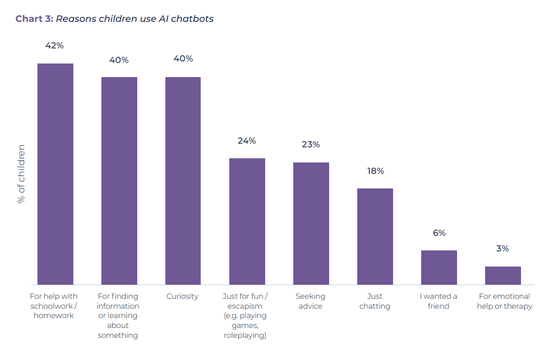

Below is a graph summarizing the reasons why children use chat AI. The most common reason is 'to get help with homework' (42%), followed by 'to find information or learn something' (40%), 'out of curiosity' (40%), 'just for fun or escape' (24%), 'to ask for advice' (23%), 'to have a conversation' (18%), 'to have a friend' (6%), and 'to seek mental health support or therapy' (3%).

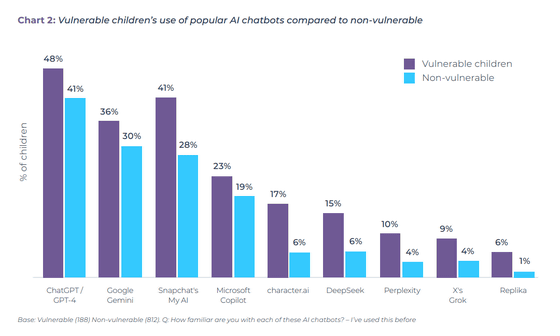

Below is a graph comparing the use of chat AI between vulnerable children (purple) and non-vulnerable children (light blue). Vulnerable children are defined as 'children with physical or mental health conditions that require specialized support.' Of vulnerable children, 71% use chat AI, compared with 62% of non-vulnerable children. In addition, vulnerable children tend to prefer companion-style chat AI with clear personalities, such as Character.ai and Replika.

Additionally, the study found that chat AI usage increases with age: 53% of children ages 9-11 use chat AI, while 67% of children ages 15-17 use chat AI.

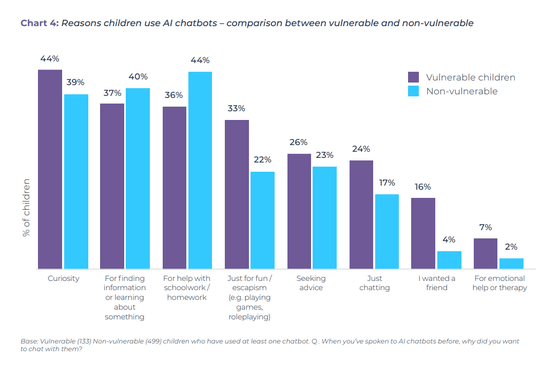

Comparing the reasons for using chat AI between vulnerable children (purple) and non-vulnerable children (light blue), non-vulnerable children are more likely to use chat AI for 'homework help' (44%) or 'to find information or learn something' (40%), while vulnerable children are more likely to use chat AI out of 'curiosity' (44%).

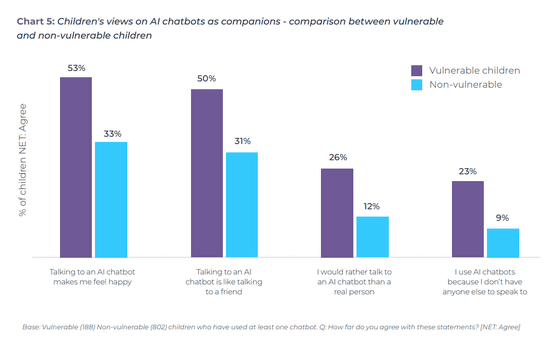

Below is a graph comparing children's opinions of chat AI between vulnerable children (purple) and non-vulnerable children (light blue). 53% of vulnerable children and 33% of non-vulnerable children answered that 'talking to an AI chatbot makes me feel happy.' 50% of vulnerable children and 31% of non-vulnerable children answered that 'talking to an AI chatbot is like talking to a friend.' 26% of vulnerable children and 12% of non-vulnerable children answered that 'I would rather talk to an AI chatbot than a real person.' 23% of vulnerable children and 9% of non-vulnerable children answered that 'I use an AI chatbot because I have no one else to talk to.'

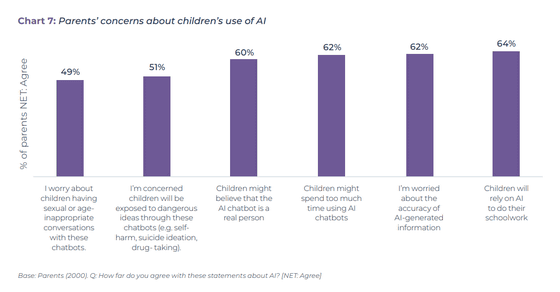

The following is a graph summarizing the most common concerns parents have about their children's use of chat AI. 49% are worried that their children will have sexual or age-inappropriate conversations with chat AI, 51% are worried that their children will be exposed to dangerous thoughts (self-harm, suicidal thoughts, drug use, etc.) through chat AI, 60% are worried that their children may believe chat AI is a real person, 62% are worried that their children spend too much time using chat AI, 62% are worried about the accuracy of the information generated by AI, and 64% are worried that their children will rely on AI for their schoolwork.

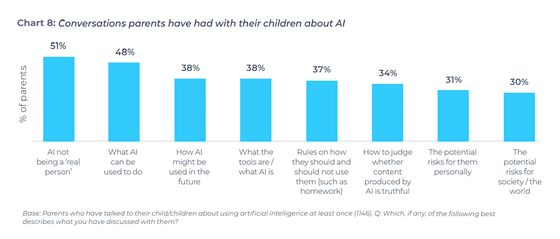

Parents were also asked which topics they had discussed with their children about AI. The most common were 'AI is not a real person' (51%), 'What can AI do?' (48%), 'How will AI be used in the future?' (38%), 'What is AI?' (38%), 'How should or shouldn't AI be used?' (37%), 'How to determine whether content created by AI is true?' (34%), 'Potential risks to individuals' (31%), and 'Potential risks to society and the world' (30%).

In addition, 58% of children said they would rather ask a chat AI a question than search on Google. This result raises concerns about how much children rely on chat AI, which does not always return reliable answers.

The Internet Matters investigation also found that chat AIs such as My AI and ChatGPT sometimes returned explicit language or content inappropriate for children, and that in some cases their filtering systems did not work properly.
