AMD unveils its own reasoning model 'Instella-Math'

AMD has announced 'Instella-Math,' a language model trained exclusively on AMD GPUs. It has 3 billion parameters and is specialized for reasoning and mathematical problem solving.

Introducing Instella-Math: Fully Open Language Model with Reasoning Capability — ROCm Blogs

Instella-Math was trained on 32 AMD Instinct MI300X GPUs. According to AMD, it is the first language model trained with long chain-of-thought reinforcement learning exclusively on AMD GPUs.

Instella-Math is a fully open reasoning language model: its architecture, training code, weights, and datasets are all publicly available.

AMD applied a two-stage supervised fine-tuning (SFT) process to gradually improve the reasoning capabilities of the Instella-3B-Instruct model. The first stage broadened the model's coverage of mathematical skills, and the second stage taught it to generate the reasoning steps needed to tackle Mathematical Olympiad-level problems.

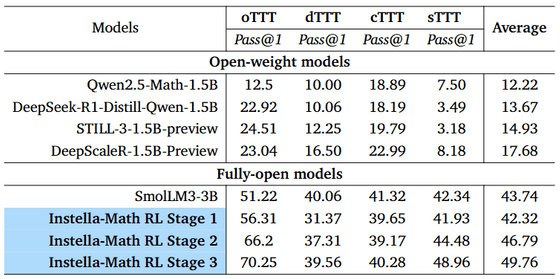

As a result of this training, Instella-Math performed comparably to open-weight models such as DeepSeek-R1-Distill-Qwen-1.5B, Still-3-1.5B, DeepScaleR-1.5B, and SmolLM3-3B. AMD stated, 'Not only did this achieve competitive average performance across all benchmarks, it also demonstrated the effectiveness of our training methodology.'

AMD said, 'While many competing models release only their weights and keep the base model training and reasoning distillation process private, Instella-Math is a fully open language model, and the base model, reasoning SFT, and training data for the reinforcement learning stage are all open. To our knowledge, Instella-Math is the first fully open mathematical reasoning model trained on AMD's GPUs. As part of AMD's commitment to open innovation, we share model weights, training settings, code bases, and datasets to promote collaboration, transparency, and progress in the AI community.'

The models and related data are available on Hugging Face.

amd/Instella-3B-Instruct · Hugging Face

https://huggingface.co/amd/Instella-3B-Instruct
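For readers who want to try the linked checkpoint, the following is a minimal sketch of loading it with the Hugging Face `transformers` library. It targets the `amd/Instella-3B-Instruct` repository linked above, not Instella-Math itself, whose repository id is not given in this article. The helper names `build_messages` and `generate_answer` are hypothetical, and the `trust_remote_code=True` setting is an assumption that the repository ships custom model code.

```python
MODEL_ID = "amd/Instella-3B-Instruct"  # repository id taken from the link above


def build_messages(problem: str) -> list[dict[str, str]]:
    """Wrap a math problem in the role/content message format used by chat templates."""
    return [{"role": "user", "content": problem}]


def generate_answer(problem: str, max_new_tokens: int = 512) -> str:
    """Hypothetical helper: download the checkpoint and generate a reply."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: the repository requires custom model code to load.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, trust_remote_code=True)

    # Render the chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_messages(problem),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, skipping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_answer("What is the sum of the first 10 positive integers?")` would download several gigabytes of weights on first use, so it is best run on a machine with a GPU or ample memory.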

in Software, Posted by log1p_kr