DeepSeek-R1 developers open-source their in-house technologies one after another, enabling faster AI training and inference

DeepSeek, a China-based AI development company, attracted wide attention in January 2025 when it announced 'DeepSeek-R1', a low-cost, high-performance AI model. The company is now open-sourcing technologies related to AI development at a rate of one per day.

🚀 Day 0: Warming up for #OpenSourceWeek !

— DeepSeek (@deepseek_ai) February 21, 2025

We're a tiny team @deepseek_ai exploring AGI. Starting next week, we'll be open-sourcing 5 repos, sharing our small but sincere progress with full transparency.

These humble building blocks in our online service have been documented,…

On February 21, 2025, DeepSeek declared that it would open-source a series of technologies, stating, 'We are a small team working to realize AGI (artificial general intelligence). Starting next week, we will open-source five repositories,' and 'As part of the open-source community, we believe that every piece of code we share will accelerate our journey (toward the realization of AGI).' The first repository was released on February 24, 2025, and one repository has been released each day since then.

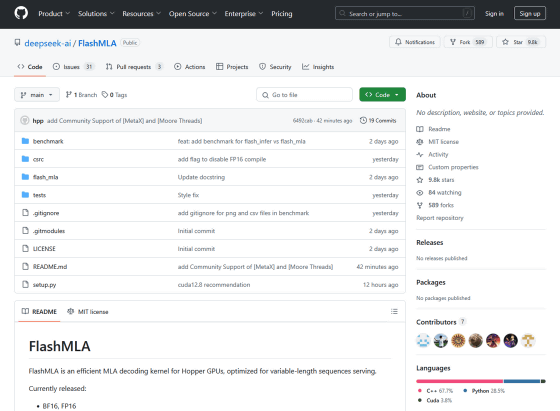

◆Day 1: FlashMLA

The first project in the series, open-sourced on Monday, February 24, 2025, is 'FlashMLA'. FlashMLA is a decoding kernel for MLA (Multi-head Latent Attention) developed for GPUs based on NVIDIA's Hopper architecture, and it speeds up the processing of variable-length sequences.

🚀 Day 1 of #OpenSourceWeek : FlashMLA

— DeepSeek (@deepseek_ai) February 24, 2025

Honored to share FlashMLA - our efficient MLA decoding kernel for Hopper GPUs, optimized for variable-length sequences and now in production.

✅ BF16 support

✅ Paged KV cache (block size 64)

⚡ 3000 GB/s memory-bound & 580 TFLOPS…

The FlashMLA repository is available at the following link:

GitHub - deepseek-ai/FlashMLA

https://github.com/deepseek-ai/FlashMLA
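
The 'paged KV cache (block size 64)' in the announcement refers to a general technique for handling variable-length sequences: instead of reserving one contiguous buffer per sequence, the cache is divided into fixed-size blocks that are handed out on demand. The following is a minimal Python sketch of that bookkeeping only. The class and method names are hypothetical, it is not FlashMLA's API, and it stores no actual key/value tensors.

```python
from typing import Dict, List, Tuple

BLOCK_SIZE = 64  # block size quoted in the announcement


class PagedKVCache:
    """Toy block table: each sequence owns a list of fixed-size physical blocks."""

    def __init__(self, num_blocks: int, block_size: int = BLOCK_SIZE) -> None:
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))  # unused block ids
        self.block_tables: Dict[int, List[int]] = {}  # sequence id -> block ids
        self.seq_lens: Dict[int, int] = {}  # sequence id -> tokens stored

    def append_token(self, seq_id: int) -> Tuple[int, int]:
        """Reserve a slot for one new token; return (physical block, offset)."""
        length = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:
            # The last block is full (or the sequence is new): take a fresh block.
            if not self.free_blocks:
                raise MemoryError("no free KV blocks left")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1
        return table[length // self.block_size], length % self.block_size

    def release(self, seq_id: int) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=8)
    for _ in range(65):  # a 65-token sequence spills into a second block
        cache.append_token(seq_id=0)
    for _ in range(3):  # a 3-token sequence needs only one block
        cache.append_token(seq_id=1)
    print(cache.block_tables)
```

In this sketch a 65-token sequence occupies two blocks and a 3-token sequence occupies one, so short and long sequences share the same pool without padding every sequence to the longest length.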

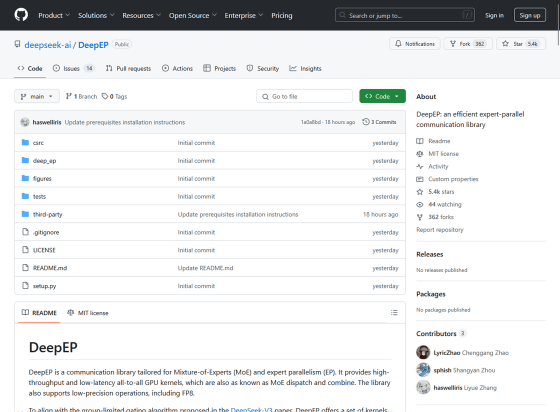

◆Day 2: DeepEP

On Tuesday, February 25, 2025, DeepSeek released 'DeepEP', a communication library that speeds up the training and inference of Mixture of Experts (MoE) models. MoE is an architecture that contains multiple 'expert' submodels, routes each input to some of them, and combines the outputs of those submodels into the final result. DeepEP is said to speed up the communication between these submodels.

🚀 Day 2 of #OpenSourceWeek : DeepEP

— DeepSeek (@deepseek_ai) February 25, 2025

Excited to introduce DeepEP - the first open-source EP communication library for MoE model training and inference.

✅ Efficient and optimized all-to-all communication

✅ Both intranode and internode support with NVLink and RDMA

✅…

For more information on DeepEP, see the link below.

GitHub - deepseek-ai/DeepEP: DeepEP: an efficient expert-parallel communication library

https://github.com/deepseek-ai/DeepEP
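
To illustrate where communication arises in an MoE layer, here is a minimal single-process Python sketch of top-k routing. Each token is dispatched to its highest-scoring experts, and the expert outputs are then combined with the gate weights. When experts live on different GPUs, the dispatch and combine steps become all-to-all exchanges, which is the part the announcement says DeepEP optimizes. The function names, the scalar 'tokens', and the top-k softmax gating are assumptions made for illustration. This is not DeepEP's API.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one token's gate scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    tokens: Sequence[float],
    gate_scores: Sequence[Sequence[float]],
    experts: Sequence[Callable[[float], float]],
    top_k: int = 2,
) -> Tuple[List[float], List[List[Tuple[int, float]]]]:
    """Route each token to its top_k experts and mix their outputs."""
    num_experts = len(experts)

    # Dispatch: build one "inbox" per expert holding (token index, gate weight).
    inbox: List[List[Tuple[int, float]]] = [[] for _ in range(num_experts)]
    for t, scores in enumerate(gate_scores):
        probs = softmax(scores)
        chosen = sorted(range(num_experts), key=lambda e: probs[e], reverse=True)[:top_k]
        norm = sum(probs[e] for e in chosen)  # renormalize over the chosen experts
        for e in chosen:
            inbox[e].append((t, probs[e] / norm))

    # Expert compute and combine: each expert only sees the tokens routed to it,
    # and its weighted output is added back at the token's original position.
    outputs = [0.0] * len(tokens)
    for e, items in enumerate(inbox):
        for t, weight in items:
            outputs[t] += weight * experts[e](tokens[t])
    return outputs, inbox


if __name__ == "__main__":
    toy_experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x]
    outputs, inbox = moe_forward(
        tokens=[1.0, 2.0],
        gate_scores=[[5.0, 0.0, -5.0], [0.0, 0.0, 9.0]],
        experts=toy_experts,
        top_k=2,
    )
    print(outputs)
    print(inbox)
```

The `inbox` lists show which tokens each expert receives. In an expert-parallel setup, their contents are what would have to travel between devices.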

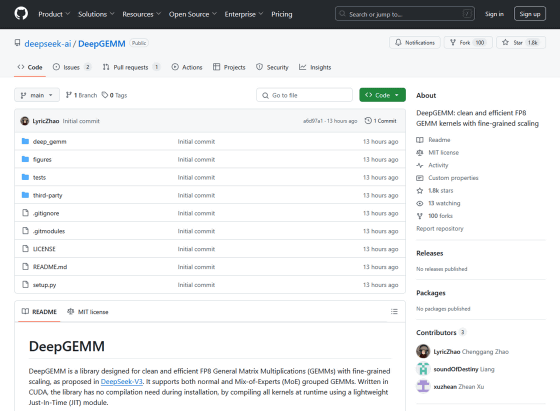

◆Day 3: DeepGEMM

AI workloads frequently perform matrix multiplication, commonly referred to as GEMM (general matrix multiplication). 'DeepGEMM', which was open-sourced on Wednesday, February 26, 2025, is a library that speeds up GEMM.

🚀 Day 3 of #OpenSourceWeek : DeepGEMM

— DeepSeek (@deepseek_ai) February 26, 2025

Introducing DeepGEMM - an FP8 GEMM library that supports both dense and MoE GEMMs, powering V3/R1 training and inference.

⚡ Up to 1350+ FP8 TFLOPS on Hopper GPUs

✅ No heavy dependencies, as clean as a tutorial

✅ Fully Just-In-Time compiled…

The source code for DeepGEMM is available at the following link.

GitHub - deepseek-ai/DeepGEMM: DeepGEMM: clean and efficient FP8 GEMM kernels with fine-grained scaling

https://github.com/deepseek-ai/DeepGEMM
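
As a rough illustration of what 'GEMM with fine-grained scaling' can mean, the Python sketch below compares a reference matrix multiplication with a low-precision version in which each small chunk of values gets its own scale factor. It rests on several assumptions: integer rounding to 127 levels stands in for FP8, the chunk size is arbitrary, and all function names are hypothetical. It does not reproduce DeepGEMM's kernels or API.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def gemm(a: Matrix, b: Matrix) -> Matrix:
    """Reference GEMM: C[i][j] = sum over p of A[i][p] * B[p][j]."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)] for i in range(m)]


def quantize_chunk(values: Sequence[float], levels: int = 127) -> Tuple[List[int], float]:
    """Map a chunk to small integers plus one shared scale (stand-in for FP8)."""
    peak = max(abs(v) for v in values)
    scale = peak / levels if peak > 0 else 1.0
    return [round(v / scale) for v in values], scale


def scaled_gemm(a: Matrix, b: Matrix, block: int = 4) -> Matrix:
    """Low-precision GEMM where every chunk of `block` values has its own scale."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for start in range(0, k, block):
        stop = min(start + block, k)
        # Quantize this slice of every row of A and every column of B.
        a_q = [quantize_chunk(row[start:stop]) for row in a]
        b_q = [quantize_chunk([b[p][j] for p in range(start, stop)]) for j in range(n)]
        for i in range(m):
            a_ints, a_scale = a_q[i]
            for j in range(n):
                b_ints, b_scale = b_q[j]
                partial = sum(x * y for x, y in zip(a_ints, b_ints))  # integer dot product
                c[i][j] += partial * a_scale * b_scale  # rescale, accumulate in float
    return c


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    print(gemm(a, b))
    print(scaled_gemm(a, b))
```

Because each chunk carries its own scale, one unusually large value only degrades the precision of its own chunk rather than the whole matrix, which is the general motivation for scaling at a fine granularity.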

Continued

DeepSeek announces open-source '3FS', a file system that accelerates AI - GIGAZINE

in Software, Posted by log1o_hf