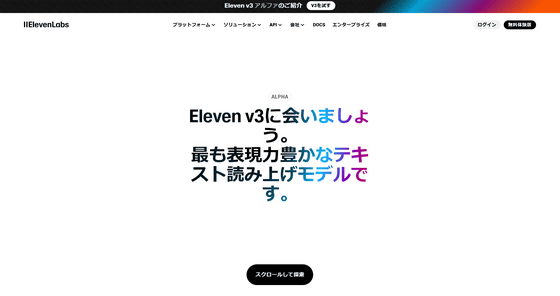

We had 'Eleven v3', a text-to-speech model that now supports Japanese, read GIGAZINE articles aloud, and this is how it sounded

Eleven v3 (alpha), the alpha version of the text-to-speech model 'Eleven' developed by AI company ElevenLabs, was released on June 6, 2025. Eleven v3 (alpha) adds the long-awaited ability to read Japanese aloud, along with 'emotion' and 'dialogue' capabilities that were not available in previous models.

Eleven Labs announces 'Eleven v3 (alpha)', a TTS model with unprecedented expressiveness | Press release from Eleven Labs Japan LLC

The main improvements in Eleven v3 are as follows. A public API for Eleven v3 (alpha) is due to be released soon.

Supports over 70 languages: Language support has grown from 33 languages to over 70, raising coverage of the world's population from 60% to 90%. This supports content production for a global audience, not only in Japanese and English.

Dialogue mode: Switches naturally between speakers during a conversation.

Voice tag support: Tags added to the text can specify a tone of voice, such as 'whisper,' 'laugh,' or 'sarcastic tone,' or a sound effect, such as 'crowd cheering' or 'door creaking,' to make the reading more lifelike.

Wide range of emotions: Mood and pace can change within a single sentence.

Available to all users: Open to a wide range of creators and businesses.

Streaming support (coming soon): Planned for call centers and real-time conversational agents.
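To make the 'dialogue mode' and 'voice tag' items above more concrete, here is a minimal sketch of how a tagged, multi-speaker script could be assembled as plain text before being pasted into the input field. The `Line` class, the `render_line` and `render_script` helpers, the square-bracket tag notation, and the `speaker:` prefix are all assumptions made for illustration; the article does not specify the exact syntax Eleven v3 expects, so check ElevenLabs' own documentation before relying on it.

```python
from dataclasses import dataclass, field


@dataclass
class Line:
    """One utterance in a dialogue script (hypothetical helper type)."""
    speaker: str
    text: str
    tags: list[str] = field(default_factory=list)  # e.g. ["whisper"]


def render_line(line: Line) -> str:
    """Prefix the text with its tags.

    The "[tag] " bracket notation is an assumption for this sketch,
    not a format confirmed by the article.
    """
    prefix = "".join(f"[{tag}] " for tag in line.tags)
    return f"{line.speaker}: {prefix}{line.text}"


def render_script(lines: list[Line]) -> str:
    """Join all utterances into one block of text, one per line."""
    return "\n".join(render_line(line) for line in lines)


# Tag names are taken from the examples quoted in the feature list.
script = [
    Line("A", "Did you hear the news?", ["whisper"]),
    Line("B", "Of course I did.", ["laugh", "sarcastic tone"]),
    Line("A", "They actually won!", ["crowd cheering"]),
]

print(render_script(script))
```

Keeping the tags as data rather than typing them inline makes it easy to try the same text with different tones and compare the results.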

You can try Eleven v3 (alpha) for free from the link below.

Eleven v3 (alpha) — The most expressive text-to-speech model

https://elevenlabs.io/en/v3

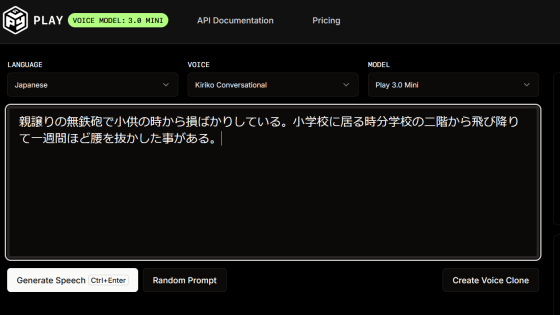

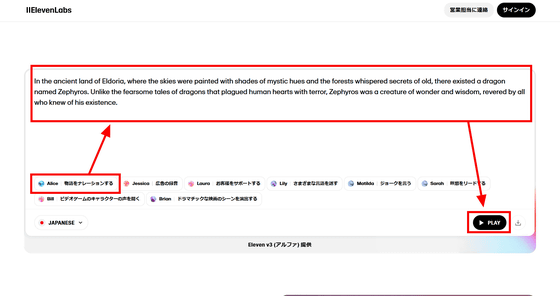

If you scroll down the page above, you will find an input field where you can try out Eleven v3. Select a voice, enter the text you want read aloud, and click 'PLAY' to hear the generated audio. Note that after several free trial generations, you will need to register as an ElevenLabs user.

This time, we entered a part of

I had the Japanese-compatible text-to-speech model 'Eleven v3 (alpha)' read out an article by GIGAZINE - YouTube

Free users can select a voice model specialized for Japanese called 'Ishibashi - Strong Japanese Male Voice'. Read with this voice, the text sounds more natural than with 'Alice'. It gives the impression of an ordinary man, rather than a voice actor or narrator, reading aloud, and almost nothing sounds off in the intonation or tempo.

I had a Japanese-specialized voice model of 'Eleven v3 (alpha)' read out an article by GIGAZINE - YouTube

According to ElevenLabs, Eleven v3 not only reads Japanese accurately but can also infer emotions from the text and express a wide range of them. When you specify 'Kansai dialect,' it automatically converts the speech to a natural Kansai intonation. Furthermore, if you enter something like 'football broadcast style,' Eleven v3 can read in a realistic voice with cheers in the background.

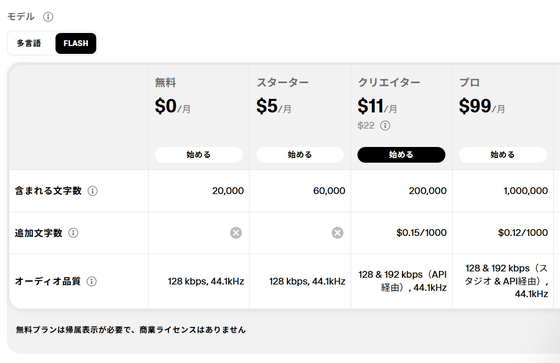

ElevenLabs' free plan allows you to generate up to 20,000 characters per month, with a maximum of 2,500 characters per generation. However, commercial use is not permitted and attribution is required. Paid plans include the Starter plan for $5 (approx. 720 yen) per month, the Creator plan for $22 (approx. 3,000 yen) per month, and the Pro plan for $99 (approx. 14,000 yen) per month.
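Because a full article is usually longer than one generation allows, the free-plan limits above imply some planning. The following sketch splits a text into pieces of at most 2,500 characters and checks the total against the 20,000-character monthly allowance. The function names are hypothetical, and it assumes that characters are counted the way Python's `len()` counts them; the article does not say how ElevenLabs counts characters, so treat the numbers as an estimate.

```python
FREE_MONTHLY_CHARS = 20_000        # free-plan monthly limit quoted above
MAX_CHARS_PER_GENERATION = 2_500   # per-generation limit quoted above

# Sentence-ending marks used as preferred break points (Japanese and English).
SENTENCE_ENDERS = "。！？.!?"


def split_for_generation(text: str, limit: int = MAX_CHARS_PER_GENERATION) -> list[str]:
    """Split text into chunks of at most `limit` characters.

    Each chunk ends just after the last sentence-ending mark that fits;
    if no such mark is found within the limit, the text is cut at `limit`.
    """
    chunks = []
    rest = text
    while len(rest) > limit:
        window = rest[:limit]
        # rfind returns -1 when a mark is absent, so cut becomes 0 if none match.
        cut = max(window.rfind(mark) for mark in SENTENCE_ENDERS) + 1
        if cut <= 0:
            cut = limit  # hard cut: no sentence boundary in this window
        chunks.append(rest[:cut])
        rest = rest[cut:]
    if rest:
        chunks.append(rest)
    return chunks


def fits_free_plan(text: str, already_used: int = 0) -> bool:
    """Check whether the text fits in what remains of the monthly allowance."""
    return already_used + len(text) <= FREE_MONTHLY_CHARS


# Placeholder text standing in for an article body.
article = "これはテストです。" * 700
chunks = split_for_generation(article)
print(len(chunks), [len(chunk) for chunk in chunks])
print(fits_free_plan(article))
```

Breaking at sentence boundaries rather than at a fixed offset avoids cutting a sentence in half, which would otherwise produce an unnatural pause or intonation at the seam between two generated clips.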


in Review, Software, Web Service, Video, Posted by log1i_yk