Mistral AI Announces 'Devstral,' a Small, High-Performance Agent-Based Coding AI That Runs Locally

French AI startup Mistral AI and fellow AI startup All Hands AI have jointly released Devstral, an agent-based open language model built for software development. It is lightweight enough to run locally on a PC and is said to rival GPT-4.1-mini and Claude 3.5 Haiku in performance.

Devstral | Mistral AI

Devstral: A new state-of-the-art open model for coding agents

https://www.all-hands.dev/blog/devstral-a-new-state-of-the-art-open-model-for-coding-agents

mistralai/Devstral-Small-2505 · Hugging Face

https://huggingface.co/mistralai/Devstral-Small-2505

Devstral is an agent-based large language model (LLM) built on Mistral Small 3.1. Conventional LLMs are good at basic coding tasks such as writing standalone functions and completing code, but Devstral is designed specifically to solve real-world software engineering problems.

According to Mistral AI, Devstral can handle the advanced tasks that real-world development demands, such as understanding code in the context of a large codebase, recognizing relationships between components, and spotting subtle bugs in complex functions.

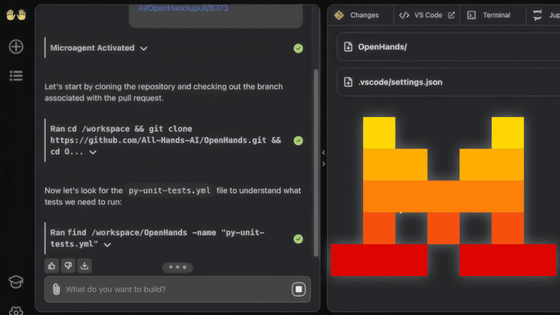

A defining feature of Devstral is its compact size: at only 23.6 billion parameters, it is light enough to run on a PC with an RTX 4090 or a Mac with 32GB of RAM, making it suitable for local deployment and on-device use. Paired with a coding platform such as All Hands AI's OpenHands, it can work directly with a local codebase and resolve issues quickly.
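The hardware claim can be sanity-checked with simple arithmetic. The sketch below estimates only the memory taken by the weights of a 23.6-billion-parameter model at a few nominal precisions. It ignores the KV cache, activations, and runtime overhead, and the bit widths are illustrative assumptions rather than the formats Mistral AI actually distributes.

```python
# Rough weight-memory estimate for a 23.6-billion-parameter model.
# Assumption: weights only; KV cache, activations, and runtime overhead are ignored.

PARAMS = 23.6e9  # parameter count quoted for Devstral

# Nominal bits per weight for a few storage formats (illustrative; real
# quantized files carry some extra metadata on top of this).
FORMATS = {
    "bf16": 16,
    "8-bit": 8,
    "4-bit": 4,
}


def weight_gb(params: float, bits: int) -> float:
    """Return the weight footprint in gigabytes (10**9 bytes)."""
    return params * bits / 8 / 1e9


for name, bits in FORMATS.items():
    print(f"{name:>6}: {weight_gb(PARAMS, bits):5.1f} GB")
```

At 16 bits the weights alone come to roughly 47 GB, more than the 24 GB on an RTX 4090, so running Devstral on that card presumably relies on a quantized build; at 4 bits the weights shrink to about 12 GB.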

All Hands AI has published the video below, which walks through the steps for running Devstral on OpenHands.

OpenHands + Devstral = A Fully Local Coding Agent - YouTube

Because Devstral can run locally on relatively affordable hardware, companies can process code entirely within their own infrastructure, eliminating the risk of leaking it to outside services. 'For companies that need to meet stringent compliance requirements, Devstral is an ideal solution,' Mistral AI said.
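As an illustration of what processing code "within their own infrastructure" can look like, here is a minimal sketch that sends a prompt to a model served on the same machine. It assumes an Ollama server running at its default address (`http://localhost:11434`) with the model pulled under the tag `devstral`; both the tag and this setup are assumptions, not details from the announcement. Only the Python standard library is used.

```python
import json
import urllib.request

# Assumptions: a local Ollama server at its default address, with the model
# available under the tag "devstral".
OLLAMA_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "devstral"


def build_local_request(prompt: str) -> urllib.request.Request:
    """Build a non-streaming chat request aimed at the local Ollama server."""
    payload = {
        "model": MODEL_TAG,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON reply instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str) -> str:
    """Send the prompt to localhost and return the model's reply text."""
    with urllib.request.urlopen(build_local_request(prompt), timeout=300) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

Calling `ask_local_model(...)` requires the local server to be running, while `build_local_request` can be inspected without one. Because the only network hop is to `localhost`, the prompt and any code pasted into it stay on the machine.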

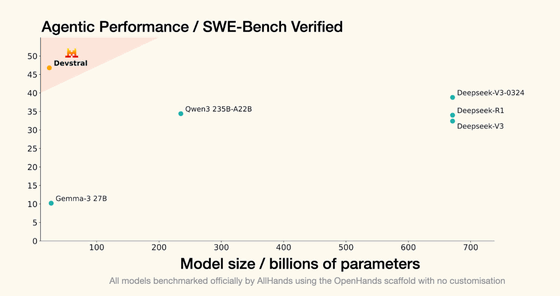

Devstral was trained to resolve real GitHub issues and was evaluated on SWE-Bench Verified, a set of 500 GitHub issues that have been manually screened for correctness. According to Mistral AI, Devstral scored 46.8% on SWE-Bench Verified, beating the previous best open-source model by more than 6 percentage points.

Running comparisons on the same scaffold, All Hands AI's OpenHands, Mistral AI found that Devstral outperformed DeepSeek-V3-0324 (671 billion parameters) and Qwen3-235B-A22B (235 billion parameters), both far larger models.

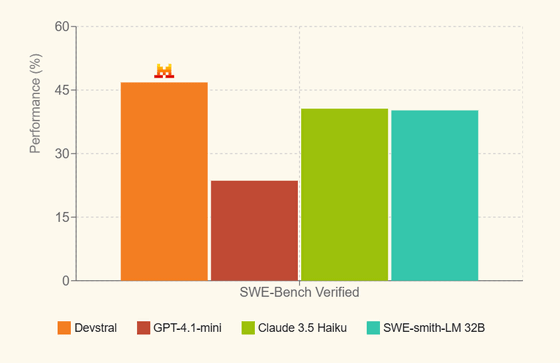

In a broader comparison that also included closed-source models, Devstral outperformed OpenAI's GPT-4.1-mini by more than 20 percentage points and also came out ahead of Claude 3.5 Haiku and SWE-smith-LM 32B, which scored around 40%. Because this comparison used a variety of evaluation setups, including custom scaffolds optimized for each model, Mistral AI emphasized that Devstral delivers consistently strong performance across different conditions.
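To make the benchmark figures concrete, the short calculation below converts Devstral's 46.8% into a count of resolved issues out of 500 and, reading the stated margins as percentage points, derives the score each rival must fall below. It uses only numbers quoted in this article and says nothing about any rival's exact score.

```python
# Back-of-the-envelope checks on the SWE-Bench Verified figures quoted above.
# Inputs: the 500-issue set, Devstral's 46.8% score, and the stated margins
# (read as percentage points).

TOTAL_ISSUES = 500
DEVSTRAL_SCORE = 46.8  # percent


def resolved_issues(score_pct: float, total: int = TOTAL_ISSUES) -> int:
    """Number of issues that a percentage score corresponds to."""
    return round(score_pct / 100 * total)


def score_ceiling(score_pct: float, margin_pts: float) -> float:
    """A rival led by more than margin_pts must have scored below this value."""
    return round(score_pct - margin_pts, 1)


print(resolved_issues(DEVSTRAL_SCORE))    # 234
print(score_ceiling(DEVSTRAL_SCORE, 6))   # 40.8 (previous best open model)
print(score_ceiling(DEVSTRAL_SCORE, 20))  # 26.8 (GPT-4.1-mini)
```

So 46.8% means roughly 234 of the 500 issues were resolved, the previous open-source leader scored below 40.8%, and GPT-4.1-mini scored below 26.8%.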

Devstral is an open model, freely available under the Apache 2.0 license, and can be downloaded from Hugging Face, Ollama, Kaggle, and Unsloth. It is also offered through the Mistral AI platform's API as 'devstral-small-2505' on a pay-as-you-go basis, at $0.1 (approximately 14 yen) per million input tokens and $0.3 (approximately 43 yen) per million output tokens. For enterprises, Mistral AI additionally provides advanced services such as fine-tuning and pre-training, with pricing negotiated individually.
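The per-token pricing translates directly into a cost formula. The sketch below estimates the charge for a given workload and builds, without sending, a chat request for the hosted model. The endpoint URL, payload shape, and Bearer-token header are assumptions based on Mistral AI's public chat-completions API; check the official documentation before relying on them.

```python
import json
import urllib.request

# Pay-as-you-go rates quoted for devstral-small-2505, in US dollars per million tokens.
INPUT_USD_PER_MILLION = 0.1
OUTPUT_USD_PER_MILLION = 0.3

# Assumption: Mistral AI's chat-completions endpoint.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "devstral-small-2505"  # model name given in the announcement


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated charge in US dollars for the given token counts."""
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


def build_api_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for the hosted Devstral model."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

For example, a workload of 2 million input tokens and 500,000 output tokens would come to about $0.35 at the quoted rates. Actually sending the request (for instance with `urllib.request.urlopen`) requires a valid API key.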


in Software, Web Service, Video, Posted by log1i_yk