Three bugs caused intermittent degradation in the response quality of the AI 'Claude'

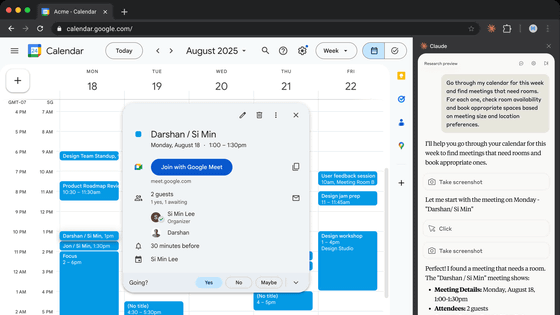

Between early August and early September 2025, Anthropic received multiple reports of a decline in the response quality of its AI, Claude. Anthropic investigated the issue and discovered that three infrastructure bugs were the cause.

A postmortem of three recent issues \ Anthropic

The first bug was a routing error, in which requests were sent to a server configured for the wrong context window size. On August 5, 2025, some requests for the 'Sonnet 4' model, which at the time handled a context window of up to 200,000 tokens, were mistakenly routed to servers configured for a context window of 1 million tokens. At this stage, 0.8% of Sonnet 4 requests were affected and could receive degraded responses.

On August 29th, a routine load balancing change unintentionally increased the number of short-context requests routed to servers configured for 1 million tokens. At the peak of the problem on August 31st, 16% of Sonnet 4 requests were affected. Approximately 30% of users who made requests during this period are believed to have received degraded responses because at least one of their messages was routed to the wrong server.

Additionally, routing was 'sticky': once a request was processed by the wrong server, subsequent requests from the same user were likely to be processed by that same server, so the same user could run into the issue repeatedly.

Anthropic fixed its routing logic to ensure that short- and long-context requests are routed to the correct server pools. The fix was deployed on September 4th.
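The routing behavior described above can be illustrated with a minimal sketch. This is not Anthropic's actual routing code: the pool names, the `Router` class, and the session-keyed cache are all hypothetical, and the 200,000-token threshold is taken from the figure quoted in this article. The sketch shows why a sticky cache keeps sending a user to the wrong pool, and how re-checking the required pool on every request avoids that.

```python
from dataclasses import dataclass, field

# Assumption: 200,000 tokens is the standard context limit quoted in the article.
STANDARD_CONTEXT_LIMIT = 200_000


@dataclass
class Router:
    """Hypothetical router that remembers which pool served each session."""

    sticky: dict = field(default_factory=dict)

    def pool_for(self, context_tokens: int) -> str:
        # Choose the pool purely from the size of the request context.
        if context_tokens <= STANDARD_CONTEXT_LIMIT:
            return "standard-pool"
        return "long-context-pool"

    def route(self, session_id: str, context_tokens: int) -> str:
        required = self.pool_for(context_tokens)
        # Keep the sticky assignment only while it matches the required pool;
        # otherwise overwrite it so a past misroute cannot repeat.
        if self.sticky.get(session_id) != required:
            self.sticky[session_id] = required
        return self.sticky[session_id]


router = Router()
# Simulate a session that was previously misrouted to the long-context pool.
router.sticky["session-a"] = "long-context-pool"
print(router.route("session-a", 1_000))    # short request goes back to the standard pool
print(router.route("session-b", 500_000))  # long request goes to the long-context pool
```

The design choice here is that stickiness is treated as a cache hint rather than a binding decision, so a single bad assignment cannot follow a user across later requests.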

The second bug was caused by an incorrect configuration deployed to the TPU servers for the Claude API on August 25. The misconfiguration caused errors during token generation, so that responses to English prompts sometimes contained Thai or Chinese characters, or other characters that did not belong in the context.

This issue affected requests made for Opus 4.1 and Opus 4 between August 25th and 28th, and for Sonnet 4 between August 25th and September 2nd.

Anthropic identified the issue on September 2nd, rolled back the change, and added a detection test to catch unexpected characters in the output.
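As a rough idea of what a check for unexpected characters might look like, here is a minimal sketch. It is not Anthropic's detection test: the function name and the list of scripts to flag are assumptions, and it relies only on Unicode character names from Python's standard `unicodedata` module.

```python
import unicodedata

# Assumption: for an English-only prompt, Thai and CJK characters are unexpected.
UNEXPECTED_SCRIPT_PREFIXES = ("THAI", "CJK")


def unexpected_characters(text: str) -> list:
    """Return the characters in `text` whose Unicode name starts with a flagged script."""
    found = []
    for ch in text:
        # unicodedata.name returns the default "" for characters without a name.
        name = unicodedata.name(ch, "")
        if name.startswith(UNEXPECTED_SCRIPT_PREFIXES):
            found.append(ch)
    return found


print(unexpected_characters("The capital of France is Paris."))
print(unexpected_characters("The capital of France is \u0e2a\u4e2d Paris."))
```

A real check would need to allow for prompts that legitimately call for other languages, so a filter like this would only make sense as one signal among several.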

The third issue occurred when code deployed on August 25 to improve token selection during text generation inadvertently triggered a latent bug in the machine learning compiler 'XLA'. As a result, Claude sometimes excluded the most probable token when generating text.
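To make the symptom concrete, the sketch below compares an ordinary top-k candidate selection with a deliberately broken variant that drops the highest-scoring token. It only imitates the effect described above, not the compiler bug itself; all function names are hypothetical. It also includes the kind of invariant check (the candidate set must contain the argmax) that can distinguish the two behaviors.

```python
def top_k_candidates(logits: list, k: int) -> list:
    """Return the indices of the k highest-scoring tokens, best first."""
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    return ranked[:k]


def top_k_missing_best(logits: list, k: int) -> list:
    """Deliberately broken variant: skips the best token (imitates the symptom only)."""
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    return ranked[1:k + 1]


def contains_argmax(candidates: list, logits: list) -> bool:
    """Invariant: a correct top-k candidate set always includes the most probable token."""
    best = max(range(len(logits)), key=lambda i: logits[i])
    return best in candidates


logits = [2.0, 0.5, 3.0, 1.0]  # token 2 has the highest score
good = top_k_candidates(logits, 2)
bad = top_k_missing_best(logits, 2)
print(good, contains_argmax(good, logits))
print(bad, contains_argmax(bad, logits))
```

Because sampling then proceeds from a candidate set without the best token, the output can remain fluent while being subtly worse, which fits the kind of quality decline users reported.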

The bug is confirmed to have affected Claude Haiku 3.5, and may also have affected some Sonnet 4 and Opus 3 requests on the Claude API.

Anthropic confirmed the impact on Haiku 3.5 and rolled back the change for that model on September 4. It then received reports of issues in Opus 3 that matched the bug, and rolled back the change for Opus 3 on September 12. Anthropic was unable to reproduce the issue in Sonnet 4, but decided to roll back the change there as well out of caution.

Anthropic points out that one reason these bugs were difficult to detect was the company's privacy practices, which limit how much engineers can see of user interactions with Claude, particularly interactions that have not been reported as feedback.

Additionally, because Claude is served across multiple hardware platforms, the bugs did not appear uniformly: some users experienced issues while others did not, which made the reports confusing and the cause harder to pin down.

As a future measure, Anthropic reports that it has developed evaluation methods that can more accurately distinguish between normal and faulty behavior, as well as infrastructure and tools for efficient debugging without compromising user privacy.

Anthropic said, 'We know our users expect consistent quality from Claude, and we maintain extremely high standards to ensure that infrastructure changes do not impact model output. In this incident, we fell short of those standards. We appreciate our users continuing to provide us with direct feedback.'

in Software, Posted by log1p_kr