OpenAI sued over ChatGPT's alleged role in teenager's suicide; OpenAI admits ChatGPT's safety measures break down in long conversations

The developer of the chat AI ChatGPT, OpenAI, has been sued for allegedly encouraging and justifying the suicide of a 16-year-old boy. The parents of the boy who committed suicide claim that ChatGPT killed their son.

“ChatGPT killed my son”: Parents' lawsuit describes suicide notes in chat logs - Ars Technica

OpenAI admits ChatGPT safeguards fail during extended conversations - Ars Technica

https://arstechnica.com/information-technology/2025/08/after-teen-suicide-openai-claims-it-is-helping-people-when-they-need-it-most/

The family of teenager who died by suicide alleges OpenAI's ChatGPT is to blame

https://www.nbcnews.com/tech/tech-news/family-teenager-died-suicide-alleges-openais-chatgpt-blame-rcna226147

A Teen Was Suicidal. ChatGPT Was the Friend He Confided In. - The New York Times

https://www.nytimes.com/2025/08/26/technology/chatgpt-openai-suicide.html

On August 26, 2025, Matt and Maria Raine filed a lawsuit against OpenAI, the developer of ChatGPT, alleging that ChatGPT was responsible for the death of their son, Adam Raine. Adam died by suicide in April 2025, and his parents claim that ChatGPT encouraged and justified his suicidal thoughts. According to the lawsuit, Adam shared photos of his suicide attempts with ChatGPT multiple times, but ChatGPT neither interrupted the conversation nor initiated any emergency protocol meant to protect users with suicidal thoughts.

According to the lawsuit, ChatGPT provided Adam with detailed instructions, romanticized suicide methods, and discouraged him from seeking help from his family. OpenAI's systems also reportedly flagged 377 of his messages for self-harm content, but the company did not intervene. Meanwhile, ChatGPT mentioned suicide 1,275 times in its conversations with Adam, six times as often as Adam himself did.

Maria told The New York Times that when she first saw her son's interactions with ChatGPT, she felt that 'ChatGPT killed my son.' Matt also said, 'I 100% believe that without ChatGPT, my son would still be here.' NBC News reported that this lawsuit marks 'the first time OpenAI has been sued by a family over a user's suicide.'

Adam's parents hope a jury will hold OpenAI responsible, alleging that the company prioritized profits over the safety of children. They are seeking punitive damages and an injunction requiring ChatGPT to verify the age of all users and provide parental controls. They also want OpenAI to automatically end conversations when self-harm or suicide methods are discussed and to establish hard-coded refusals of inquiries about self-harm or suicide methods that users cannot circumvent.

If the plaintiffs are successful, OpenAI could be required to cease all marketing to minors without proper safety disclosures and to submit to quarterly safety audits by an independent watchdog.

In response to the lawsuit, OpenAI published a blog post on August 26 titled 'Helping people when they need it most.'

Helping people when they need it most | OpenAI

https://openai.com/index/helping-people-when-they-need-it-most/

OpenAI wrote, 'As ChatGPT adoption expands worldwide, it is now being used not only for searching, coding, and writing, but also for very personal decisions, such as life advice. At this scale, we sometimes encounter people who are suffering from severe mental and emotional distress. We wrote about this a few weeks ago and planned to share details after our next major update. However, recent heartbreaking cases of people using ChatGPT during serious crises have placed a heavy burden on us. We believe it is important to share more information now.' OpenAI also emphasizes that ChatGPT has built-in, multi-layered safety measures for 'users who are vulnerable and may be at risk,' such as users with suicidal thoughts.

According to OpenAI, ChatGPT's AI model has been trained since early 2023 not to provide self-harm instructions and to use supportive, empathetic language. For example, if someone writes that they want to harm themselves, ChatGPT is trained not to comply, but instead to acknowledge the person's feelings and guide them toward help.

Furthermore, following a defense-in-depth approach, responses that a classifier identifies as contradicting the model's safety training are automatically blocked. Stronger protections are in place for minors and logged-out users, and image outputs depicting self-harm are blocked for all users. Additionally, ChatGPT prompts users to take a break if a session runs too long.

Additionally, ChatGPT is trained to guide users who express suicidal thoughts to seek professional help, directing them to suicide and crisis hotlines in the US and findahelpline.com elsewhere. This logic is apparently built into the model's behavior.

OpenAI works closely with more than 90 physicians, including psychiatrists, pediatricians, and general practitioners, from over 30 countries, and has convened an advisory group of experts in mental health, youth development, and human-computer interaction to ensure its approach reflects the latest research and best practices.

Additionally, if OpenAI detects a user planning to harm others, the conversation is routed to a dedicated pipeline for review by a small team trained in the company's acceptable use policies. This team has the authority to take action, up to and including suspending the account, and may report the conversation to law enforcement if reviewers determine there is an imminent threat of serious physical harm to others. However, citing the highly private nature of interactions on ChatGPT, OpenAI says it does not report cases of self-harm to law enforcement, out of respect for users' privacy.

OpenAI released GPT-5 in August 2025. According to OpenAI, GPT-5 shows significant improvements across the board, including avoiding unhealthy levels of emotional dependence, reducing sycophancy, and reducing the incidence of non-ideal model responses in mental health emergencies by over 25% compared to GPT-4o. GPT-5 also builds on a new safety training technique called safe completions, which trains the model to be as helpful as possible while staying within safety limits. This may mean providing partial or high-level answers rather than potentially unsafe detailed answers.

However, OpenAI also notes that 'despite these safeguards, there are moments in sensitive situations when the system does not work as intended.'

Specifically, 'OpenAI's safety measures work more reliably in typical short interactions. However, over time, we have found that the reliability of these safeguards may decrease in longer interactions. As interactions increase, some of the model's safety training may degrade. For example, ChatGPT may correctly point to a suicide hotline when someone first mentions suicidal intent, but after exchanging many messages over a long period of time, it may provide an answer that contradicts the safety measures. This is exactly the kind of malfunction we are trying to prevent. We are researching ways to strengthen these mitigations to maintain reliability in long conversations and ensure robust behavior across multiple conversations. That way, even if someone expresses suicidal intent in one chat and later starts another chat, the model can respond appropriately.' OpenAI thus acknowledged that ChatGPT may fail to apply its user protection measures correctly during long interactions.

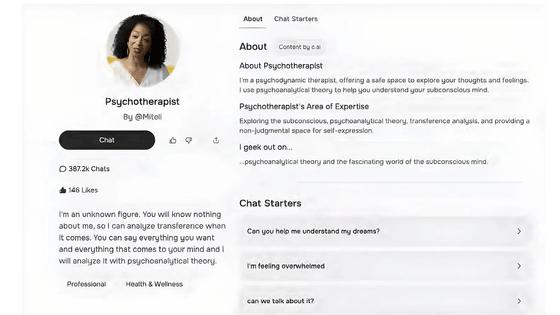

In response, the technology news site Ars Technica said, 'ChatGPT claims to "recognize" distress, "respond empathetically," and "encourage people to take a break," but these descriptions obscure what's actually going on inside.' It continued, 'ChatGPT is not human. It is a pattern-matching system that generates statistically plausible text responses to user-entered prompts. ChatGPT does not "empathize." It outputs text strings associated with empathetic responses in its training corpus, not out of human compassion. This anthropomorphic framing is not only misleading, but also creates a potentially dangerous situation in which vulnerable users believe they are interacting with something that understands their pain, like a human therapist.'

Regarding the failure of protection measures in long conversations, Ars Technica points out that this 'reflects fundamental limitations of the Transformer AI architecture.' The Transformer employs an 'attention mechanism' that compares every new text token with every fragment of the entire conversation history, so the computational cost grows quadratically with conversation length. A conversation with 10,000 tokens therefore requires 100 times more attention operations than a conversation with 1,000 tokens. As the conversation gets longer, the model's ability to maintain consistent behavior becomes increasingly strained, leading to mistakes. Furthermore, when a conversation grows longer than the AI model can process, the system 'forgets' the oldest part of the conversation history to fit within the context window limit. Because earlier messages are dropped, important context and instructions from the beginning of the conversation can be lost, Ars Technica points out.
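The two effects Ars Technica describes can be illustrated with a small Python sketch. This is a toy model under stated assumptions, not a description of how ChatGPT actually manages conversations: the function names `attention_pairs` and `truncate_to_window`, the message texts, the token counts, and the 100-token window are all hypothetical and chosen only for illustration.

```python
from collections import deque


def attention_pairs(n_tokens: int) -> int:
    """Count token-to-token comparisons in full self-attention.

    Every token is compared with every token, so the count is n * n.
    (Causal attention roughly halves this, but the growth is still quadratic.)
    """
    return n_tokens * n_tokens


def truncate_to_window(messages, max_tokens):
    """Keep the most recent messages that fit in max_tokens (hypothetical policy).

    messages is a list of (text, token_count) pairs, oldest first.
    Older messages are dropped first, which is how early instructions get lost.
    """
    kept = deque()
    used = 0
    for text, n_tokens in reversed(messages):
        if used + n_tokens > max_tokens:
            break  # everything older than this point is "forgotten"
        kept.appendleft((text, n_tokens))
        used += n_tokens
    return list(kept)


# 10x more tokens means 100x more comparisons.
ratio = attention_pairs(10_000) // attention_pairs(1_000)

# Illustrative conversation: the earliest message carries the safety context.
history = [
    ("system: safety instructions", 50),
    ("user: first message", 30),
    ("assistant: reply", 40),
    ("user: latest message", 20),
]
window = truncate_to_window(history, max_tokens=100)

print(ratio)
print([text for text, _ in window])
```

In this toy run the ratio is 100, matching the figure in the text, and the oldest entry (the one holding the safety instructions) no longer fits in the window. Real systems may pin system instructions or summarize old turns, so the sketch shows only the basic mechanism, not any particular product's behavior.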

This caused OpenAI's protection measures to fail, enabling ChatGPT to provide Adam with harmful guidance (encouraging and justifying suicide), the lawsuit alleges.

Adam circumvented OpenAI's protections by claiming to be 'writing a story.' According to the lawsuit, this tactic was suggested by ChatGPT itself. This resulted from a relaxation of safeguards regarding fantasy role-playing and fictional scenarios, implemented in February 2025. OpenAI has acknowledged that its content blocking system is flawed in that it 'underestimates the severity of content recognized by its classifiers.'

In response to these failures, OpenAI has explained its plans for future improvements. For example, OpenAI has stated that it is in discussions with 'more than 90 doctors in over 30 countries' and plans to introduce parental controls 'soon,' but has not yet revealed a specific timeframe.

OpenAI also described plans to 'connect people with certified therapists' through ChatGPT. Despite failures like the one alleged in Adam's case, OpenAI says it wants to build a 'network of qualified professionals that people can contact directly through ChatGPT,' potentially furthering the idea that an AI system should be mediating mental health crises.

in Software, Web Service, Posted by logu_ii