What is the liquid cooling system used in Google's machine learning data center?

Heat dissipation is a problem when running computers for long periods of time, and the typical approach is to cool chips using air or coolant. Google's gigantic data centers use large-scale liquid cooling systems, and the company gave a talk about its liquid cooling system at Hot Chips 2025.

Google's Liquid Cooling at Hot Chips 2025 - by Chester Lam

https://chipsandcheese.com/p/googles-liquid-cooling-at-hot-chips

In recent years, liquid cooling has become increasingly important in data centers because the latest chips consume more power and generate more heat. Data centers used for AI and machine learning in particular draw enormous amounts of power and require thorough cooling. According to Google, the thermal conductivity of water is about 4,000 times that of air, which makes liquid cooling an attractive way to meet the cooling demands of the AI boom.

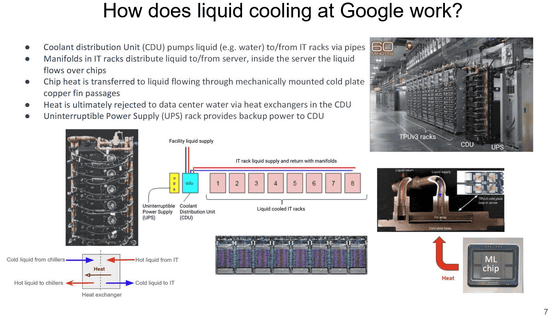

Google says that the liquid cooling system took shape in 2018 after several experiments and iterations. The current liquid cooling system is designed for data centers, with the coolant circulation pipes spread throughout the racks rather than being contained within the TPU servers. Furthermore, the entire cooling system, including the circulation of the coolant, is optimized using AI to maximize cooling efficiency.

Google's data centers use six Coolant Distribution Units (CDUs) in a single rack. The CDUs, which act as radiators and pumps, use flexible hoses and quick-disconnect couplings to facilitate maintenance and reduce tolerance requirements. Five of the six CDUs in a CDU rack are active, allowing maintenance on any one unit without downtime.
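The five-of-six arrangement is a form of N+1 redundancy. The following is a minimal sketch of that check, not Google's actual sizing method: the six installed CDUs come from the talk, while the per-CDU capacity and rack heat load are hypothetical values chosen only for illustration.

```python
import math

def cdus_required(heat_load_kw, cdu_capacity_kw):
    """Smallest number of CDUs whose combined capacity covers the heat load."""
    return math.ceil(heat_load_kw / cdu_capacity_kw)

def tolerates_one_outage(installed, heat_load_kw, cdu_capacity_kw):
    """True if the load is still covered with one CDU removed for maintenance."""
    return installed - 1 >= cdus_required(heat_load_kw, cdu_capacity_kw)

INSTALLED_CDUS = 6        # from the article
CDU_CAPACITY_KW = 200.0   # hypothetical, for illustration only
HEAT_LOAD_KW = 1000.0     # hypothetical, for illustration only

print("CDUs required:", cdus_required(HEAT_LOAD_KW, CDU_CAPACITY_KW))
print("Survives one CDU out:",
      tolerates_one_outage(INSTALLED_CDUS, HEAT_LOAD_KW, CDU_CAPACITY_KW))
```

With these assumed numbers, five units carry the load and the sixth is the spare, which matches the arrangement described above.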

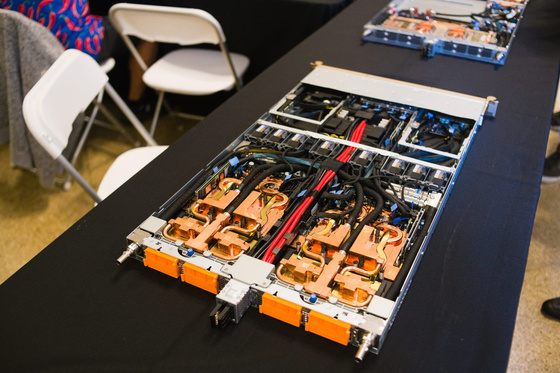

The coolant sent from the CDU is distributed to each server and flows through copper cooling plates attached to the chips inside the server. The coolant absorbs heat at these cooling plates and then passes through a heat exchanger in the CDU, where the heat is handed off to the facility-level coolant that carries it out of the data center. The CDU exchanges heat between the rack coolant and the facility-level coolant, but the two liquids do not mix.

Because the chips are connected in series within the loop, later chips are cooled by coolant that has already passed through other chips, meaning that the cooling efficiency of later chips is reduced. Therefore, the cooling system is designed based on the requirements of the last chip in each loop.
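To make the series effect concrete, here is a rough sketch based on the steady-state energy balance ΔT = P / (ṁ · c_p), where P is chip power, ṁ is coolant mass flow, and c_p is the specific heat of the coolant. The inlet temperature, chip power, and flow rate are illustrative assumptions, not figures from Google's talk, and the coolant is treated as plain water.

```python
# Illustrative only: the inputs used below are assumptions, not Google data.
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of water

def inlet_temps_in_series(inlet_c, chip_powers_w, flow_kg_per_s):
    """Return the coolant temperature arriving at each chip in a series loop."""
    temps = []
    coolant_c = inlet_c
    for power_w in chip_powers_w:
        temps.append(coolant_c)
        # Energy balance: assume all chip heat ends up in the coolant.
        coolant_c += power_w / (flow_kg_per_s * WATER_CP_J_PER_KG_K)
    return temps

temps = inlet_temps_in_series(
    inlet_c=30.0,                 # hypothetical loop inlet temperature
    chip_powers_w=[300.0] * 4,    # hypothetical: four chips at 300 W each
    flow_kg_per_s=0.02,           # hypothetical mass flow rate
)
for i, t in enumerate(temps):
    print(f"chip {i}: coolant arrives at {t:.1f} C")
```

Each chip adds the same temperature rise to the coolant, so the last chip always sees the warmest inlet. That is why the loop has to be sized around the last chip.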

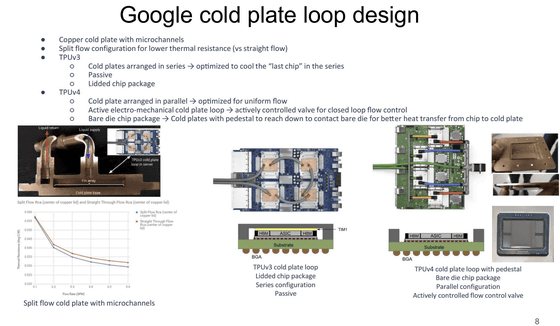

Google has also devised a new cooling plate design to further improve cooling performance. To reduce thermal resistance, the company has adopted a split-flow copper cooling plate, which offers better performance than the conventional unidirectional flow configuration. The TPU has also been improved with each generation.

In the third-generation TPU, the cooling plates were arranged in series and a lidded chip package was used. Meanwhile, the fourth-generation TPU, which consumes 1.6 times more power than the third-generation TPU, has been redesigned to have an exposed chip package for better heat transfer. Furthermore, the fourth-generation TPU also features a parallel arrangement of cooling plates to optimize uniform flow and actively controls the flow rate with an electromechanical valve.

Liquid cooling is effective not only at removing heat from the chip, but also at reducing cooling-related power requirements. According to Google, the liquid cooling pump consumes less than 5% of the power required by a fan in an air-cooled system.

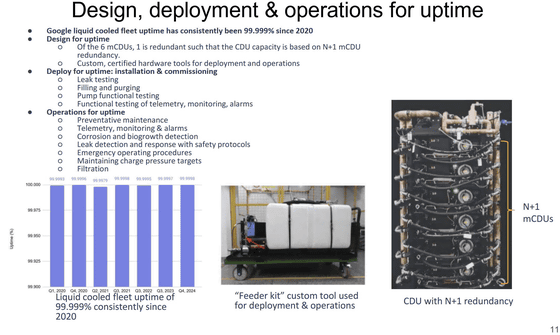

Reliability and maintainability are extremely important for such large-scale systems. Google's liquid cooling system has consistently maintained a high uptime of 99.999% since 2020. This is due to the fact that uptime is taken into consideration at each stage of design, implementation, and operation.
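For a sense of scale, the short calculation below converts an availability figure into the downtime it allows. It is plain arithmetic on the 99.999% figure and assumes a 365-day year; it says nothing about how Google actually measures uptime.

```python
def allowed_downtime_minutes(availability, period_days=365.0):
    """Minutes of downtime permitted over the period at a given availability."""
    total_minutes = period_days * 24 * 60
    return (1.0 - availability) * total_minutes

minutes = allowed_downtime_minutes(0.99999)
print(f"99.999% availability allows about {minutes:.2f} minutes of downtime per year")
```

In other words, "five nines" leaves a budget of roughly five minutes of downtime per year.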

The design includes six CDUs for redundancy, and rigorous procedures are followed upon installation, including leak testing, filling and purging of coolant, and functional testing of pumps and remote monitoring systems.

Operationally, a wide range of measures are in place, including preventative maintenance, remote monitoring systems and alarms, corrosion and biological growth detection, leak detection and response with safety protocols, emergency operating procedures, maintaining pressure targets, and filtration.

Google said it takes preventative measures such as extensive leak testing of components, alert systems, and regular maintenance, and has clear protocols in place so that a large number of employees can respond consistently when a problem occurs. The company emphasized that operation and maintainability must be kept in mind from the design stage of the cooling system.

in Hardware, Free Member, Posted by log1i_yk