I tried out the app 'Msty,' which allows you to split the screen and chat with multiple AIs at the same time. It supports both local and API chats, making it convenient for comparing AI output.

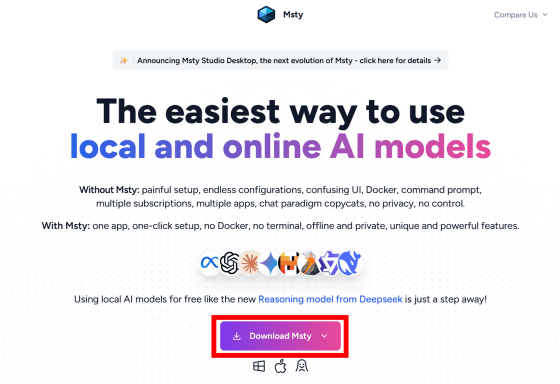

'Msty' is a free AI chat app that lets you download AI models and run them locally, or use models from OpenAI, Google, and other companies via API. You can also split the screen to chat with multiple AI models simultaneously and compare their outputs. It seemed very useful, so I gave it a try.

Msty - Using AI Models made Simple and Easy

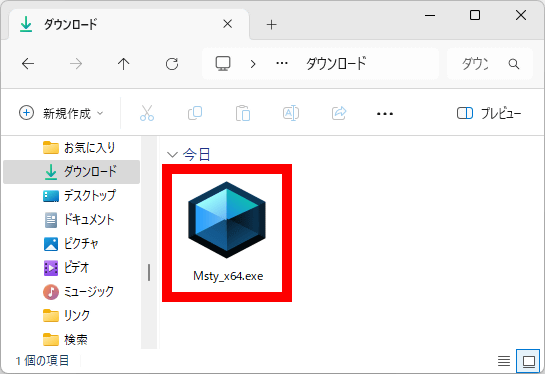

The Msty installer is available at the link above. Once you access the link, click 'Download Msty.'

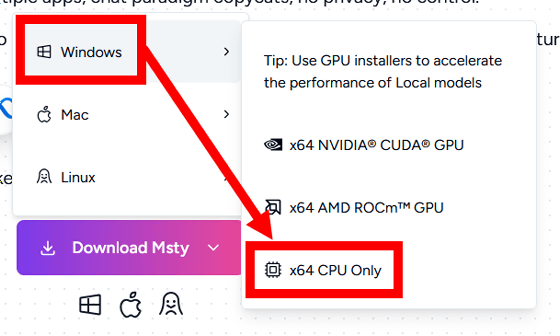

Next, select the version that matches your environment. In this example, I clicked on the Windows CPU version. If your machine is equipped with an NVIDIA or AMD GPU, simply click on the appropriate version.

Once the installer has finished downloading, double-click it to run it.

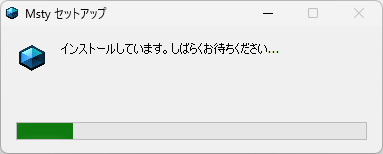

The installation will proceed automatically.

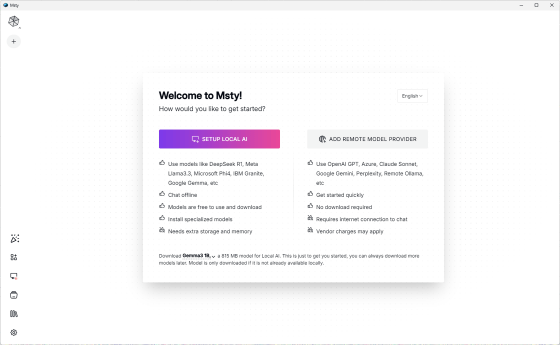

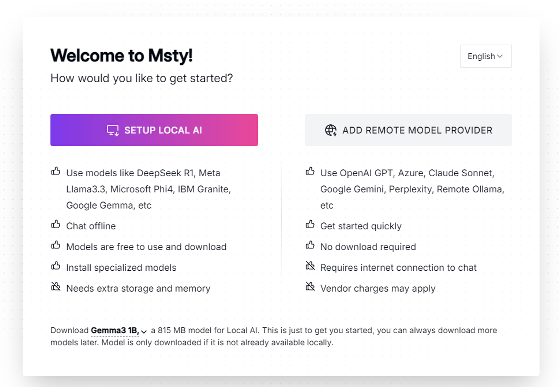

Once the installation is complete, Msty will start automatically.

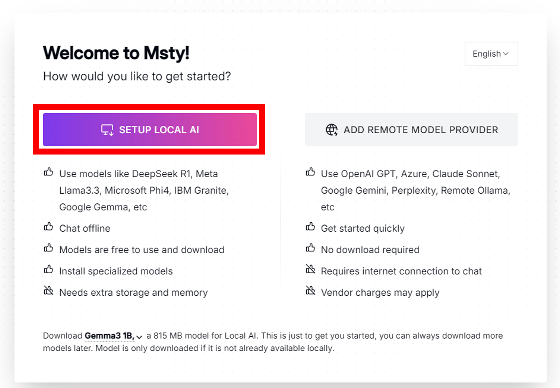

The first time you start the app, you'll be prompted to set up an AI model. Click 'SETUP LOCAL AI' on the left to download an AI model and run it locally. Click 'ADD REMOTE MODEL PROVIDER' on the right to use AI from providers like OpenAI and Google via API.

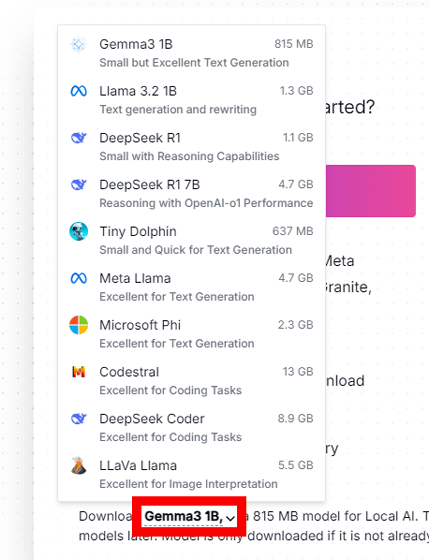

For local models, the default setting is to download 'Gemma3 1B.' Clicking on the 'Gemma3 1B' section at the bottom of the screen will allow you to select models such as 'Llama 3.2 1B' or 'DeepSeek-R1' instead.

This time, I left it as 'Gemma3 1B' and clicked 'SETUP LOCAL AI'.

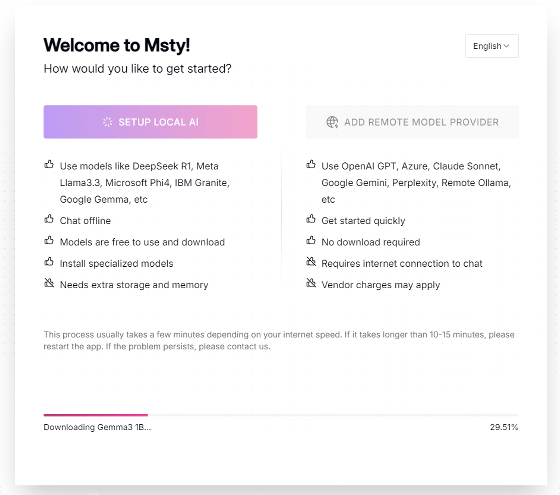

The model data will start downloading, so please wait a while.

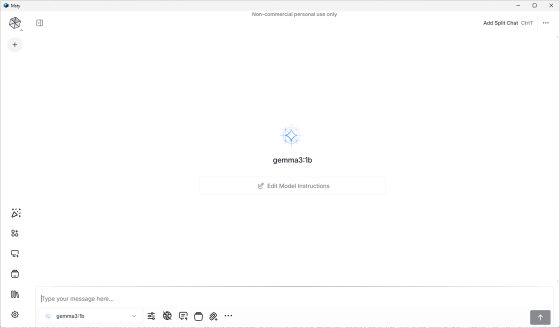

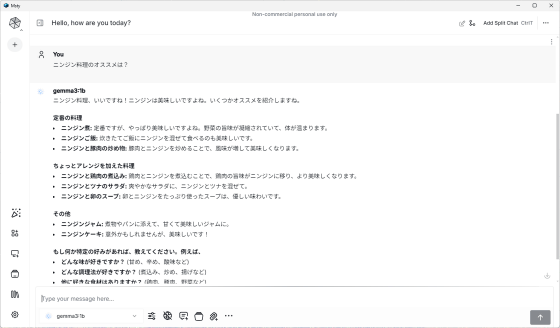

Once the download is complete, the chat screen will appear.

As with a typical AI chat app, you chat by entering text in the input field at the bottom of the screen. When I tried it, the replies appeared quickly.

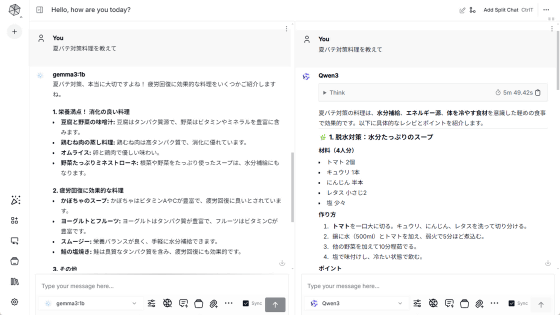

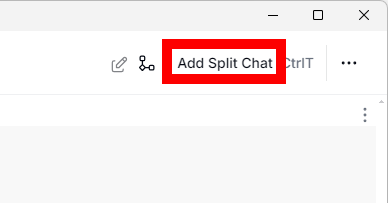

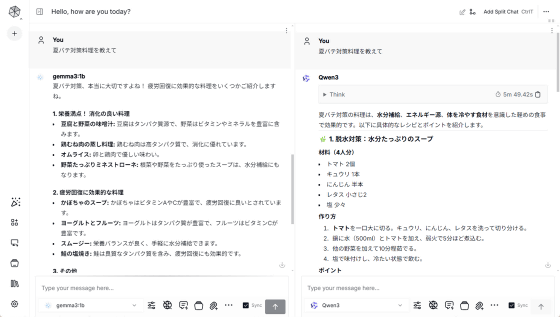

To use Msty's main feature, split-screen chat, click 'Add Split Chat' in the upper right corner of the screen.

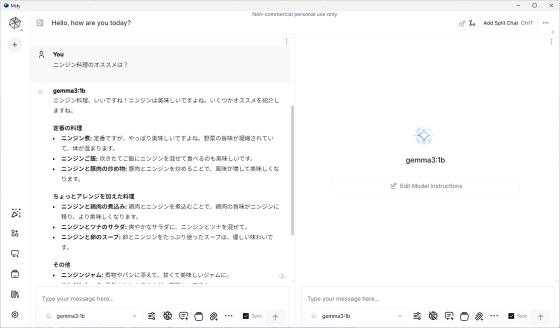

This will split the screen into left and right halves.

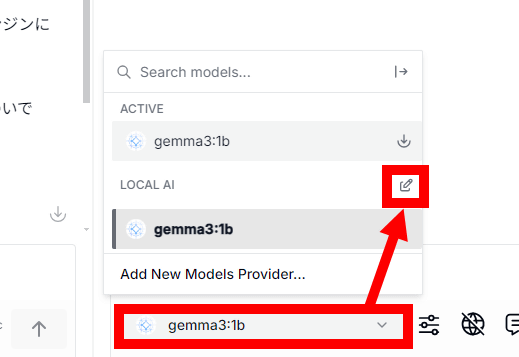

You can talk to the same AI model on both the left and right sides, but this time I'll add a new AI model. Click the model name at the bottom of the screen, then click the button to the right of 'LOCAL AI.'

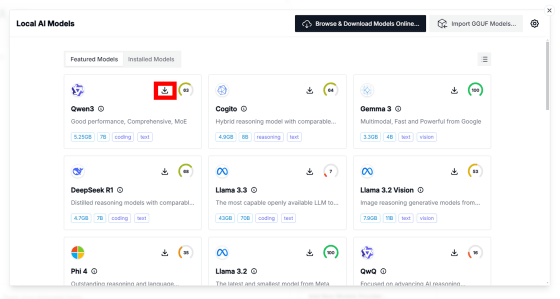

When the AI model list screen appears, click the download button for the model you want to use. In this example, I downloaded 'Qwen3.'

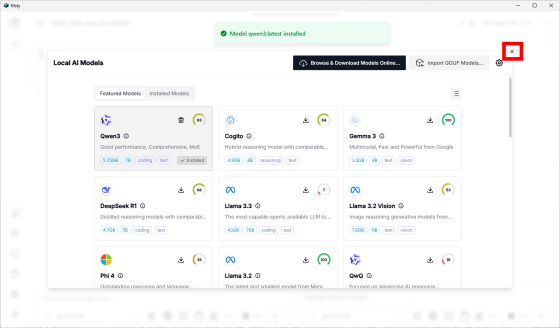

Once the download is complete, click the × button.

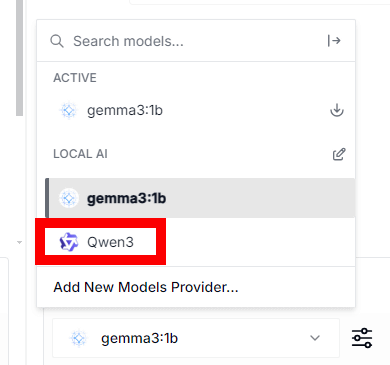

'Qwen3' has been added to the model selection screen, so click on it.

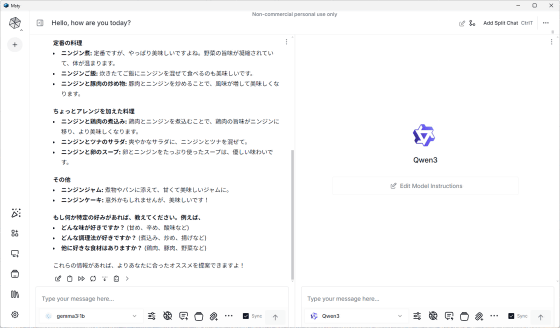

Now you can have a conversation with separate AI models on the left and right.

When you enter a prompt in either the left or right input field and submit it, both the left and right AI models will respond simultaneously.

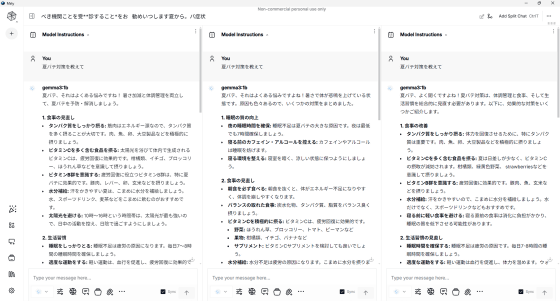
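To make the "one prompt, several models answering at once" idea concrete, here is a minimal Python sketch of that fan-out pattern. It is not Msty's implementation, which I have not seen. The functions `echo_model` and `shout_model` are hypothetical stand-ins for real models, and `fan_out` is a name I made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical stand-ins for real models: each takes a prompt, returns a reply.
def echo_model(prompt: str) -> str:
    return f"echo: {prompt}"


def shout_model(prompt: str) -> str:
    return prompt.upper()


def fan_out(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send one prompt to every model concurrently and collect replies by name."""
    # One worker per model so no model waits for another to finish.
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    replies = fan_out("hello", {"left": echo_model, "right": shout_model})
    for name, reply in replies.items():
        print(f"[{name}] {reply}")
```

Swapping the stand-in functions for calls to real local or remote models would give a rough command-line equivalent of the split view, with one entry in the dictionary per panel.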

Each click on 'Add Split Chat' adds another split. This means you can use it to 'display the output of multiple AIs simultaneously to get diverse opinions' or 'compare the performance of multiple AIs.'

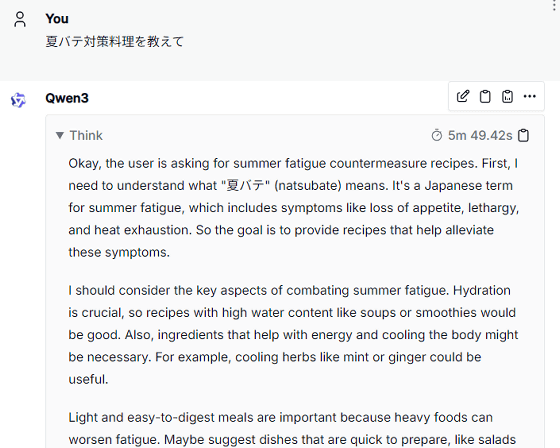

For reasoning models such as Qwen3, you can also view the model's reasoning process.

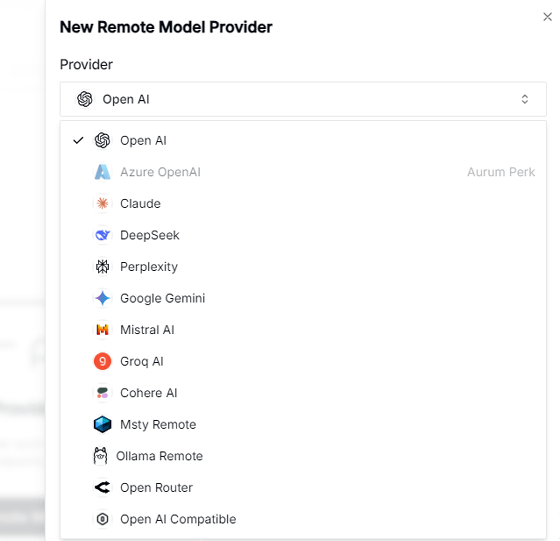

You can also register API providers such as OpenAI, Anthropic, DeepSeek, Perplexity, and Google, and chat with their models.

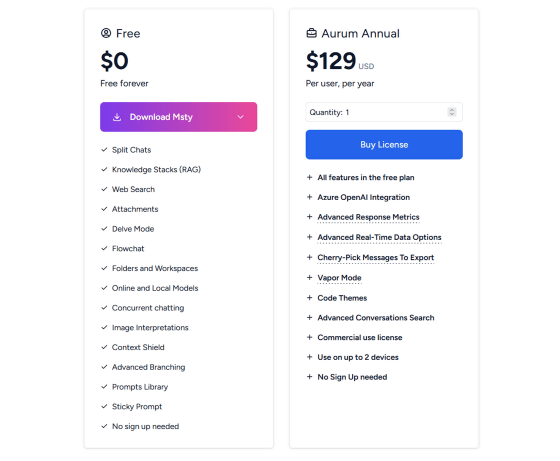
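For readers unfamiliar with what "via API" means here, the sketch below builds (but does not send) a chat request in the shape of OpenAI's public Chat Completions endpoint. How Msty talks to providers internally is not something this article confirms. The helper `build_chat_request`, the key `sk-test`, and the model name `example-model` are placeholders of my own.

```python
import json
from urllib.request import Request

# Public OpenAI Chat Completions endpoint.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> Request:
    """Build a POST request carrying a single user message (nothing is sent)."""
    body = json.dumps(
        {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
        }
    ).encode("utf-8")
    return Request(
        OPENAI_CHAT_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder key and model name; replace with real values before sending.
    request = build_chat_request("sk-test", "example-model", "Hello!")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

An app like Msty handles this step for you once a provider is registered, which is presumably why the setup only asks for provider details rather than any code.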

Msty's basic features are free. A subscription plan is also available for $129 (approximately 19,000 yen) per year, which adds features such as 'the ability to check API usage details' and 'the ability to use real-time information as a source.'