Research shows that ChatGPT was confused by President Trump's speeches, as it had difficulty interpreting emotive metaphors

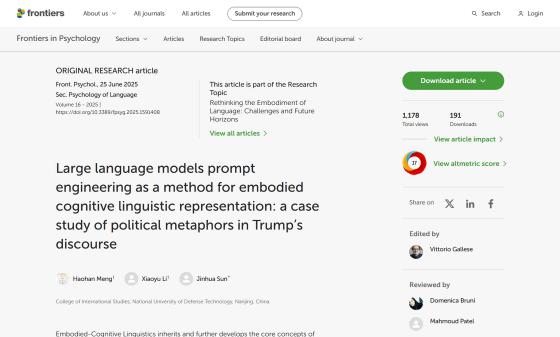

A study that used ChatGPT-4 (GPT-4) to analyze speeches by U.S. President Donald Trump found that large language models like GPT-4 have difficulty interpreting the emotive metaphors he frequently uses.

Frontiers | Large language models prompt engineering as a method for embodied cognitive linguistic representation: a case study of political metaphors in Trump's discourse

https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2025.1591408/full

Trump's speeches stump AI: Study reveals ChatGPT's struggle with metaphors

https://www.psypost.org/trumps-speeches-stump-ai-study-reveals-chatgpts-struggle-with-metaphors/

Large language models are trained to interpret and generate natural language using text from books, websites, and other sources. As a result, models like GPT-4 can write essays, summarize long documents, answer questions, and converse with humans.

However, large language models do not understand words the way humans do; they use pattern recognition to predict 'which word is likely to come next in a sentence.' While this approach often works well, the models can also misinterpret meaning, especially when the wording is abstract or emotional.
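The idea of 'predicting which word is likely to come next' can be illustrated with a deliberately tiny sketch. This is not how GPT-4 works internally (it is a neural network trained on vast amounts of text); the bigram counter, the function names, and the toy corpus below are all hypothetical stand-ins chosen only to show prediction from observed patterns.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words were seen immediately after it."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Toy corpus invented for illustration only.
toy_corpus = "the wall is tall the wall is strong the border is open"
model = train_bigrams(toy_corpus)

if __name__ == "__main__":
    print(predict_next(model, "the"))
    print(predict_next(model, "wall"))
```

A predictor like this only reproduces patterns it has already seen. It has no notion of what a 'wall' is, which is a crude analogue of the limitation the article describes.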

A research team from China's National University of Defense Technology, a People's Liberation Army institution, used a GPT-4 framework to analyze four speeches delivered by President Trump from mid-2024 to early 2025. The four speeches used in the study were the 'Republican nomination acceptance speech after the assassination attempt,' the 'victory speech in the presidential election,' the 'presidential inauguration speech,' and the 'speech to a joint session of Congress.'

Trump's speeches are characterized by heavy use of emotional, ideologically driven language and by metaphors that frame political issues in ways that resonate with his supporters. The research team had GPT-4 carry out a method called critical metaphor analysis, a step-by-step process of 'understanding the context of the speech,' 'identifying potential metaphors,' 'classifying metaphors by theme,' and 'explaining the emotional and ideological impact of the metaphors.'
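As a rough illustration of how such a step-by-step process might be turned into a prompt, here is a minimal sketch. The step wording paraphrases the four stages quoted above; the function name `build_cma_prompt` and the prompt layout are assumptions made for illustration and are not the prompt the researchers actually used.

```python
# Hypothetical sketch: the steps paraphrase the article, not the study's real prompt.
CMA_STEPS = [
    "Understand the context of the speech.",
    "Identify potential metaphors.",
    "Classify the metaphors by theme.",
    "Explain the emotional and ideological impact of the metaphors.",
]


def build_cma_prompt(speech_excerpt: str) -> str:
    """Assemble one prompt asking a model to work through the steps in order."""
    numbered = "\n".join(
        f"{i}. {step}" for i, step in enumerate(CMA_STEPS, start=1)
    )
    return (
        "Analyze the speech excerpt below step by step.\n"
        f"{numbered}\n\n"
        f"Excerpt:\n{speech_excerpt.strip()}"
    )


if __name__ == "__main__":
    print(build_cma_prompt("Washington DC, which is a horrible killing field"))
```

The resulting string would then be sent to whichever model is being evaluated; that call is left out here because it depends on the provider's API.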

In the analysis, GPT-4 correctly identified metaphorical expressions in 119 of the 138 sample sentences, an accuracy of about 86%. A closer look at the breakdown, however, showed several recurring problems in GPT-4's reasoning.
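The 'about 86%' figure follows directly from the two counts reported above. A short check, where the function name is simply an illustrative choice:

```python
def identification_accuracy(correct: int, total: int) -> float:
    """Share of sample sentences in which metaphors were identified correctly."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct / total


# Counts reported in the article: 119 correct out of 138 sample sentences.
accuracy = identification_accuracy(119, 138)
errors = 138 - 119

if __name__ == "__main__":
    print(f"accuracy: {accuracy:.1%}")        # accuracy: 86.2%
    print(f"misidentified sentences: {errors}")  # misidentified sentences: 19
```

In other words, 19 of the 138 sentences, or roughly one in seven, were handled incorrectly.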

One of the most common mistakes was confusing metaphors with similes, which make a comparison explicit with wording such as 'it's like ____.' For example, the study treats the phrase 'Washington DC, which is a horrible killing field' as a simile that likens Washington DC to a 'killing field,' but GPT-4 interpreted it as a metaphor.

In another case, GPT-4 judged the phrase 'a series of bold promises' to be figurative and classified it as a spatial metaphor, even though it is not. It also misidentified certain proper names and technical terms as metaphors, for example treating the Israeli missile defense system 'Iron Dome' as one.

The study also examined GPT-4's ability to classify the metaphors it identified into categories such as 'force,' 'movement and direction,' 'health and disease,' and 'human body.' While GPT-4 correctly classified metaphors in commonly used categories such as 'force' and 'movement and direction,' its classification accuracy was quite low for metaphors in less common categories such as 'plants,' 'cooking,' and 'food.'

Taken together, the results suggest that while GPT-4 is good at capturing surface patterns, it lacks the ability to grasp meaning in context. Large language models like GPT-4 cannot draw on real-life experience, cultural knowledge, or emotional nuance to understand language, so they may fail to understand text that is rich in political metaphors, such as President Trump's speeches.

The researchers conclude that while large language models show promise for analyzing metaphors, they are still a long way from replacing human expertise.

PsyPost, a psychology news outlet, said, 'Large language models are prone to misinterpretations, overinterpretations, and a lack of nuance, making them well-suited to aid researchers but not for fully automated analysis. Political metaphors in particular remain difficult for these systems to understand, as they often rely on shared cultural symbols, deep emotional resonance, and implicit ideological frameworks.'

in Web Service, Science, Free Member, Posted by log1h_ik