The lecture 'Software is Changing Again' has caused a huge stir overseas. What was the shocking content?

Andrej Karpathy: Software Is Changing (Again) - YouTube

◆The concept of 'Software 3.0'

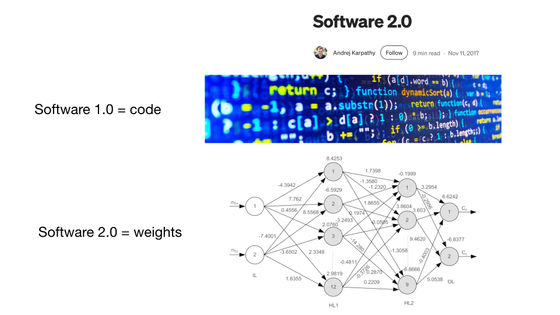

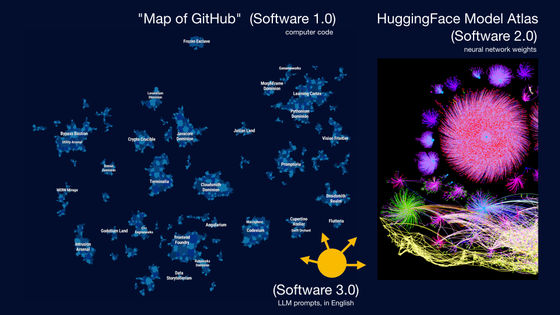

Karpathy believes that software has recently undergone two rapid transformations. First came Software 1.0, the conventional hand-written code that most of us think of as software.

While Software 1.0 was code you wrote for computers, Software 2.0 is essentially neural networks, specifically the 'weights.' Rather than writing code directly, developers tweak a dataset and run optimization algorithms to generate the parameters for this neural network.

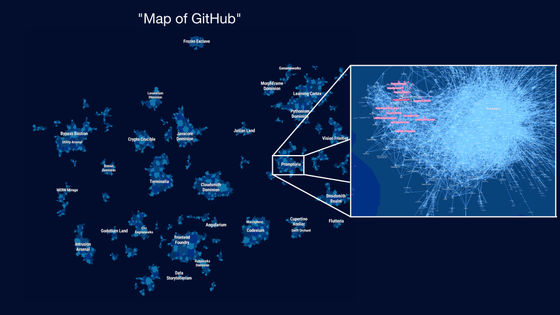

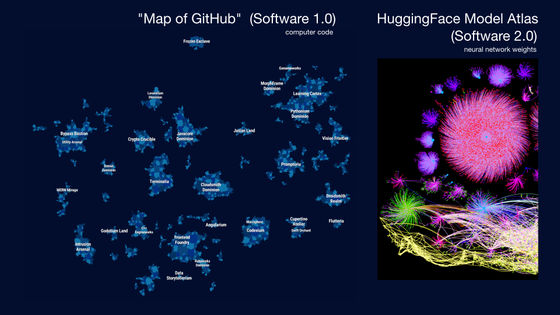

The projects on GitHub, which represents Software 1.0, can be represented as a '

Software 2.0 is similarly represented by

As generative AI becomes more sophisticated, even the tuning of neural networks can be done with the help of AI, and the instructions can be given in natural language rather than in a specialized programming language. Karpathy calls the state in which large language models (LLMs) can be programmed in natural language, especially English, 'Software 3.0.'

To summarise, programming a computer with code is Software 1.0, programming a neural network with weights is Software 2.0, and programming an LLM with natural language prompts is Software 3.0.
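The three paradigms can be compared on one small task. The following is a minimal sketch, not code from the lecture: the sentiment-classification task, the word lists, the toy dataset, and every function name are assumptions chosen for illustration. The Software 3.0 part only builds the English prompt; sending it to an actual LLM is left out.

```python
import math

# Software 1.0: the behaviour is written by hand as explicit rules.
POSITIVE_WORDS = {"great", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "hate", "terrible"}

def classify_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    return "positive" if score >= 0 else "negative"

# Software 2.0: the behaviour lives in weights fitted to a dataset.
# Toy dataset (hypothetical): 1 = positive, 0 = negative.
DATASET = [
    ("i love this great phone", 1),
    ("excellent battery and great screen", 1),
    ("i hate this terrible phone", 0),
    ("bad battery and terrible screen", 0),
]

def train_v2(dataset, epochs: int = 200, lr: float = 0.5) -> dict:
    """Fit one weight per word with logistic regression and plain SGD."""
    weights: dict = {}
    for _ in range(epochs):
        for text, label in dataset:
            words = text.split()
            z = sum(weights.get(w, 0.0) for w in words)
            pred = 1.0 / (1.0 + math.exp(-z))
            for w in words:
                weights[w] = weights.get(w, 0.0) + lr * (label - pred)
    return weights

def classify_v2(weights: dict, text: str) -> str:
    z = sum(weights.get(w, 0.0) for w in text.lower().split())
    return "positive" if z >= 0 else "negative"

# Software 3.0: the behaviour is specified in an English prompt.
def build_prompt_v3(text: str) -> str:
    return (
        "Classify the sentiment of the review as 'positive' or 'negative'.\n"
        f"Review: {text}\n"
        "Answer:"
    )

if __name__ == "__main__":
    weights = train_v2(DATASET)
    print(classify_v1("I love it"))
    print(classify_v2(weights, "love great"))
    print(build_prompt_v3("I love it"))
```

In the first version a developer edits the rules, in the second the dataset, and in the third the wording of the prompt, which is the trade-off between paradigms that the summary above describes.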

'Perhaps GitHub code is no longer just code, but a new category of code that mixes code and English is expanding,' said Karpathy. 'This is not just a new programming paradigm, but it's also amazing that we're programming in our native language, English. We have three completely different programming paradigms, and it's really important to be familiar with all of these if you're going into the industry, because each has subtle pros and cons, and certain features might need to be programmed in 1.0, 2.0, or 3.0. Should we train a neural network, or send prompts to an LLM? Should the instructions be explicit code? So we all have the potential to make these decisions and actually move fluidly between these paradigms.'

◆AI is 'electricity'

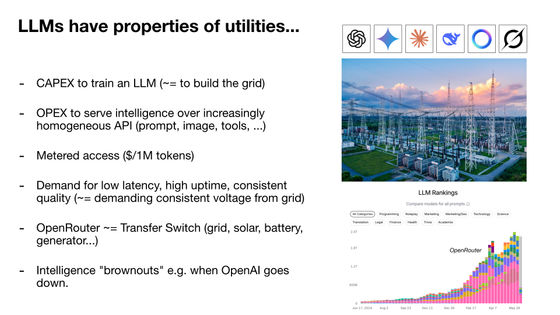

Karpathy believes that 'AI is the new electricity.' LLM labs such as OpenAI, Google, and Anthropic invest in equipment to train their models, which is very similar to building an electric grid, and they also incur operating costs to provide AI through APIs. Access is typically metered, with a fee charged per unit such as 1 million tokens. Users expect the same qualities from these APIs that they expect from a utility: low latency, high uptime, and stable quality. Karpathy also points to past outages, when major LLMs went down and many people were unable to work, as a sign that AI has become indispensable infrastructure like electricity.
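Metered pricing of this kind comes down to simple arithmetic. The sketch below assumes a single flat price per 1 million tokens; the function name and the prices used in the usage lines are hypothetical and are not taken from any provider's price list.

```python
def metered_cost(tokens_used: int, price_per_million: float) -> float:
    """Return the fee for a request under per-million-token pricing.

    Both arguments are illustrative: real providers publish their own
    prices and may bill different kinds of tokens at different rates.
    """
    if tokens_used < 0 or price_per_million < 0:
        raise ValueError("tokens_used and price_per_million must be non-negative")
    return tokens_used * price_per_million / 1_000_000

if __name__ == "__main__":
    # Hypothetical price of 4.0 currency units per 1 million tokens.
    print(metered_cost(250_000, 4.0))
```

Like an electricity bill, the fee scales linearly with consumption, which is why the utility comparison fits.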

But LLMs are not just like electricity or water; they form a more complex ecosystem. For operating systems, there are a few closed-source providers like Windows and Mac, and open-source alternatives like Linux. A similar structure is forming for LLMs, where a few closed-source providers compete with each other, and Llama could become the Linux of the LLM world.

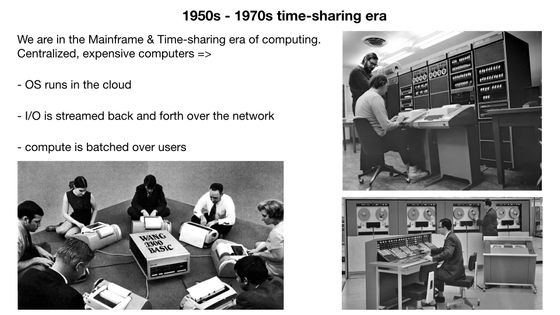

'I felt that LLM was like a new kind of OS. It plays the role of a CPU, and the length of the tokens that LLM can process (context window) is equivalent to memory, and it adjusts memory and computational resources to solve problems. Because it utilizes all of these functions, from an objective point of view, it is very similar to an OS. With an OS, you can download and run software, but there are some LLMs that can do the same thing,' he said.
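The comparison between the context window and memory can be made concrete. The sketch below is an illustration under stated assumptions, not how any particular LLM product works: `count_tokens` is a crude whitespace-based stand-in for a real tokenizer, and `fit_to_context` is a hypothetical helper that keeps only the most recent messages that fit within a fixed token budget, much as a program must fit its working set into limited RAM.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: real tokenizers split text differently.
    return len(text.split())

def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose total token count fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk from newest to oldest so recent context is kept first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept

if __name__ == "__main__":
    history = ["a b c", "d e", "f"]
    print(fit_to_context(history, 3))  # → ['d e', 'f']
```

Dropping the oldest messages first is only one possible policy; summarizing older content is another common choice.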

◆AI is still in development

The computational resources that LLMs require are still too expensive for personal computers, so most high-performance LLMs run on cloud servers. Although models such as

'The personal computer revolution hasn't happened yet because it's not economical. It doesn't make sense. But some people may be trying. For example, the Mac mini is very suitable for some LLMs. It's unclear what shape it will take in the future. Maybe you will invent the shape and mechanism for it,' Karpathy said.

Another feature is that the graphical user interface (GUI), which is commonplace on PCs, has only been partially implemented for LLMs. Chatbots such as ChatGPT basically provide only a text input field. 'I don't think GUIs in the general sense have been invented yet,' Karpathy says.

◆AI technology diffusion is in the opposite direction

Until now, technologies such as the PC have typically been developed by governments for military use, then adopted by companies, and only later spread to ordinary users. AI, by contrast, was widely adopted by ordinary users first, and only afterwards is it being systematized and put to use by companies and governments. 'In fact, companies and governments are not keeping up with the speed at which we are adopting technology. This is a reversal,' said Karpathy. 'What's new and unprecedented is that LLM is not in the hands of a few people or companies, but in the hands of all of us. Because we all have computers, it's all software, and ChatGPT was delivered to billions of people's computers instantly, overnight. This is incredible.'

◆Humanity is in a cooperative relationship with AI

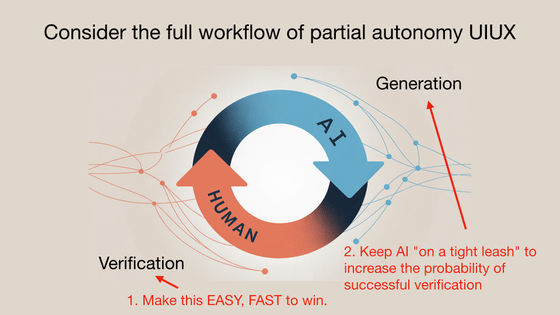

When AI is used, the AI usually generates data and we, the humans, verify it. Speeding up this loop as much as possible is beneficial for both humans and AI.

One way to achieve this, says Karpathy, is to significantly speed up verification. This could be achieved by introducing a GUI. Reading long texts alone is a time-consuming process, but looking at pictures or other non-text elements makes it easier.

The second point is that AI needs to be kept under control. Karpathy points out that 'many people are overly excited about AI agents,' and says that it is important not to trust all of the AI's output, but to check whether the AI is doing the right thing and whether there are any security issues. An LLM is basically a machine that strings together plausible words in a plausible way, so its output is not always correct. It is important to always verify the results.