NVIDIA unveils new technology that cuts VRAM usage of the image generation AI 'Stable Diffusion 3.5 Large' by 40%, from more than 18GB to 11GB

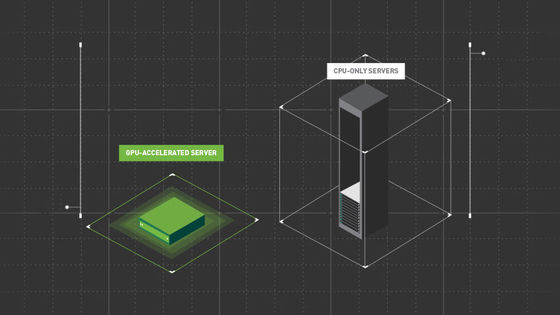

The performance of generative AI is improving every day, but to run better AI, more

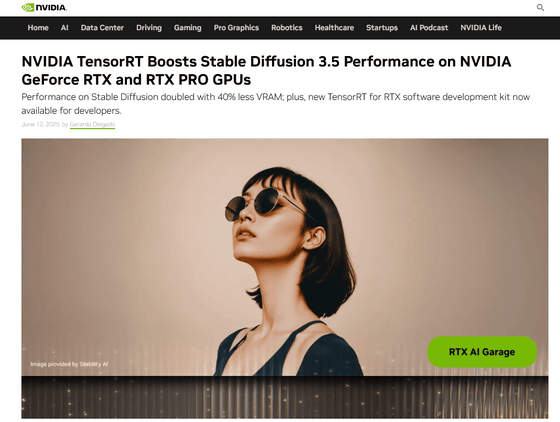

TensorRT Boosts Stable Diffusion 3.5 on RTX GPUs | NVIDIA Blog

https://blogs.nvidia.com/blog/rtx-ai-garage-gtc-paris-tensorrt-rtx-nim-microservices/

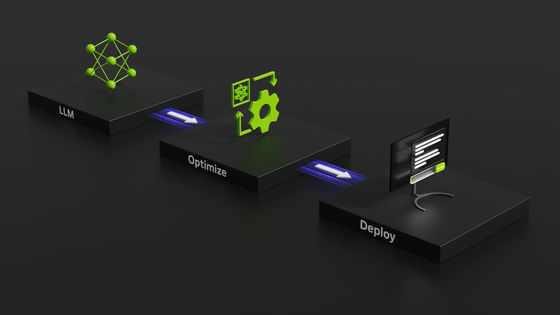

NVIDIA has collaborated with Stability AI, the AI company known for Stable Diffusion, to 'quantize' its latest model, Stable Diffusion 3.5 Large. Quantization is a technique for reducing the size of data by lowering its precision. For example, a 32-bit floating point (FP32) number can express a fine-grained value such as 0.123456, but when converted to an 8-bit integer (INT8) it is mapped to one of only 256 integer values, for example 0 to 255. This lowers precision, but the data shrinks to a quarter of its original size, which reduces memory usage, speeds up calculation, and lowers power consumption.
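The FP32-to-8-bit mapping described above can be sketched in a few lines of Python. This is only an illustration of simple linear (affine) quantization onto the range 0 to 255, not the FP8 scheme NVIDIA and Stability AI actually applied; the function names `quantize_uint8` and `dequantize` and the sample values are made up for this example.

```python
def quantize_uint8(values):
    """Map floats linearly onto the integers 0..255 (illustrative only)."""
    lo, hi = min(values), max(values)
    # One integer step corresponds to `scale` in the original value range.
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all values are equal
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Recover approximate floats from the 8-bit codes."""
    return [c * scale + lo for c in codes]


# Hypothetical sample "weights", including the 0.123456 from the text.
weights = [0.123456, -0.5, 0.75, 0.0]
codes, scale, lo = quantize_uint8(weights)
restored = dequantize(codes, scale, lo)

# Storage cost: 4 bytes per FP32 value versus 1 byte per 8-bit value.
fp32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)

print(codes)
print(restored)
print(f"{fp32_bytes} bytes -> {int8_bytes} bytes")
```

Each restored value differs from the original by at most half a quantization step, which is the precision given up in exchange for storing one byte instead of four.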

NVIDIA quantized the generative AI models using NVIDIA TensorRT, a tool that optimizes deep learning inference, together with RTX GPUs equipped with Tensor Cores.

According to NVIDIA's announcement, the base model of Stable Diffusion 3.5 Large uses more than 18GB of VRAM, but quantizing it to FP8 reduces the VRAM requirement by 40% to 11GB. NVIDIA also reports a performance improvement of 2.3 times for Stable Diffusion 3.5 Large and 1.7 times for Medium.
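As a rough check of those figures, the arithmetic can be written out as below. It assumes exactly 18GB as the baseline (the announcement only says "more than 18GB"), and the helper `fits_in_card` is a hypothetical name that ignores any other memory a real system would need.

```python
base_vram_gb = 18.0  # assumed lower bound; the source says "more than 18GB"
reduction = 0.40     # 40% reduction reported for FP8 quantization

quantized_vram_gb = base_vram_gb * (1 - reduction)
print(f"{quantized_vram_gb:.1f} GB")  # about 11GB, matching the announcement


def fits_in_card(required_gb, card_gb):
    """Naive check: does the model's VRAM requirement fit on the card?"""
    return required_gb <= card_gb


print(fits_in_card(base_vram_gb, 12))       # base model on a 12GB card
print(fits_in_card(quantized_vram_gb, 12))  # quantized model on a 12GB card
```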

In practical terms, a model that needs more than 18GB of VRAM requires a graphics card costing around 200,000 yen to run stably. With the requirement lowered to 11GB, however, a graphics card with 12GB of VRAM costing around 50,000 yen, like the one below, may be enough.

Amazon.co.jp: ASUSTek NVIDIA RTX3060 with Axial-tech fan and 2-slot design DUAL-RTX3060-O12G-V2: Computers & Peripherals

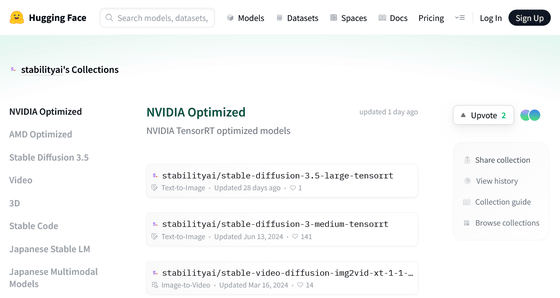

The optimized model is publicly available on Stability AI's Hugging Face page.

NVIDIA Optimized - a stabilityai Collection

https://huggingface.co/collections/stabilityai/nvidia-optimized-684937de2f047a43a8b5456b

NVIDIA and Stability AI are also collaborating to release Stable Diffusion 3.5 as an NVIDIA NIM microservice, making it easier for creators and developers to access and deploy the model for a wide range of applications. The NIM microservice is scheduled for release in July 2025.
