Apple publishes research on AI models that analyze audio to estimate heart rate, potentially improving AirPods heart rate measurement

Apple has published a machine learning research paper titled 'Foundation Model Hidden Representations for Heart Rate Estimation from Auscultation.' The paper examines whether AI foundation models that were never trained to estimate heart rate can nonetheless estimate it accurately. The research suggests that, in the future, AirPods combined with an AI model might be able to measure heart rate without a dedicated sensor.

Foundation Model Hidden Representations for Heart Rate Estimation from Auscultation - Apple Machine Learning Research

Foundation Model Hidden Representations for Heart Rate Estimation from Auscultation

https://arxiv.org/html/2505.20745v1

Apple study shows how AirPods might double as AI heart monitors - 9to5Mac

https://9to5mac.com/2025/05/29/apple-heart-monitor-ai/

AI models analyzing audio from AirPods could detect heart rate

https://appleinsider.com/articles/25/05/29/ai-models-analyzing-audio-from-airpods-could-determine-a-users-heart-rate

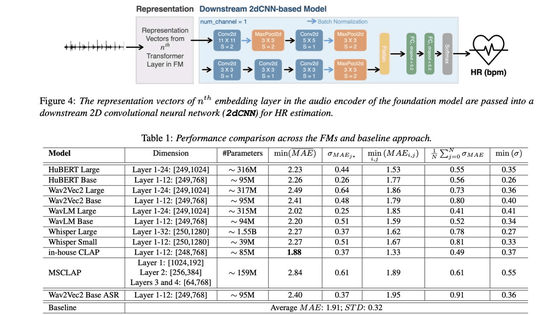

The research team investigated whether six popular foundation models trained on ordinary speech and conversation, including the speech transcription model Whisper, could accurately estimate heart rate from phonocardiograms (recordings of heart sounds).

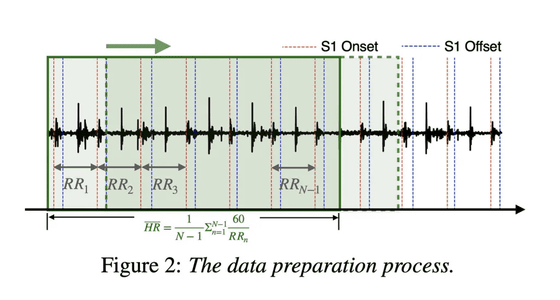

In the study, each AI model processed a total of approximately 20 hours of phonocardiogram recordings rather than speech recordings. The heart sound data came from a publicly available dataset called the CirCor DigiScope Phonocardiogram dataset. Apple split the recordings into short clips of approximately 5 seconds each, which were then processed by each AI model.
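To make the clipping step concrete, here is a minimal Python sketch of cutting one recording into fixed-length clips. It assumes non-overlapping 5-second windows and simply drops leftover samples at the end; the paper's exact windowing (overlap, padding) may differ, and `segment_recording` is a hypothetical helper name. The 4 kHz sampling rate in the example is also an assumption for illustration.

```python
import numpy as np

def segment_recording(signal: np.ndarray, sample_rate: int,
                      clip_seconds: float = 5.0) -> np.ndarray:
    """Split a 1-D recording into non-overlapping clips (hypothetical helper).

    Assumption: no overlap between clips; trailing samples that do not
    fill a whole clip are discarded.
    Returns an array of shape (num_clips, samples_per_clip).
    """
    samples_per_clip = int(round(clip_seconds * sample_rate))
    num_clips = len(signal) // samples_per_clip
    trimmed = signal[: num_clips * samples_per_clip]
    return trimmed.reshape(num_clips, samples_per_clip)

# Example: a 23-second synthetic recording at an assumed 4 kHz sampling rate.
sr = 4000
recording = np.random.default_rng(0).standard_normal(23 * sr)
clips = segment_recording(recording, sr)
print(clips.shape)
```

With these numbers the recording yields four full 5-second clips, and the last 3 seconds are dropped; padding the final clip instead would be an equally reasonable design choice.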

Importantly, even though these foundation models were not designed for health data, the results were remarkably robust: most of the models studied estimated heart rate with accuracy comparable to traditional approaches that rely on hand-crafted acoustic features, which have long been used in conventional machine learning.
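The paper's title refers to a model's hidden representations, meaning the internal activations a pretrained model produces for each clip. A common way to test whether such representations carry heart rate information is to keep the model frozen and fit a small regressor (a "probe") on top of them. The sketch below assumes a closed-form ridge regression probe and uses randomly generated stand-in embeddings; the regressor, the 64-dimensional embedding size, and the names `fit_ridge_probe` and `predict` are all hypothetical, not the paper's confirmed setup.

```python
import numpy as np

def fit_ridge_probe(features: np.ndarray, targets: np.ndarray, alpha: float = 1.0):
    """Fit a ridge-regression probe on frozen embeddings (closed form).

    Assumption: ridge regression stands in for whatever probe the paper used.
    Centering the data keeps the bias term out of the L2 penalty.
    """
    x_mean = features.mean(axis=0)
    y_mean = targets.mean()
    xc = features - x_mean
    yc = targets - y_mean
    dim = features.shape[1]
    # Solve (X^T X + alpha * I) w = X^T y for the probe weights.
    weights = np.linalg.solve(xc.T @ xc + alpha * np.eye(dim), xc.T @ yc)
    bias = y_mean - x_mean @ weights
    return weights, bias

def predict(features: np.ndarray, weights: np.ndarray, bias: float) -> np.ndarray:
    return features @ weights + bias

# Stand-in data: 500 clips whose 64-dim "embeddings" linearly encode
# a synthetic heart rate plus noise (not real model outputs).
rng = np.random.default_rng(0)
true_w = rng.standard_normal(64)
emb = rng.standard_normal((500, 64))
hr = 75 + emb @ true_w + rng.normal(0, 2, 500)

train, test = slice(0, 400), slice(400, 500)
w, b = fit_ridge_probe(emb[train], hr[train], alpha=1.0)
mae = np.mean(np.abs(predict(emb[test], w, b) - hr[test]))
print(f"probe MAE on held-out synthetic clips: {mae:.2f}")
```

Comparing the same probe trained on hand-crafted acoustic features versus foundation model embeddings is one way to frame the comparison described above.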

In addition, Apple's in-house CLAP (Contrastive Language-Audio Pretraining) model outperformed the other foundation models surveyed and performed best overall.

'Our CLAP model's representations from the speech encoder achieved the lowest mean absolute error (MAE) across the various data splits, outperforming baseline models trained on standard acoustic features,' Apple wrote in its results.

The following table summarizes the MAE of each AI model (such as Whisper, wav2vec2, and wavLM) alongside CLAP. CLAP achieved the lowest error (MAE: 1.88), outperforming the other AI models.
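MAE is the average absolute difference between the estimated heart rate $\hat{y}_i$ and the reference heart rate $y_i$ over $N$ clips, so lower is better. Assuming heart rate is expressed in beats per minute (bpm), an MAE of 1.88 would mean the estimates were off by fewer than 2 bpm on average. The three-clip numbers below are made up purely to show the arithmetic:

$$
\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \left|\hat{y}_i - y_i\right|,
\qquad
\frac{|72-70| + |80-81| + |65-68|}{3} = \frac{2 + 1 + 3}{3} = 2.0\ \text{bpm}
$$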

The study also found that larger models do not necessarily perform better: some existing models with large parameter counts appeared to encode less useful cardiopulmonary information, possibly because they are optimized for language.

One key takeaway from this research is that combining traditional signal processing with next-generation AI can make heart rate estimation more reliable, because when one method fails, the other can often fill the gap.
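The article does not say how the two kinds of estimates would be combined. As a rough illustration of the "fill the gap" idea, the sketch below pairs a textbook autocorrelation estimator (a swapped-in classical technique, not necessarily the one used in the paper) with a simple fallback rule. `autocorr_heart_rate`, `fuse_estimates`, the 40 to 200 bpm search range, and the 0.3 confidence threshold are all hypothetical choices for this example.

```python
import numpy as np

def autocorr_heart_rate(clip, sample_rate, min_bpm=40.0, max_bpm=200.0):
    """Classical heart-rate estimate for one clip (hypothetical helper).

    Uses the rectified signal as a crude envelope, computes its
    autocorrelation via FFT, and picks the strongest lag inside the
    plausible beat-period range. Returns (bpm, confidence), where
    confidence is the normalized autocorrelation at that lag.
    """
    envelope = np.abs(np.asarray(clip, dtype=float))
    envelope -= envelope.mean()
    n = len(envelope)
    spectrum = np.fft.rfft(envelope, 2 * n)        # zero-pad to avoid wrap-around
    ac = np.fft.irfft(np.abs(spectrum) ** 2)[:n]   # autocorrelation at lags 0..n-1
    if ac[0] <= 0:
        return None, 0.0                           # silent clip: no estimate
    ac /= ac[0]
    min_lag = int(sample_rate * 60.0 / max_bpm)
    max_lag = min(int(sample_rate * 60.0 / min_bpm), n - 1)
    if max_lag <= min_lag:
        return None, 0.0                           # clip too short for the range
    lag = min_lag + int(np.argmax(ac[min_lag:max_lag + 1]))
    return 60.0 * sample_rate / lag, float(ac[lag])

def fuse_estimates(classical_bpm, classical_conf, model_bpm, min_conf=0.3):
    """Hypothetical fallback rule: average whichever estimates are usable.

    The classical estimate counts only if its confidence clears min_conf;
    the model estimate counts whenever it is available.
    """
    usable = []
    if classical_bpm is not None and classical_conf >= min_conf:
        usable.append(classical_bpm)
    if model_bpm is not None:
        usable.append(model_bpm)
    return float(np.mean(usable)) if usable else None

# Synthetic 5-second clip at 1 kHz with a short pulse every 0.8 s (75 bpm).
sr = 1000
clip = np.zeros(5 * sr)
for start in range(0, len(clip) - 30, 800):
    clip[start:start + 30] = 1.0
bpm, conf = autocorr_heart_rate(clip, sr)
print(round(bpm, 1), fuse_estimates(bpm, conf, model_bpm=None))
```

Real heart sounds contain two main sounds per beat and plenty of noise, which is exactly where a crude estimator like this tends to fail and a learned model could take over.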

The team plans to continue refining its AI models for health-related applications, develop a lightweight version that can run on low-power devices, and explore other body sounds that are worth listening to.

The study makes no clinical claims and no promises about products, but it is entirely possible that Apple could build these AI models into its iPhones, Apple Watches, and AirPods.

in Software, Posted by logu_ii