'The Evolution of Trust' that shows how to 'trust' others based on game theory

Social networking sites have made it easy for many people to communicate with each other, but it is not uncommon for miscommunication to cause mutual resentment and erode trust instead of building it. A website called 'The Evolution of Trust' uses simple simulations to teach you when you should and should not trust others, so I visited it and played around with it.

The Evolution of Trust

Once you access the site, click 'PLAY'.

'During the First World War, peace broke out. It was Christmas 1914 on the Western Front. Despite strict orders not to fraternize with the enemy, British and German soldiers left their trenches to call a truce, bury their dead, exchange gifts, and play games together. Today, the West has been at peace for decades, yet we are not good at trusting each other. Surveys show that over the past 40 years, fewer and fewer people say they trust each other. So here's the puzzle: why do friends become enemies even in peacetime? And why do enemies become friends even in wartime? I think

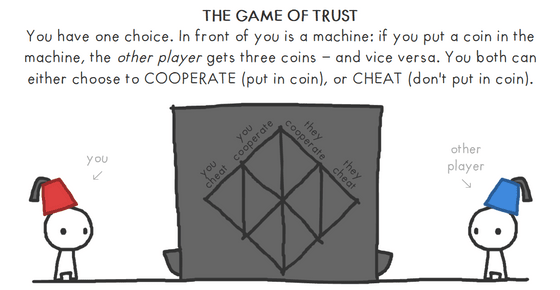

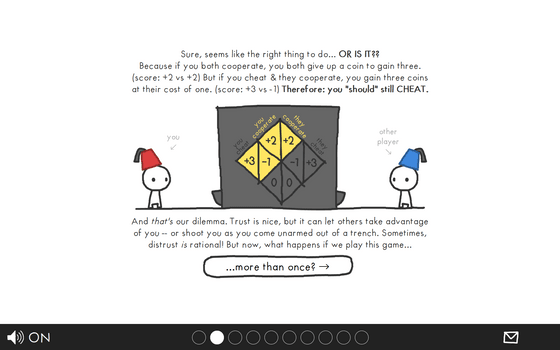

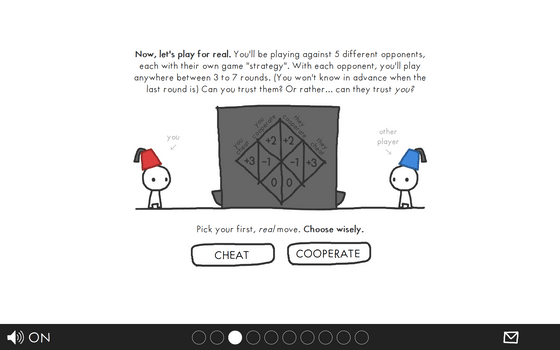

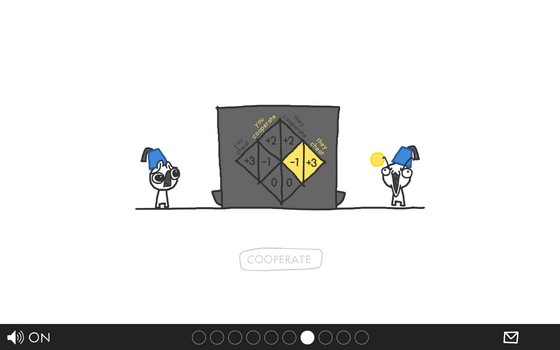

This site centers on a game called 'The Game of Trust.' Two players each stand at a machine and choose either to put in one coin or to cheat and put in nothing. A coin put in by one player pays three coins to the other player. If both players put in a coin, each spends one coin and receives three, for a net gain of two coins. If you cheat while the other player puts in a coin, the other player loses one coin and you gain three. If both players cheat, neither gains nor loses anything.
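The payoff rules above fit in a few lines of code. This is only a minimal sketch: the names `COOPERATE`, `CHEAT`, and `payoff` are made up for illustration and do not come from the site.

```python
# Payoffs as described above: putting in a coin costs you 1,
# and the other player's coin pays you 3.
COOPERATE, CHEAT = "cooperate", "cheat"  # hypothetical labels for the two moves

def payoff(my_move, their_move):
    """Return my net change in coins for a single round."""
    cost = 1 if my_move == COOPERATE else 0
    reward = 3 if their_move == COOPERATE else 0
    return reward - cost

# Print the net result for every combination of moves.
for mine in (COOPERATE, CHEAT):
    for theirs in (COOPERATE, CHEAT):
        print(f"{mine:9} vs {theirs:9}: {payoff(mine, theirs):+d}")
```

Whatever the other player does, cheating pays one coin more than cooperating in a single round, which is what makes the choice a dilemma.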

If both players keep putting in a coin, both benefit, but a player who cheats while the other keeps cooperating ends up with even more coins. Since the players cannot communicate with each other, each has to choose without knowing which outcome will result: both cooperate and both benefit, one cheats and only the cheater benefits, or both cheat and nobody gains or loses. This 'Trust Game' is a type of game called the '

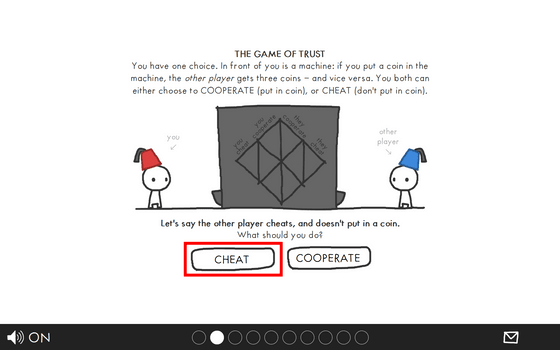

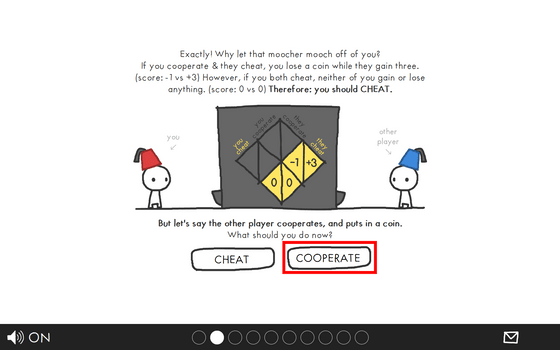

Now, let's say you know that your opponent is going to cheat. You have to choose whether to cheat or cooperate. This time, you don't want to lose, so you'll click 'CHEAT.'

Based on the rules, neither party gains nor loses. Next, assuming that the other person will insert a coin, you again choose whether to cheat or cooperate. This time, I chose to cooperate.

In the end, both of us benefited, but this was only possible because I knew the other person's intentions in advance. From here on, you have to make your choice without knowing the other person's intentions.

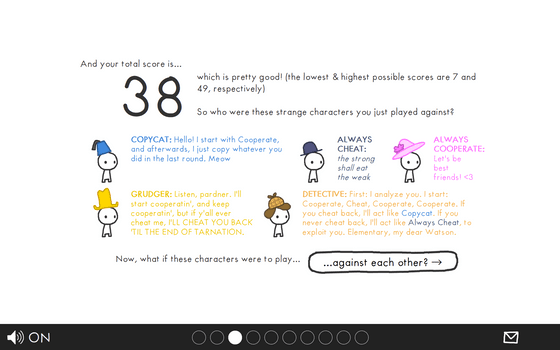

You will then be pitted against five different NPCs, each with their own unique strategies.

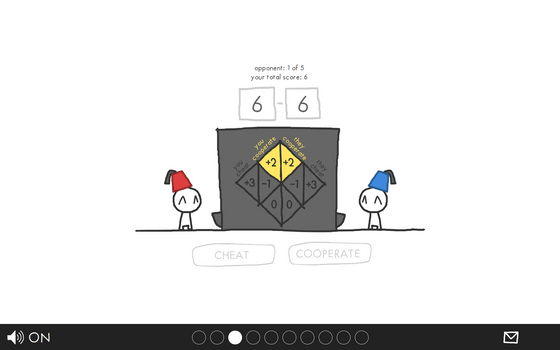

The first NPC was a friendly type who kept cooperating as long as I did.

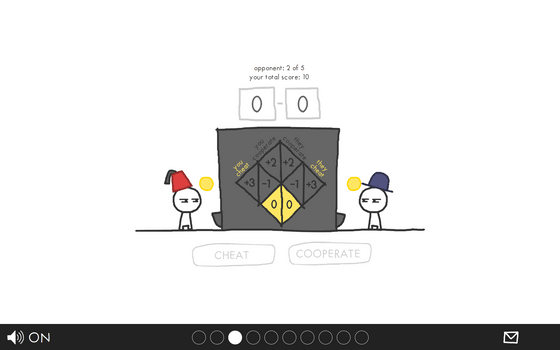

I continued to cheat with the next NPC, but he must have been suspicious because he continued to cheat as well.

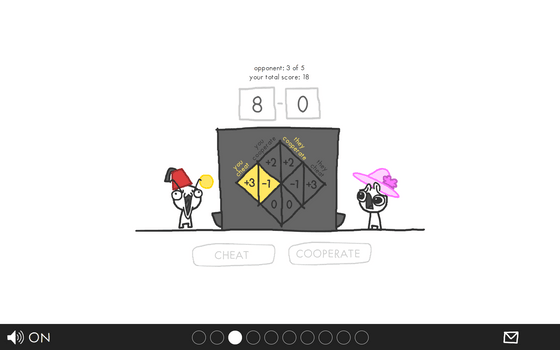

The next NPC was one who would always put in a coin no matter what we did.

There were a total of five types of NPCs that appeared.

Blue Hat: Copycat. Starts off by cooperating, then mimics the player's previous action.

Navy Blue Hat: Always cheats.

Pink Hat: Always cooperates.

Yellow Hat: The Avenger. Starts off cooperating, but once the player cheats even once, cheats for the rest of the match.

Brown Hat: Detective. Opens with Cooperate, Cheat, Cooperate, Cooperate. If the player cheats back, it acts like a Copycat from then on; if the player never cheats back, it always cheats.

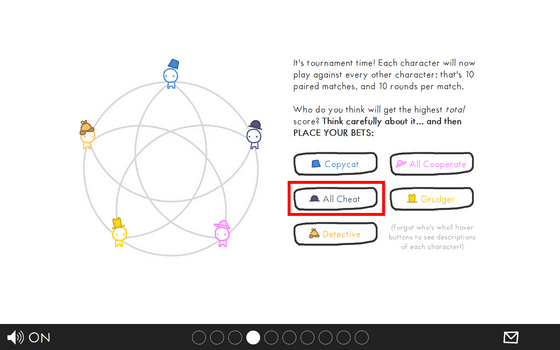

Now that the five NPCs' strategies have been revealed, the site simulates what happens when they play against each other. Each NPC plays 10 rounds against every other NPC, and you guess which one will end up with the most coins by clicking on it. The NPC who always cheats seemed strong, so I chose that one.

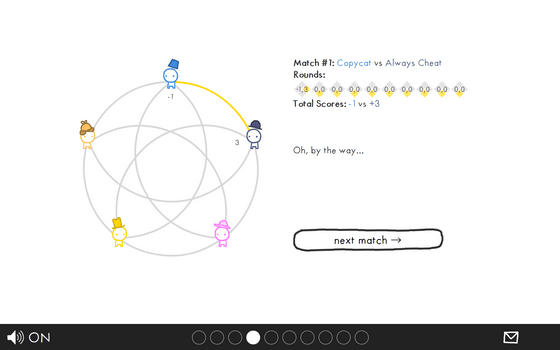

The first match was Copycat vs. Always Cheat. Always Cheat gained coins in the first round, but from then on Copycat imitated it and cheated back, so neither player gained anything more and Always Cheat won the match.

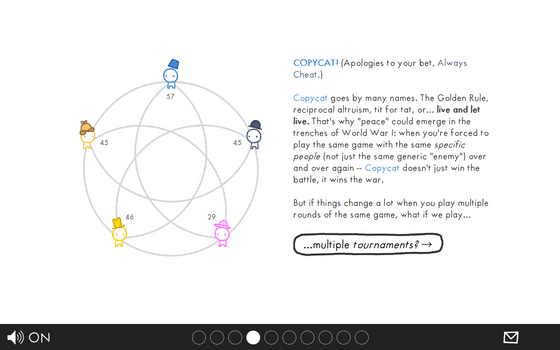

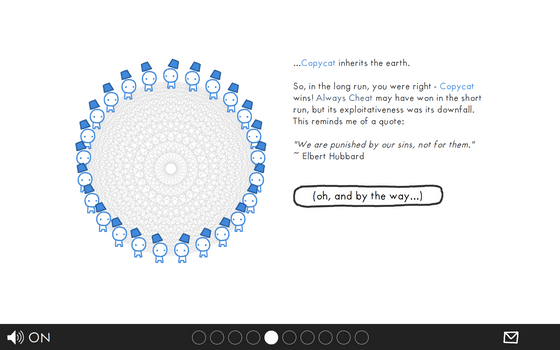

Once all the matches had been played, however, Copycat was the overall winner. 'Copycat is variously called the Golden Rule, Reciprocal Altruism, etc. It's how 'peace' was born in the trenches of World War I. When forced to play the same game over and over again with the same specific people, Copycat not only wins the battle, but the war,' the message reads.
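The five strategies and the 10-round matches can be reproduced with a short simulation. It is a sketch under stated assumptions, not the site's own code: all function names are made up, every pair of strategies is assumed to meet exactly once, and the Detective is assumed to switch to Copycat behavior if the opponent cheats at any point during its four opening rounds.

```python
from itertools import combinations

C, X = True, False  # C = put in a coin (cooperate), X = cheat

def payoff(mine, theirs):
    # A coin I put in costs me 1; a coin the other player puts in pays me 3.
    return (3 if theirs else 0) - (1 if mine else 0)

# Each strategy receives (its own past moves, the opponent's past moves)
# and returns its next move.
def copycat(me, them):
    return them[-1] if them else C

def always_cheat(me, them):
    return X

def always_cooperate(me, them):
    return C

def avenger(me, them):
    return X if X in them else C

def detective(me, them):
    opening = [C, X, C, C]
    if len(me) < 4:
        return opening[len(me)]
    # Assumption: "cheats back" means any cheat during the opening rounds.
    return them[-1] if X in them[:4] else X

def play_match(a, b, rounds=10):
    hist_a, hist_b, score_a, score_b = [], [], 0, 0
    for _ in range(rounds):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        score_a += payoff(move_a, move_b)
        score_b += payoff(move_b, move_a)
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b

def tournament(strategies, rounds=10):
    # Round-robin: every pair of strategies plays one match.
    totals = {s.__name__: 0 for s in strategies}
    for a, b in combinations(strategies, 2):
        score_a, score_b = play_match(a, b, rounds)
        totals[a.__name__] += score_a
        totals[b.__name__] += score_b
    return totals

totals = tournament([copycat, always_cheat, always_cooperate, avenger, detective])
for name, coins in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(name, coins)
```

Under these assumptions Copycat finishes first with 57 coins, ahead of the Avenger (46), the Detective and Always Cheat (45 each), and Always Cooperate (29). Always Cheat wins or ties every individual match it plays but earns nothing once its opponents start retaliating.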

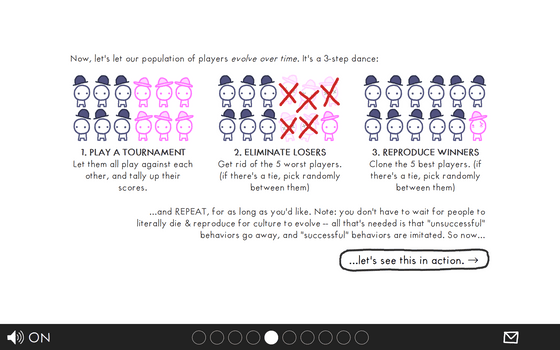

So far, there has been exactly one NPC per strategy, but next we consider a population in which several NPCs share the same strategy. First, all the NPCs play against each other. Then the five with the worst results are eliminated, and five clones of the best-performing NPC are generated to replace them.

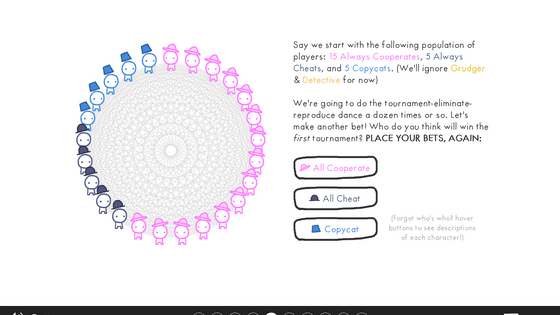

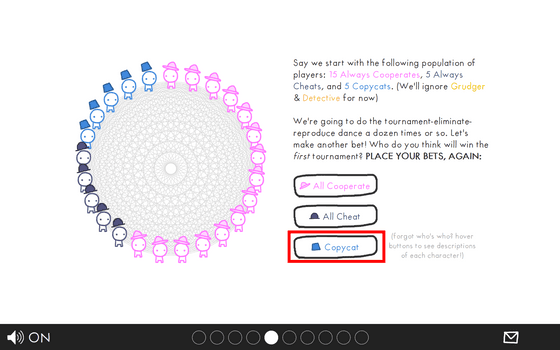

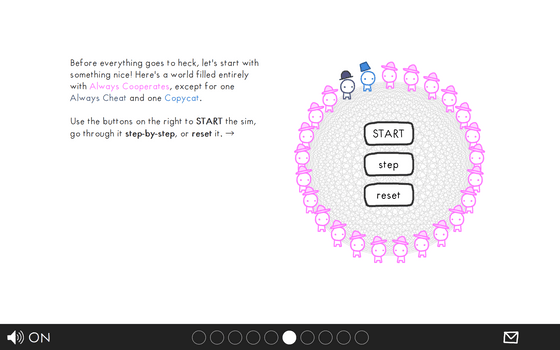

Starting from 15 NPCs who always cooperate, 5 NPCs who always cheat, and 5 copycats, you guess which strategy will be the last one standing. This time, I chose 'copycat.'

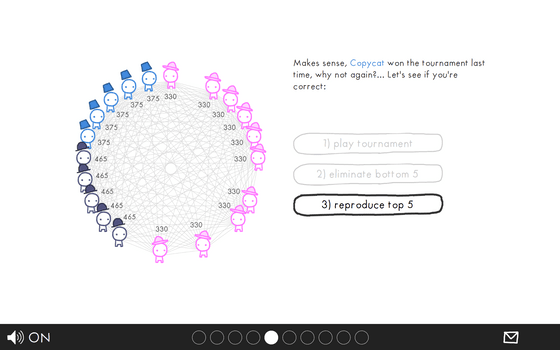

Here is what happens when the simulation is actually run. Since the NPCs who always cooperate always lose to the NPCs who always cheat, their numbers steadily decrease.

The NPCs that always cooperate have disappeared, and the situation is now one of copycats versus NPCs that always cheat, but the copycats are at an overwhelming disadvantage in terms of numbers.

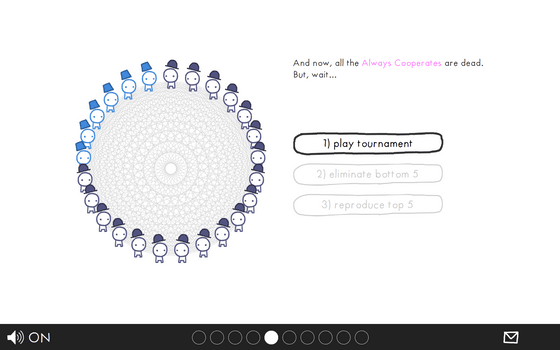

However, in the end, only the copycats remained. This happens because copycats keep earning coins when they play against each other, whereas an NPC that always cheats never gains a single coin in a match against another NPC that always cheats.
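The elimination-and-cloning process can also be sketched in code. This is an illustration under assumptions rather than the site's implementation: the function names are hypothetical, every NPC plays one 10-round match against every other NPC per generation, and ties in score are broken by list order.

```python
C, X = True, False  # C = put in a coin (cooperate), X = cheat

def payoff(mine, theirs):
    return (3 if theirs else 0) - (1 if mine else 0)

def copycat(me, them):
    return them[-1] if them else C

def always_cheat(me, them):
    return X

def always_cooperate(me, them):
    return C

def play_match(a, b, rounds=10):
    hist_a, hist_b, score_a, score_b = [], [], 0, 0
    for _ in range(rounds):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        score_a += payoff(move_a, move_b)
        score_b += payoff(move_b, move_a)
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b

def generation(population, rounds=10, turnover=5):
    """Play everyone against everyone, drop the worst five, clone the best."""
    scores = [0] * len(population)
    for i in range(len(population)):
        for j in range(i + 1, len(population)):
            score_i, score_j = play_match(population[i], population[j], rounds)
            scores[i] += score_i
            scores[j] += score_j
    ranked = sorted(range(len(population)), key=lambda i: scores[i])
    survivors = [population[i] for i in ranked[turnover:]]
    best = population[ranked[-1]]
    return survivors + [best] * turnover

def census(population):
    counts = {}
    for strategy in population:
        counts[strategy.__name__] = counts.get(strategy.__name__, 0) + 1
    return counts

population = [always_cooperate] * 15 + [always_cheat] * 5 + [copycat] * 5
for gen in range(1, 9):
    population = generation(population)
    print(gen, census(population))
```

In this sketch the cheaters first grow by feeding on the cooperators. Once the cooperators are gone, the cheaters earn almost nothing from each other and are replaced by copycats, five at a time.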

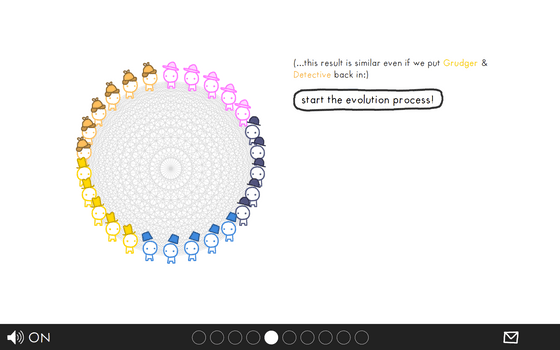

Next up is a match among all five strategies, including the Avenger and the Detective.

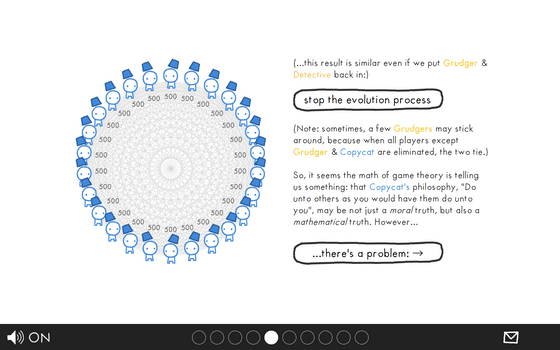

Again, copycats remain.

Next is a simulation with 1 copycat, 24 always-cheating NPCs, and 1 always-cooperating NPC, in which you can set the number of rounds per match as you like. At 10 rounds the copycats still win, but at 5 rounds or fewer the always-cheating NPCs win.

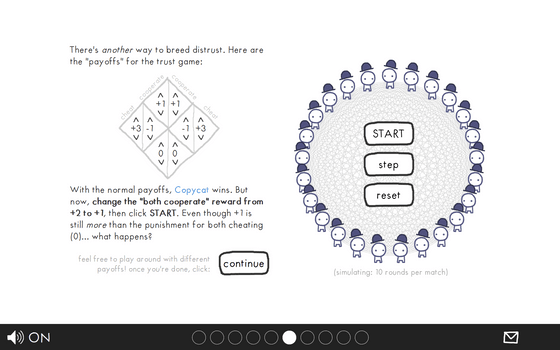

Next, I adjusted the rewards freely and ran the simulation. When the reward for both players putting in a coin was reduced, Copycat always lost to the always-cheating NPC.

Copycat is a good strategy, but it has a fatal flaw. When two copycats play against each other and one of them makes a 'mistake' and fails to put in a coin, they get caught in an endless cycle of revenge. In real life, unlike in the Game of Trust, such mistakes can easily happen.
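A few lines of code are enough to show how a single slip turns into a revenge cycle. This is a minimal sketch: `play_with_mistake` and `show` are hypothetical helpers, and the mistake is forced in round 3 so the result can be reproduced.

```python
C, X = True, False  # C = put in a coin (cooperate), X = cheat

def copycat(me, them):
    return them[-1] if them else C

def play_with_mistake(a, b, rounds, mistake_round):
    """Play a match in which player A's move is turned into a cheat once."""
    hist_a, hist_b = [], []
    for r in range(1, rounds + 1):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        if r == mistake_round:
            move_a = X  # hypothetical one-off slip by player A
        hist_a.append(move_a)
        hist_b.append(move_b)
    return hist_a, hist_b

def show(history):
    return "".join("C" if move else "X" for move in history)

hist_a, hist_b = play_with_mistake(copycat, copycat, rounds=8, mistake_round=3)
print("A:", show(hist_a))  # A: CCXCXCXC
print("B:", show(hist_b))  # B: CCCXCXCX
```

After the slip, the two copycats never cooperate in the same round again. Each one is always punishing the other's previous retaliation.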

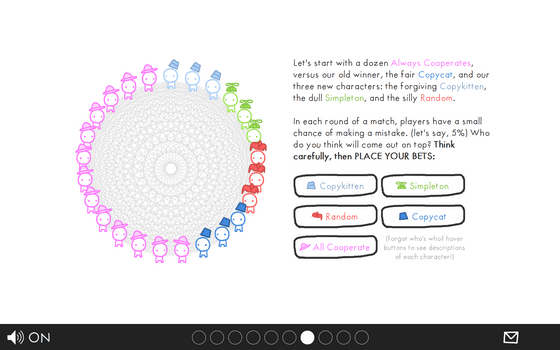

Now consider what happens when some new strategies, including a more forgiving variant of Copycat, are added.

Light Blue Hat: Acts like a copycat, but only cheats back after the other person cheats twice in a row.

Green Hat: Starts by cooperating. If the other person cooperates, it repeats its own previous action; if the other person cheats, it does the opposite of its previous action.

Red Hat: Randomly cooperates or cheats with a 50/50 chance.
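The three new strategies can be written as small decision functions. This is a sketch only: the function names follow the hat colors and are not the site's own names, and each function takes the NPC's own past moves and the other person's past moves.

```python
import random

C, X = True, False  # C = put in a coin (cooperate), X = cheat

def light_blue_hat(me, them):
    # Cheats back only after two cheats in a row from the other person.
    return X if them[-2:] == [X, X] else C

def green_hat(me, them):
    # Repeats its last move after the other cooperates, flips it after a cheat.
    if not me:
        return C
    return me[-1] if them[-1] else (not me[-1])

def red_hat(me, them):
    # Cooperates or cheats with equal probability.
    return random.random() < 0.5

def label(move):
    return "cooperate" if move else "cheat"

# A single cheat is forgiven by the light blue hat; two in a row are not.
print(label(light_blue_hat([C, C], [C, X])))
print(label(light_blue_hat([C, C, C], [C, X, X])))
# The green hat keeps cheating for as long as the other person keeps cooperating.
print(label(green_hat([X], [C])))
```

The last line shows the green hat's exploitative side: once it has cheated, a cooperating opponent gives it no reason to change its move.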

The first simulation starts with copycats, NPCs who always cooperate, and NPCs who act randomly. However, there's a 5% chance that an action will turn out to be a 'miss (cheat).'

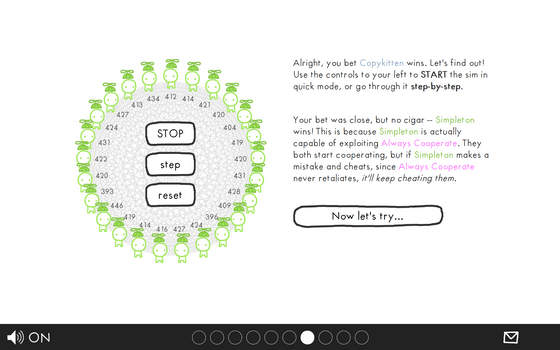

The result is that green wins. Green normally cooperates, but if it cheats by mistake and the other person keeps cooperating, it repeats the cheat and goes on exploiting the other person.

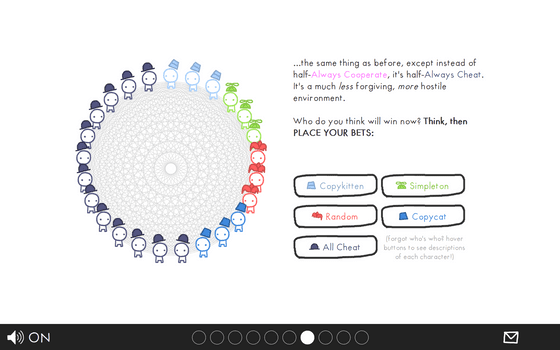

This time, instead of the NPC who always cooperates, you'll have an NPC who always cheats.

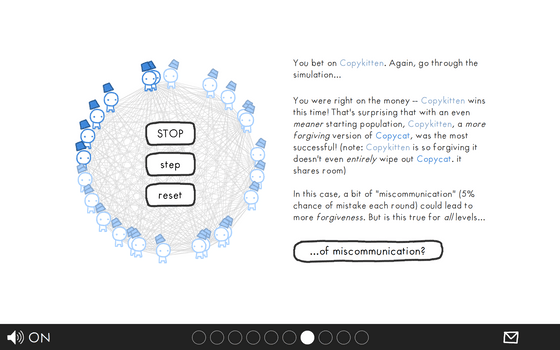

In the end, the light blue one wins. However, the blue copycats don't disappear completely, because the light blue one is tolerant and will forgive a single cheat from a blue one.

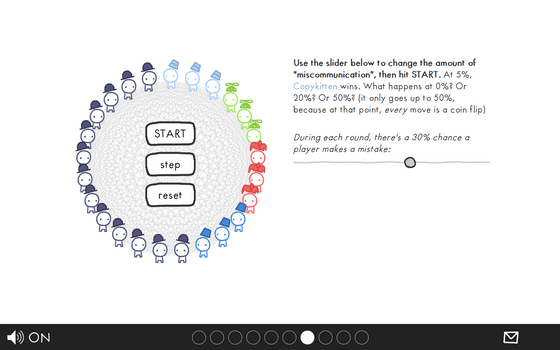

But what would happen if you adjusted the probability of a mistake to your liking? At 0%, the copycat would win, at 1% to 9%, the light blue would win, and at 10% to 49%, the NPC who always cheats would win. At 50%, no one could win. 'This is why miscommunication is a barrier to trust. A little miscommunication leads to forgiveness, but too much leads to distrust. I think that modern media technology is helping to increase our communication, but at the same time, it is also increasing our miscommunication,' the creator wrote.

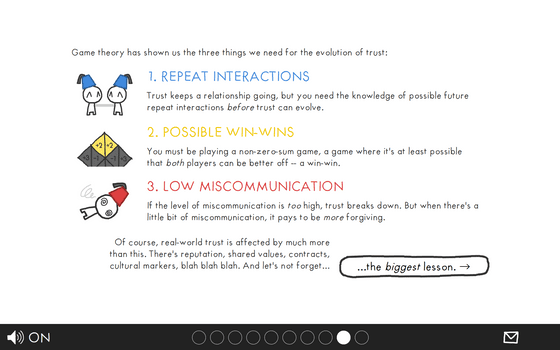

Summing up the game, the creator says, 'Game theory shows three elements necessary for the evolution of trust. The first is repeated interaction: trust keeps a relationship going, but it can only evolve when the players know they may interact again. The second is a non-zero-sum game, that is, a game in which it is at least possible for both players to gain, a win-win. The third is low miscommunication: if the level of miscommunication is too high, trust collapses, but when there is only a little, it pays to be more forgiving. Of course, real trust is influenced by many other things besides this: reputation, shared values, contracts, culture, etc. Our problem today is not just that people are losing trust, but that our environment is a barrier to building trust. So to evolve trust, let's build relationships, find win-wins, and communicate clearly. Then we might be able to stop shooting at each other, get out of our respective trenches and come together.'