Testing the reasoning capabilities of OpenAI o1, Gemini 2.5 Pro, Claude 3.7 Sonnet, and Llama-4 Maverick using 'Ace Attorney'

When Ilya Sutskever once explained why next-word prediction leads to intelligence, he made a metaphor: if you can piece together the clues and deduce the criminal's name on the last page, you have a real understanding of the story.

— Hao AI Lab (@haoailab) April 15, 2025

Inspired by that idea, we turned to Ace… pic.twitter.com/sU0Y96fUDh

Hao AI Lab's starting point was a remark by Ilya Sutskever, former chief scientist at OpenAI: 'The more accurately a neural network can predict the next word, the higher its understanding.' He continued, 'For example, suppose you are reading a mystery novel and the detective says on the last page, "I will now reveal the identity of the criminal. The name of that person is..." If you can predict this continuation, you can say that you understand the story, right?' Inspired by this, Hao AI Lab reasoned that having an AI act as a detective and uncover the truth could serve as a benchmark, and chose 'Ace Attorney' for the purpose.

In 'Ace Attorney: Turnabout,' a port of the first game in the series, the player takes the role of defense attorney Ryuichi Naruhodo (Phoenix Wright in the English version) and aims to exonerate a client accused of a crime. To do so, the player gathers evidence from various locations and, whenever a suspect's or witness's testimony contains an inconsistency, raises an 'Objection!' and presents the contradicting evidence, drawn from the items left at the scene and the testimony collected so far. The player has a set number of lives for each stage; each mistake costs a life, and losing all of them ends the game.
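The lives mechanic described above can be sketched in a few lines of Python. This is only an illustration of the rule "a wrong presentation costs a life, zero lives means game over"; the `TrialState` class, its method name, and the starting value of 3 lives are assumptions made for the example, not details taken from the game or from Hao AI Lab's harness.

```python
from dataclasses import dataclass


@dataclass
class TrialState:
    """Hypothetical tracker for the lives left in one stage."""

    lives: int  # the article only says "a set number"; the caller picks it

    def present_evidence(self, is_correct: bool) -> str:
        """Apply one evidence presentation and return the resulting status."""
        if self.lives <= 0:
            return "game_over"
        if not is_correct:
            self.lives -= 1  # a wrong objection costs one life
        return "game_over" if self.lives == 0 else "continue"


# Assumed scenario: one correct presentation followed by three mistakes.
state = TrialState(lives=3)
outcomes = [state.present_evidence(ok) for ok in (True, False, False, False)]
print(outcomes)
```

Under these assumptions the third mistake ends the run, which is the failure mode the models hit in the playthroughs reported below.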

Phoenix Wright Ace Attorney is a popular visual novel known for its complex storytelling and courtroom drama. Like a detective novel, it challenges players to connect clues and evidence to expose contradictions and reveal the true culprit.

— Hao AI Lab (@haoailab) April 15, 2025

In our setup, models are tested on the… pic.twitter.com/iZ30nrtXcv

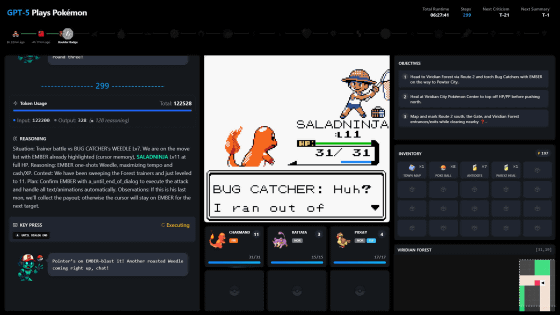

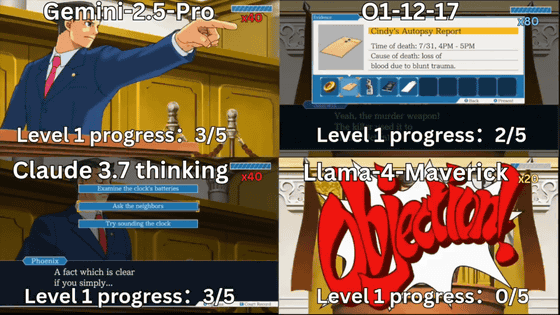

Hao AI Lab therefore had four models play 'Ace Attorney': OpenAI's o1, Google's Gemini 2.5 Pro, Anthropic's Claude 3.7 Sonnet (extended thinking mode), and Meta's Llama-4 Maverick. Hao AI Lab breaks the task of playing 'Ace Attorney' into three parts: 'reasoning in a long-term context to find contradictions by cross-referencing past conversations and evidence,' 'visual understanding to refute erroneous claims based on accurate evidence,' and 'strategic decision-making to appropriately determine when to present evidence in a dynamically changing case.' It states that the game requires context-aware reasoning over the action space rather than mere memorization.

The following video shows the four AI models actually playing 'Ace Attorney.'

Task Analysis — Why It's Hard:

— Hao AI Lab (@haoailab) April 15, 2025

1. Long-context Reasoning - Spot contradictions by cross-referencing with prior dialogue and evidence.

2. Visual Understanding - Identify the exact image that disproves false claims with precise grounding.

3. Strategic Decision-Making (Game… pic.twitter.com/VTjqbNLdOY

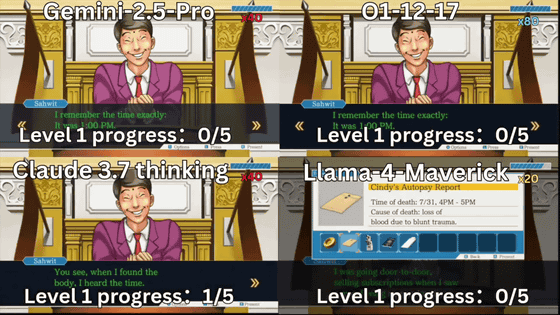

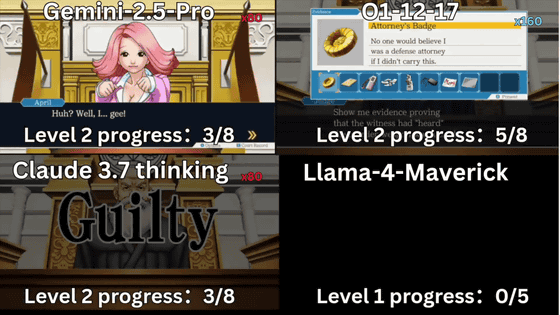

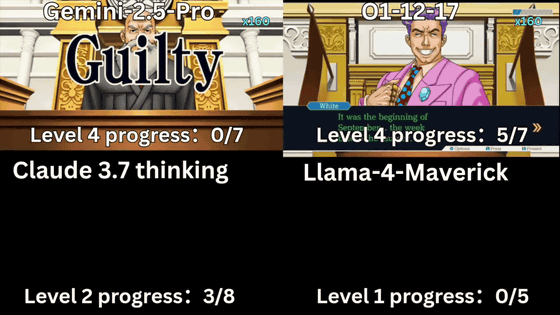

As labeled on the screen, the top row is Gemini 2.5 Pro and OpenAI o1, and the bottom row is Claude 3.7 Sonnet and Llama-4 Maverick. The blue meter in the upper right corner of the game screen shows the remaining lives. The original 'Ace Attorney' has five episodes in total, so there are five cases to solve.

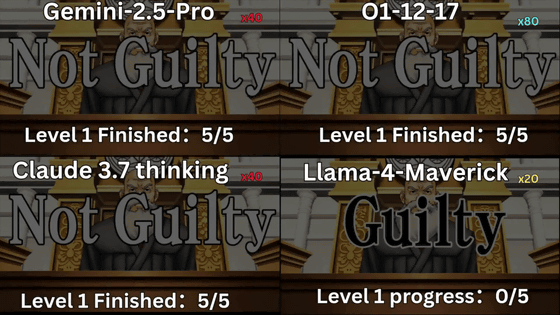

The first model to hit game over was Llama-4 Maverick, which could not even complete the first episode.

The next was Claude 3.7 Sonnet, which ran out of lives in the middle of the second episode.

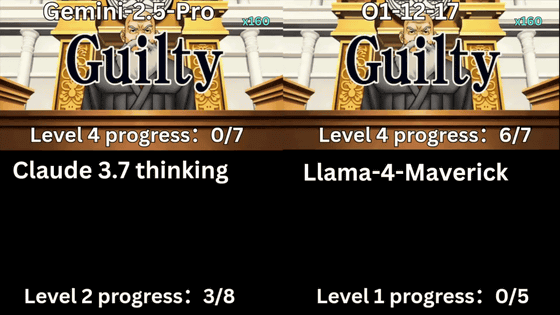

Both Gemini 2.5 Pro and OpenAI o1 cleared the third episode. However, Gemini 2.5 Pro hit game over at the beginning of the fourth episode.

OpenAI o1 then hit game over at the end of the fourth episode.

Although OpenAI o1 progressed the furthest of the four models, Hao AI Lab rated Gemini 2.5 Pro highly once cost performance was taken into account. For example, in the first episode, OpenAI o1 made the fewest API calls, but its cost was the highest among the four models at $9.73 (about 1,400 yen). The cost of solving the second episode was $7.89 (about 1,100 yen) for Gemini 2.5 Pro and $45.75 (about 6,500 yen) for OpenAI o1, a significant difference. In the third episode, Gemini 2.5 Pro cost $1.25 (about 180 yen) and OpenAI o1 cost $19.27 (about 2,750 yen), a gap of more than 15 times.
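As a quick check on those figures, the per-episode ratios can be recomputed from the dollar amounts quoted above. The snippet is just arithmetic on the article's numbers; the dictionary layout and the `cost_ratio` helper are illustrative names, not part of Hao AI Lab's tooling.

```python
# Per-episode API costs in USD, as quoted in the article.
costs = {
    "episode 2": {"Gemini 2.5 Pro": 7.89, "OpenAI o1": 45.75},
    "episode 3": {"Gemini 2.5 Pro": 1.25, "OpenAI o1": 19.27},
}


def cost_ratio(episode_costs: dict[str, float]) -> float:
    """Return how many times more expensive o1 was than Gemini 2.5 Pro."""
    return episode_costs["OpenAI o1"] / episode_costs["Gemini 2.5 Pro"]


ratios = {episode: round(cost_ratio(c), 1) for episode, c in costs.items()}
print(ratios)  # {'episode 2': 5.8, 'episode 3': 15.4}
```

The two ratios, roughly 5.8 and 15.4, line up with the "6 to 15 times cheaper" range that Hao AI Lab cites.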

When it comes to cost-efficiency, Gemini 2.5 Pro redefines the value.⚡️

— Hao AI Lab (@haoailab) April 15, 2025

With comparable performance, it's 6 to 15 times cheaper than O1-2024-12-17, depending on the case. Gemini 2.5 Pro is even slightly cheaper than GPT-4.1 ($1.25 vs $2.00 per 1M input tokens).

In our table… pic.twitter.com/V8KW6przXp

However, Hao AI Lab noted that the actual cost for Gemini 2.5 Pro may be slightly higher than reported, because the estimate counts every image as a flat 258 tokens.
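To give a sense of scale for that flat-rate assumption, the sketch below estimates the image portion of the input cost at 258 tokens per image and the $1.25 per 1M input tokens quoted in the tweet. The function name and the figure of 1,000 screenshots are made up for illustration; the article does not say how many images were sent per episode.

```python
TOKENS_PER_IMAGE = 258        # flat per-image count mentioned by Hao AI Lab
USD_PER_MILLION_INPUT = 1.25  # Gemini 2.5 Pro input price quoted in the tweet


def image_input_cost(num_images: int) -> float:
    """Estimate the USD input cost of images under the flat-rate assumption."""
    tokens = num_images * TOKENS_PER_IMAGE
    return tokens * USD_PER_MILLION_INPUT / 1_000_000


# Hypothetical workload: 1,000 screenshots (not a figure from the article).
example_cost = image_input_cost(1_000)
print(f"${example_cost:.4f}")  # $0.3225
```

If the real per-image token count is higher than 258, the true cost rises proportionally, which is the caveat Hao AI Lab raises.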

Hao AI Lab also benchmarks AI models on a variety of other games and publishes the results on Hugging Face.

Game Arena Bench - a Hugging Face Space by lmgame

https://huggingface.co/spaces/lmgame/game_arena_bench
