DeepSeek and Tsinghua University Researchers Announce New Method to Enhance LLM Inference Capabilities

Researchers at DeepSeek, the Chinese AI startup behind DeepSeek-R1 and other AI models, have developed a new approach to improving the reasoning capabilities of general-purpose large language models (LLMs) and have published a paper, not yet peer reviewed, on the preprint server arXiv.

[2504.02495] Inference-Time Scaling for Generalist Reward Modeling

DeepSeek unveils new AI reasoning method as anticipation for its next-gen model rises | South China Morning Post

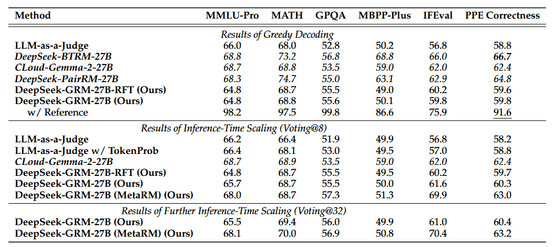

According to the paper, DeepSeek, in collaboration with researchers from Tsinghua University, developed a technique that combines generative reward modeling (GRM) with a method called Self-Principled Critique Tuning (SPCT). SPCT is a new approach that the DeepSeek researchers built on top of GRM.

The team says the technique can produce clear, high-quality "rewards" that adapt flexibly to a wide variety of inputs, which they say significantly reduces inference time and delivers better results faster.
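The paper's title refers to inference-time scaling of a reward model: rather than relying on a single judgment, one can sample several judgments from a generative reward model and aggregate them. The Python below is a minimal sketch of that general idea, assuming simple score summation (voting) as the aggregation step. The names `toy_judge`, `scaled_reward`, and `pick_best` are hypothetical, and `toy_judge` is a random stand-in, not DeepSeek-GRM or any real model API.

```python
import random
from typing import Callable, List

# Hypothetical judge signature: (query, responses, rng) -> one score per response.
Judge = Callable[[str, List[str], random.Random], List[int]]


def toy_judge(query: str, responses: List[str], rng: random.Random) -> List[int]:
    """Hypothetical stand-in for one sampled GRM judgment.

    A real generative reward model would write principles and a critique as text,
    and the scores would be parsed out of that text. Here we fake it: longer
    responses get a higher base score, plus random noise, clamped to 1..10.
    """
    return [min(10, max(1, len(r) // 5 + rng.randint(-2, 2))) for r in responses]


def scaled_reward(query: str, responses: List[str], judge: Judge, k: int, seed: int = 0) -> List[int]:
    """Sample k independent judgments and sum each response's scores (voting)."""
    rng = random.Random(seed)
    totals = [0] * len(responses)
    for _ in range(k):
        for i, score in enumerate(judge(query, responses, rng)):
            totals[i] += score
    return totals


def pick_best(query: str, responses: List[str], judge: Judge, k: int, seed: int = 0) -> int:
    """Return the index of the response with the highest summed score."""
    totals = scaled_reward(query, responses, judge, k, seed)
    return max(range(len(responses)), key=lambda i: totals[i])


if __name__ == "__main__":
    answers = ["short", "a somewhat longer and more detailed answer"]
    print(scaled_reward("q", answers, toy_judge, k=8))
    print(pick_best("q", answers, toy_judge, k=8))  # -> 1 for this toy judge
```

Summing over more samples (a larger `k`) tends to average out the noise of any single judgment, which is the intuition behind spending extra compute at inference time; the aggregation actually used in the paper may be more elaborate than plain summation.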

When the team tested DeepSeek-GRM, a model incorporating the new technique, it reportedly achieved high scores on several benchmarks.

DeepSeek plans to open-source the GRM models but has not given a timeline.

DeepSeek attracted a great deal of attention when it announced the reasoning model DeepSeek-R1 in January 2025. Reuters has reported that its next-generation model, DeepSeek-R2, may be released by May 2025.

DeepSeek to announce further enhanced AI model 'DeepSeek-R2' ahead of schedule by May 2025, and further announce discounts of up to 75% on API usage fees - GIGAZINE

in Software, Posted by log1p_kr