OpenAI issues statement on safety and alignment of AGI (Artificial General Intelligence)

OpenAI, which develops AI such as ChatGPT, aims to develop '

— OpenAI (@OpenAI) March 5, 2025

How we think about safety and alignment | OpenAI

https://openai.com/safety/how-we-think-about-safety-alignment/

OpenAI's mission is to 'ensure that AGI benefits all of humanity,' and this requires safety measures that mitigate the negative effects of AI and secure its positive ones. OpenAI's understanding of AI safety has changed significantly over time, and the statement reflects its current thinking as of March 6, 2025.

In the past, OpenAI thought of the development of AGI as a single point at which AI would become able to solve world-changing problems, but at the time of writing it regards AGI as only 'one point in the increasing usefulness of AI.' In other words, AGI will not appear suddenly but will emerge little by little as AI steadily progresses.

If AGI were to emerge suddenly at a specific moment, AI systems would need to be handled with great caution to ensure safety. This was the thinking behind OpenAI's approach to GPT-2, whose release it delayed because the model was deemed 'too dangerous.' But if AGI arrives through continuous evolution, deploying AI models iteratively, which gives society time to adapt while OpenAI gains a better understanding of safety and misuse, will help make the next AI model safer and more beneficial.

At the time of writing, OpenAI is developing a new paradigm called 'chain-of-thought models,' which perform step-by-step reasoning, and is studying how to make these models useful and safe by learning from how people use them in the real world.

OpenAI is developing AGI because it believes it has the potential to positively transform the lives of all people: 'Because most human improvements, from literacy to machines to medicine, involve intelligence, we believe that most challenges facing humanity can be overcome with sufficiently useful AGI,' OpenAI said in a statement.

OpenAI points out that today's AI systems have problems in three categories:

- Human abuse

OpenAI considers 'abuse' to be the use of AI by humans in ways that go against the law or democratic values, including the suppression of freedom of speech and thought through political bias, censorship, and surveillance. Phishing attacks and fraud are also areas where AI is misused.

- Alignment failure

Alignment failure occurs when AI behavior is not consistent with relevant human values, instructions, goals, or intent -- for example, AI may have negative effects that were not intended by users, influence humans to behave in ways they would not otherwise, or undermine human control.

- Social unrest

AI is driving rapid changes in human society that could have unpredictable and sometimes harmful effects on the world and individuals, including social tensions, growing inequality, and shifts in dominant values and social norms. Furthermore, if AGI is developed, access to AGI could determine economic success, and there is a risk that authoritarian governments could misuse AGI.

OpenAI evaluates the current risks of AI and anticipates and prepares for future ones. It sets out five basic principles that underlie its thinking and actions.

・Embrace uncertainty

To understand the future challenges of AI, it is necessary to test in the real world rather than rely on theory alone, and to draw insights from stakeholders. To ensure safety, OpenAI is working to rigorously measure safety and threats and to mitigate those threats before potential adverse effects surface. In some cases, OpenAI says, it may release an AI only in controlled environments because of concerns about the risks, or release only tools that use the AI rather than the AI itself.

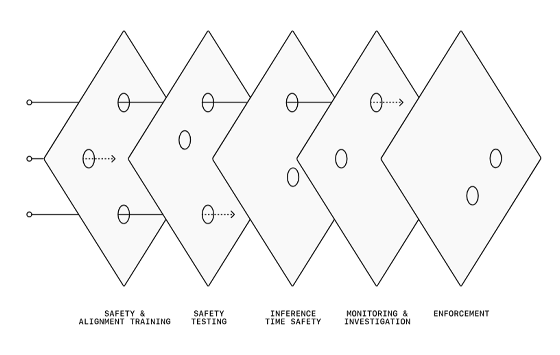

・Multi-layered defense

OpenAI believes that no single intervention is likely to be the solution to creating safe and beneficial AI, so it takes an approach that deploys multiple layers of defenses. For example, it adds a layer of safeguards when training the model, tests components end-to-end rather than only individually, continues to monitor and investigate after deployment, and in some cases implements mandatory rules to ensure safety.

・Scaling method

OpenAI is exploring ways to improve intelligence and alignment as AI models evolve, and has already

・Human control

OpenAI's approach to AI alignment is human-centered and aims to develop mechanisms that allow human stakeholders to oversee AI effectively in complex situations. These include policies that incorporate public and stakeholder feedback into AI training, building nuance and culture into AI models, and allowing for human intervention when necessary, even in autonomous settings.

・Community initiatives

No single organization can ensure that AGI is safe and beneficial for all; open collaboration between industry, academia, government, and the general public is necessary. To that end, OpenAI will publish the results of its safety research, provide resources and funding to the field, and voluntarily work to ensure safety.

OpenAI said, 'We don't know all the answers, and we don't have all the questions. Because we don't know, we accept that we may be wrong in our expectations about how progress will unfold, or in our approaches to the challenges we see. We believe in a culture of healthy debate and seek feedback from people with different perspectives and ideas on AI risks, especially those who disagree with OpenAI's current position.'

in Note, Posted by log1h_ik