Why AI language models become difficult to operate when there are too many tokens to process; computational cost is proportional to the square of the input size

Generative AI, such as ChatGPT, which interprets information obtained from humans and responds to them in a way that is easy for humans to understand, recognizes and processes information in units called 'tokens.' Some recent AI models can process millions of tokens at once, but some believe that this is still insufficient to process the specialized tasks required by humans. However, it is also true that there is a problem that the current AI becomes difficult to operate if the number of tokens that can be processed is increased. Technology media Ars Technica explains why it becomes difficult to operate.

Why AI language models choke on too much text - Ars Technica

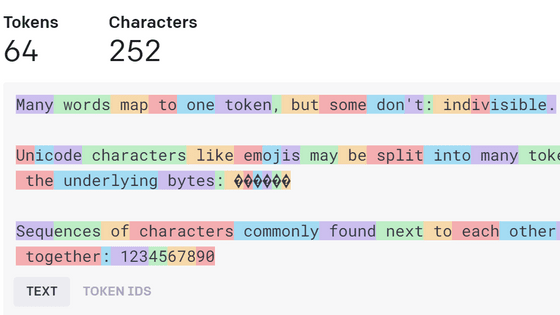

First of all, a 'token' is a unit of information processed by a large-scale language model, and in English, roughly one token is calculated per word. However, there are exceptions depending on the length of the word. For example, OpenAI's 'GPT-4o' processes 'the' and 'it' as one token, but 'indivisible' is divided into multiple parts such as 'ind', 'iv', and 'isible' and processed as three tokens. You can check which words are processed with what number of tokens in the following article.

'Tokenizer' that shows at a glance how chat AI such as ChatGPT recognizes text as tokens - GIGAZINE

Known as a pioneer of generative AI chat services, ChatGPT had a processing capacity of only 8192 tokens when it was first released. This was equivalent to about 6000 words of text, and if it was given more text than this, it would forget information in order from the beginning of the sentence.

This limit is being relaxed as AI models continue to improve over the years: GPT-4o can handle 128,000 tokens, Anthropic's Claude 3.5 Sonnet can handle 200,000 tokens, and Google's Gemini 1.5 Pro can handle 2 million tokens, which are more than enough to entrust AI with small tasks.

Still, there is still a lot of progress to be made if we want AI systems with human-level cognitive capabilities. Humans are creatures that take in a lot of information while they are active. For example, just by looking around, we can recognize and understand a variety of information in a complex way, such as the presence of an object, the object's color and shape, why the object is there, and how physical phenomena work. To achieve human-level intelligence, AI systems will need the ability to absorb a similar amount of information.

According to Ars Technica, the most common way to build a large-scale language model (LLM) system that handles large amounts of information is a technique called search-assisted generation (RAG). RAG is a method of generating information by searching external databases for documents related to a user's question, extracting the most relevant documents, generating tokens, and processing them together with the user's question.

According to Ars Technica, the mechanism by which RAG finds the most relevant documents is not very sophisticated, so for example, if a user asks a complex and difficult-to-understand question, RAG is likely to search the wrong documents and return the wrong answer. In addition, it cannot reason in a sophisticated way about large amounts of documents, so RAG is not suitable for reading large amounts of information, such as 'summarizing hundreds of thousands of emails' or 'analyzing thousands of hours of camera footage installed in a factory.'

From this, Ars Technica points out that 'today's LLMs are clearly subhuman in their ability to absorb and understand large amounts of information.'

Of course, it is true that LLMs absorb superhuman amounts of information when training. Modern AI models are trained on trillions of tokens, far more than humans can read or hear. But much of the information needed to develop specialized knowledge is not freely accessible, and specialized knowledge must be imparted to users when they are actually using the system, not during training. This means that AI models need to read and remember more than 2 million tokens when they are used, which is not easy.

Ars Technica points out that the reason for this is that recent LLMs use an operation called 'attention.' LLMs perform an attention operation before generating a new token, comparing the latest token with the previous token, but this becomes less efficient as the token gets larger.

In the first place, the reason for this type of calculation is due to the performance of the hardware. Originally, CPUs were used for AI processing, and hardware manufacturers initially responded by increasing the clock frequency, which can be thought of as the 'heartbeat' of the CPU, to improve CPU processing performance. However, in the early 2000s, problems such as CPU overheating began to appear, and the limitations of this method began to be seen.

Instead, hardware manufacturers started making CPUs that could execute multiple instructions at once, but they had to execute the instructions sequentially, which meant they weren't very fast. The solution to this was the GPU.

GPU manufacturer NVIDIA developed some GPUs that were good at quickly drawing in-game walls, weapons, monsters, and other objects made up of thousands of 'triangles.' These GPUs had 12 specialized cores that worked in parallel, rather than a single processor that executed instructions one by one, and could draw triangles in any order. Eventually, NVIDIA was able to manufacture GPUs with tens, hundreds, and eventually thousands of cores, and people began using them for things other than gaming.

In 2012, three computer scientists from the University of Toronto used two NVIDIA GTX 580s to train a neural network to recognize images. The massive computing power of the GPUs, with 512 cores each, allowed them to train a network with a then-staggering 60 million parameters, setting a new record for image recognition accuracy.

Researchers have begun to apply similar techniques to a variety of domains, including natural language. One of these is the recurrent neural network (RNN), an architecture for natural language understanding that processes language one word at a time and updates a list of numbers representing the sentences it has understood so far after each word is processed.

RNNs worked well with short sentences, but struggled with longer sentences. When inferring long sentences, RNNs would 'forget' important words early in the sentence. This was remedied in 2014, when computer scientists added a mechanism to RNNs that would 'look back at previous words in the sentence,' significantly improving performance.

However, in 2017, researchers at Google developed a mechanism called the Transformer that completely eliminated RNNs and lists of RNNs. By changing the mechanism from 'processing words in a sentence in order from the beginning' to 'processing all words simultaneously,' the researchers were able to take advantage of the parallel computing power of GPUs while eliminating a serious bottleneck in scaling up language models.

The Transformer has grown the processing power of major LLMs from hundreds of millions of parameters to hundreds of billions of parameters, but due to its feature of 'processing all words simultaneously,' the computational cost increases relentlessly as the number of tokens increases. For example, if a 10-token prompt requires 414,720 attention operations, this will be 45.6 million for 100 tokens and 460 billion for 10,000 tokens.

To optimize this operation, research is underway to extract maximum efficiency from the GPU. One such architecture is the 'Mamba' architecture, which has the potential to combine the performance of a transformer with the efficiency of a traditional RNN.

Ars Technica said, 'The benefits of handling long tokens are clear, but we don't know the best strategy for getting there. In the long term, interest in Mamba or other architectures may grow, or someone may come up with an entirely new architecture that surpasses Mamba and Transformer. If we want a model that can handle billions of tokens, we'll need to think outside the box.'

in Software, Posted by log1p_kr